The phenomenon of "Artificial Intelligence" (AI) is discussed under many different terms today: Artificial Intelligence, Machine Learning, Neural Networks, Representation Learning, Natural Language Processing (NLP) or Deep Learning. This often causes confusion about what exactly each term means and what practical benefit the respective methods actually offer.

For companies in particular, however, it is crucial to know what opportunities arise for them from the respective methods. In view of the enormous growth potential associated with AI alone, companies should address this topic.


The dream of AI

Creating intelligent machines that can behave and think like humans is an old dream of mankind. Ancient myths, such as that of the Golem, already tell of this, and Leonardo da Vinci also tried to design intelligent machines.

In the age of digitalisation, we have reached the point where machines no longer take over only the mechanical processes that were previously carried out by humans. Today, it is also possible to map mental procedures and processes digitally. What is more, with the help of intelligent algorithms, high-performance computers can produce results that are beyond the capacity of humans.

Left: The Golem figure in a scene from the silent film "The Golem: How He Came into the World" (1920) by Paul Wegener. Source: © Deutsches Filminstitut, Frankfurt am Main, Paul Wegener estate - Kai Möller collection. Right: Mechanical robot knight based on an idea by Leonardo da Vinci (c. 1495). Replica from the 17th century. © Photo by Erik Möller. Leonardo da Vinci exhibition "Man - Inventor - Genius", Berlin 2005.

The generic term: Artificial Intelligence (AI)

The victories that programmes like IBM's Deep Blue and Watson or Google DeepMind's AlphaGo have won in chess, Jeopardy! and Go, the "most difficult game in the world", exemplify the status quo of artificial intelligence. Artificial intelligence (AI) is the generic term used to describe phenomena of this kind.

The beginnings of AI research date back to the 1950s, in particular to the considerations that Alan Turing set out in his 1950 essay "Computing Machinery and Intelligence". The Turing Test, named after him, also goes back to this essay. It is used to determine whether a machine has a thinking capacity equivalent to that of a human being.

However, the generic term artificial intelligence needs to be differentiated more precisely. There is a fundamental difference between an AI that is capable of beating a human at chess or Go and an AI with which one can converse naturally as if it were a human. Turing was only concerned with the latter.

But when we talk about certain algorithms as AIs today, we usually mean a "slimmed-down" version. AIs in this sense are intelligent programmes that perform specific, complex tasks which would normally require a high level of intelligence, and that sometimes master these tasks even better than humans.

Artificial neural networks

From today's perspective, the first experiments in which "intelligent machines" were modelled on humans may seem naïve. But strictly speaking, today's developments in the field of AI are also based on findings about humans as intelligent beings. The only difference: the model is no longer human anatomy, but the human brain and its neuronal processes. Like biological neural networks, artificial neural networks consist of nodes, also called neurons.

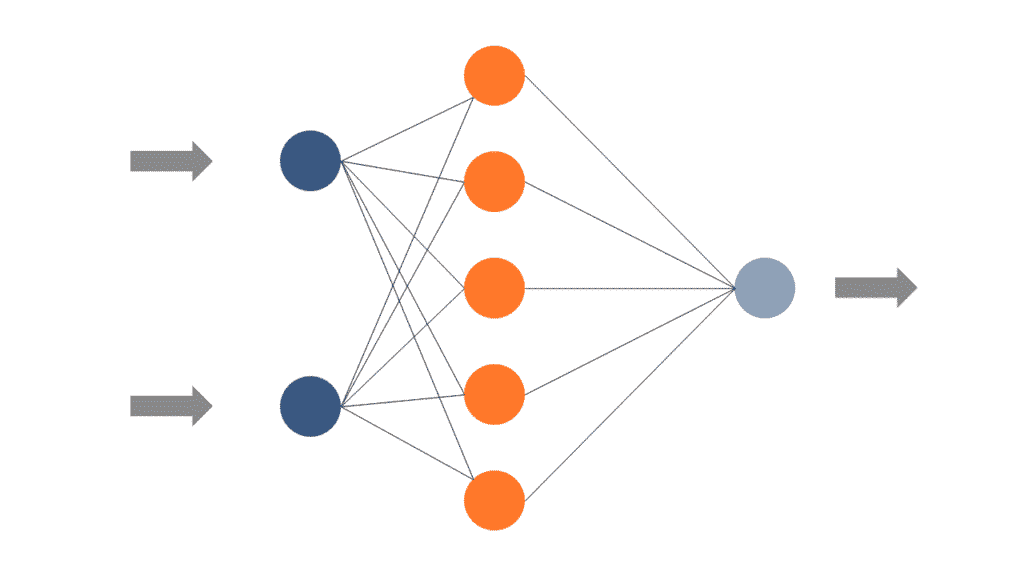

The simplified form of an artificial neural network with an input layer on the left, an activity layer in the middle (also called a "hidden layer") and an output layer on the right.

The neurons of the input layer, also called input units, record environmental information such as measurement data. In medicine, for example, this could be patient data such as weight, body temperature, age, etc. The evaluation then begins, and at its end the output layer delivers a result, in this case "sick" or "healthy".

The evaluation of the data is determined by the edges that connect the individual neurons to each other. Each edge is assigned a certain weight that determines the strength of the connection between the neurons. How strongly a neuron is activated depends heavily on the selected learning procedure (e.g. delta rule or backpropagation). Such procedures are also referred to as "learning rules". The term "training" refers to the phase in which a neural network adjusts these weights on example data before it can be used in practice.
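To make the idea of weights and a learning rule more tangible, here is a minimal sketch of a single neuron trained with the delta rule. The patient data, the scaling of the features and the function names are all assumptions invented for illustration. A real diagnostic model would need far more data and care.

```python
# Toy patient data, invented for illustration: each sample is
# ([scaled body temperature, scaled age], label), with 1 = "sick", 0 = "healthy".
SAMPLES = [
    ([0.10, 0.30], 0),
    ([0.20, 0.60], 0),
    ([0.15, 0.45], 0),
    ([0.70, 0.40], 1),
    ([0.80, 0.70], 1),
    ([0.90, 0.20], 1),
]


def weighted_sum(weights, bias, features):
    """Output of one linear neuron: each input multiplied by its edge weight."""
    return bias + sum(w * x for w, x in zip(weights, features))


def train_delta_rule(samples, epochs=1000, learning_rate=0.1):
    """Delta rule: shift every weight in proportion to the error and its input."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, target in samples:
            error = target - weighted_sum(weights, bias, features)
            for i, x in enumerate(features):
                weights[i] += learning_rate * error * x
            bias += learning_rate * error
    return weights, bias


def diagnose(weights, bias, features):
    """Turn the neuron's output into one of the two results of the output layer."""
    return "sick" if weighted_sum(weights, bias, features) >= 0.5 else "healthy"


weights, bias = train_delta_rule(SAMPLES)
print(diagnose(weights, bias, [0.85, 0.5]))  # a new, unseen patient
```

The network here has no hidden layer at all, which keeps the learning rule visible. Backpropagation extends the same error-driven idea to networks with hidden layers.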

In our blog article on artificial neural networks and the success of machine learning, you can learn even more about this topic.

Machine Learning

Machine learning is an umbrella term for a class of learning algorithms that can learn from "experience". Similar to us humans, machines can learn in this way from a large number of example cases and abstract a general rule. After a learning phase, these insights can then be applied to real cases. An important area of application for machine learning algorithms is, for example, the detection of credit card fraud.

The range of machine learning methods is enormous. To name just a few examples:

- Regression analysis,

- Decision tree,

- Support vector machine or

- Deep Learning.

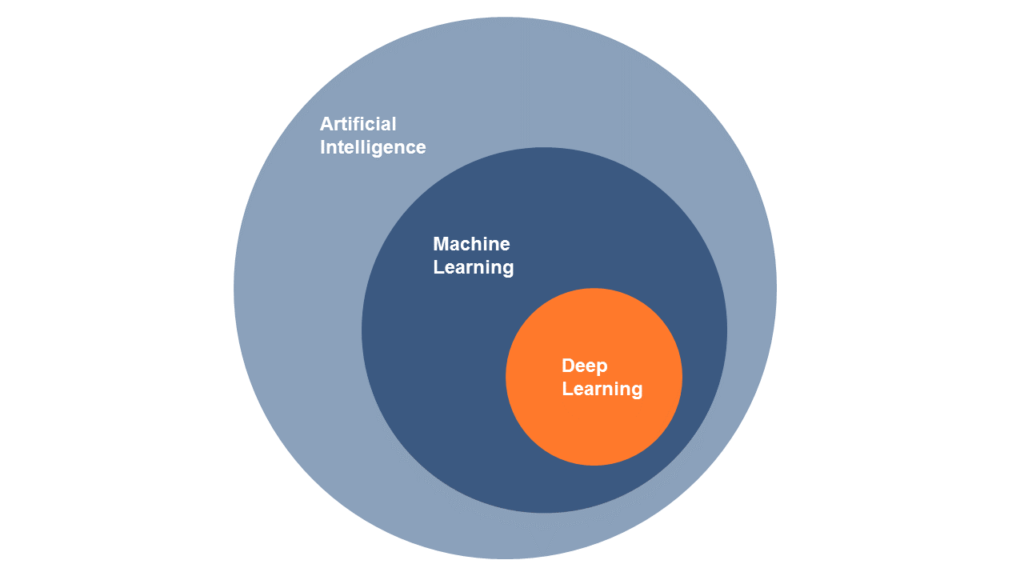

The last example also shows why there is often conceptual confusion when talking about artificial intelligence: not everything that is artificial intelligence is necessarily machine learning, and not every machine learning method is automatically deep learning. The reverse, however, does hold: all deep learning is machine learning, and all machine learning is artificial intelligence. The illustration makes this relationship clear once again.

Source: Own representation based on codesofinterest.com

Not all machine learning is alike, either. Within machine learning there is a range of learning methods. The two best known are supervised machine learning and unsupervised machine learning. Both serve different purposes and differ fundamentally. In supervised machine learning, a so-called "teacher" gives feedback to the machine learning algorithm during the training phase. From this feedback, the algorithm learns whether the respective result is correct or incorrect.

In Unsupervised Machine Learning, there is no such teacher. Rather, the algorithm is supposed to recognise rules or patterns in data on its own. This is why unsupervised machine learning is used to explore specific data sets, while supervised machine learning is used for more concrete questions.
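The difference between the two learning methods can be sketched in a few lines. The example below is a simplified illustration under stated assumptions. The data points and labels are invented, the supervised part uses a nearest-centroid classifier, and the unsupervised part uses a naive k-means clustering. Neither is meant as a recommendation for real fraud detection.

```python
def mean_point(points):
    """Component-wise average of a list of points."""
    count = len(points)
    return tuple(sum(p[i] for p in points) / count for i in range(len(points[0])))


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


# Supervised: the labels play the role of the "teacher".
def fit_nearest_centroid(points, labels):
    groups = {}
    for point, label in zip(points, labels):
        groups.setdefault(label, []).append(point)
    return {label: mean_point(members) for label, members in groups.items()}


def classify(centroids, point):
    return min(centroids, key=lambda label: squared_distance(centroids[label], point))


# Unsupervised: no labels, the algorithm has to find the groups on its own.
def k_means(points, k=2, iterations=10):
    centroids = list(points[:k])  # naive start: the first k points
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for point in points:
            nearest = min(range(k), key=lambda i: squared_distance(centroids[i], point))
            clusters[nearest].append(point)
        centroids = [mean_point(c) if c else centroids[i] for i, c in enumerate(clusters)]
    return clusters


# Invented toy data: two features per transaction, already scaled.
POINTS = [(1.0, 1.2), (0.8, 1.0), (1.2, 0.9), (5.0, 5.2), (5.3, 4.8), (4.9, 5.1)]
LABELS = ["normal", "normal", "normal", "suspicious", "suspicious", "suspicious"]

model = fit_nearest_centroid(POINTS, LABELS)
print(classify(model, (5.1, 5.0)))  # supervised: answers a concrete question
print(k_means(POINTS))              # unsupervised: explores the structure of the data
```

Note that the clustering returns groups without names. Deciding what a discovered group means is still left to a human.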

If you want to know even more about the concrete application possibilities of machine learning, also read our article on "Machine Learning in Industry 4.0".

Deep Learning or Deep Neural Networks

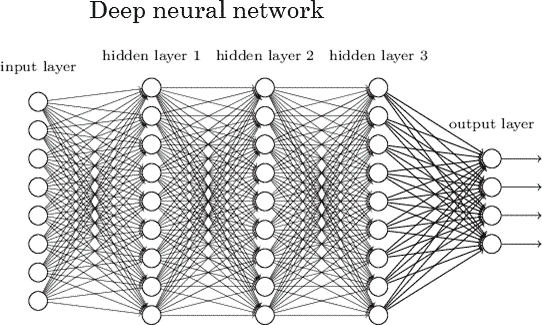

Deep learning is a special class of optimisation methods for artificial neural networks, which is why such networks are sometimes called "deep neural networks". The main difference from simple networks lies in the number and complexity of the intermediate layers, the so-called "hidden layers".

Deep Learning has become one of the central development drivers in the field of artificial intelligence in recent years for two reasons: Firstly, because Deep Learning achieves particularly good results when large amounts of data (Big Data) are available with which a network can be trained. And secondly, because deep-learning algorithms have made intellectual and mental processes representable that were long assumed to be reserved for humans.

Source: rsipvision.com

In a deep learning algorithm or deep neural network, there are numerous hidden layers between the input and output layers.
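What "numerous hidden layers" means in practice can be shown with a short forward pass. The following sketch makes several assumptions: the layer sizes, the random weights and the sigmoid activation are chosen purely for illustration, and no training takes place. It only shows how data flows from the input layer through several hidden layers to the output layer.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """One layer: every neuron forms a weighted sum of all inputs plus a bias."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Pass the data through all layers, one after the other."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


def build_random_layers(sizes, seed=0):
    """Create untrained layers; sizes lists the neurons per layer, input first."""
    rng = random.Random(seed)
    layers = []
    for n_inputs, n_neurons in zip(sizes, sizes[1:]):
        weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)] for _ in range(n_neurons)]
        biases = [0.0] * n_neurons
        layers.append((weights, biases))
    return layers


# 3 input units, three hidden layers with 4 neurons each, 1 output unit.
deep_network = build_random_layers([3, 4, 4, 4, 1])
print(network_forward([0.5, 0.1, 0.9], deep_network))
```

A shallow network would use a list such as `[3, 4, 1]` instead. The code stays the same, only the number of hidden layers changes.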

Two of the most prominent examples are speech and face recognition. Siri, Cortana & Co., chatbots or the new Google Image Search are examples of applications that would not exist without deep learning. The algorithms of chatbots, for example, learn with every question they are asked and thus improve themselves. It is this ability to keep learning from large amounts of data across many hidden layers that sets deep learning algorithms apart from "normal", shallow artificial neural networks.

Evolutionary Algorithms (EA)

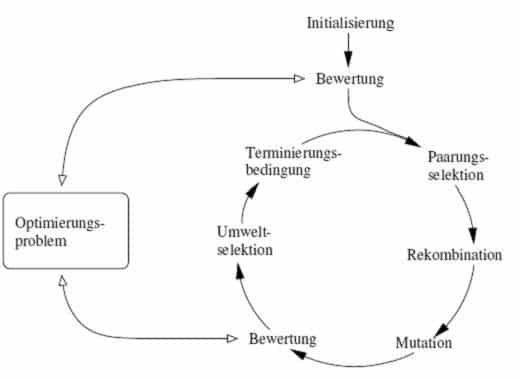

Another class of intelligent algorithms also tries to learn from nature how intelligent, adaptive systems work. In this case, however, the model is not the brain but evolution. As in biological evolution, evolutionary algorithms build different generations on top of each other, in this case generations of solution approaches. In keeping with the motto "survival of the fittest", each candidate solution is evaluated and, depending on the evaluation, selected to enter the next generation in mutated or recombined form.

Graphical representation of the selection mechanism leading to the evolutionary development of a solution approach. Source: schneider-m.com

The more generations of solutions are created in this way, the better the solution tends to become. For companies, EAs are particularly interesting for optimisation tasks, such as the development of new products. Financial market products can also be optimised with EAs for maximum profit and minimum risk. This can be done, for example, by simulating portfolios with different compositions in order to determine the best possible composition.
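The cycle of evaluation, selection, recombination and mutation can be sketched as follows. This is a deliberately small example under stated assumptions: the fitness function with its optimum at 3 is a stand-in for a real objective such as portfolio return versus risk, and the population size, mutation strength and selection scheme are arbitrary choices.

```python
import random


def fitness(x):
    """Toy objective, assumed for illustration: the best possible value is x = 3."""
    return -(x - 3.0) ** 2


def evolve(generations=50, population_size=20, mutation_scale=0.5, seed=1):
    rng = random.Random(seed)
    population = [rng.uniform(-10.0, 10.0) for _ in range(population_size)]
    for _ in range(generations):
        # Evaluation and selection: only the fitter half survives.
        population.sort(key=fitness, reverse=True)
        parents = population[: population_size // 2]
        children = []
        while len(parents) + len(children) < population_size:
            mother, father = rng.sample(parents, 2)
            child = (mother + father) / 2.0           # recombination
            child += rng.gauss(0.0, mutation_scale)   # mutation
            children.append(child)
        population = parents + children
    return max(population, key=fitness)


best = evolve()
print(best, fitness(best))
```

Because the surviving parents are carried over unchanged, the best solution found so far is never lost from one generation to the next.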

How intelligent will machines become in the future?

With all the achievements and more than impressive feats that AI has already produced, the legitimate question is what the future will bring. We are still far from creating an AI that comes close to the complexity and universal applicability of humans. Rather, it is special tasks in which machines are superior to humans.

One crucial ingredient that will determine whether we see this form of AI passing the Turing test in the near future is the quantum computer. The human brain will also retain another advantage for a long time to come: no artificial system will be able to perform computing operations in such an energy-efficient way anytime soon.

Still a dream of the future, but already in its early stages of development: machines that understand feelings thanks to "Affective Computing".

Another quantum leap in the development of AI will be the ability to pass on what has been learned. Let's assume that a robot equipped with AI has learned how to distinguish screws from nails. This knowledge would also be useful for many other robots working in similar situations.

If this knowledge were easily transferable, not every intelligent system would have to learn every single ability itself, but could simply call up certain abilities, similar to the film The Matrix. The vision of the future depicted in the film could soon become reality, at least for machines.
