At first it sounds trivial when it is emphasised that good data quality is crucial for companies and organisations. On the one hand, it can ensure the reliability of processes. On the other hand, errors in data inventories can actually result in enormous financial follow-up costs under certain circumstances.

Quite independently of this, a bad Data quality to the fact that Data no longer correctly represent reality and thereby increase their Value lose. Only if optimal data quality is ensured can models make accurate statements about certain conditions, for example in the networked factory or in other operational areas.

Reading tip: Read the following article to learn how to every factory can become part of Industry 4.0.

Inhaltsverzeichnis

Definition of data quality and why it is important

For decision-makers, data quality is important because it relies on Basis from Data analyses Making decisions or Market opportunities rate. Data quality and decision quality are therefore directly related. Data quality can be defined as follows: Data quality is characterised by the fact that data must be able to fulfil the purpose in a certain context.

Five main criteria can be identified that interact to ensure data quality: Correctness, Relevance and Reliability of all data, as well as their Consistency and lastly their Availability on different systems.

In addition to this rather narrow definition, a whole range of terms can be named that also influence data quality:

- Accuracy

- Completeness

- Topicality

- Relevance

- Additional benefits

- Consistency across multiple sources

- Representation

- Interpretability

- Comprehensibility

- Accessibility

- Reliability of the system

We have bundled this variety of aspects into the 5 most important measures that, in our experience, lead to better data quality.

1. introduce data catalogue

Data quality can be ensured even before the actual process of data collection. Through the pre-conceptualised definition of a Data catalogue of properties - so-called metadata - with which all data objects are equipped. This data catalogue is an important means of identifying data later for analysis and at the same time serves the purpose of checking data for their Completeness and their Consistency ensure

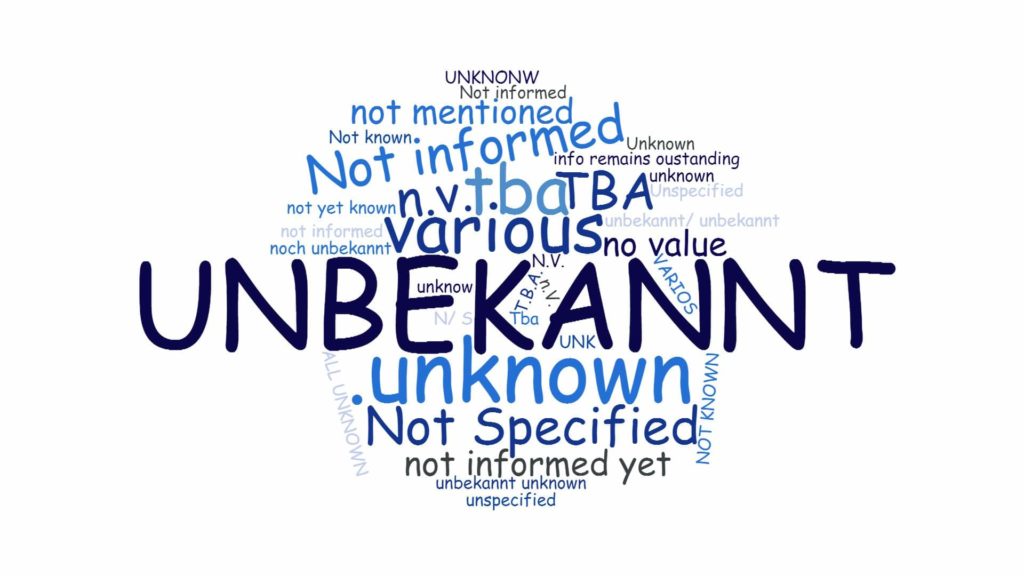

The entire data stock can be structured uniformly in this way and protected from both redundancies and duplicates. The following figure shows the Visualisation of a data setwhich was created without a data catalogue. All attributes that were not present were filled in the data set with a wide variety of values such as "unknown", "unknown", "various" or "N/S". In a data catalogue, a uniform value such as "unknown" would have been used for all unknown values.

2. the first-time-right principle

Incomprehensible, inaccurate or incomplete entries are a source of errors that quickly multiply and are very tedious and time-consuming to correct. Instead of checking the correctness of data afterwards, it is therefore advisable to ensure that all data is correct directly during the creation or collection. This measure is also called "First-Time-Right Principle". Correctness must be ensured directly during data entry. In sensitive cases, this rule can be changed by means of the Four-eyes principle complete.

The first-time-right principle applies to all employees, but also to automatically collected data collection systems that are incorrectly calibrated or provide false readings for other reasons. Wherever data is created, the responsible persons must ensure that data is stored correctly the first time. The aim of the First-Time-Right principle is to Topicality, the Completeness and the Scope of information from Ensure data.

3. data cleansing & data profiling

The phenomenon is also known from private systems: data rubbish slows down systems and processes. That is why data media must be cleaned regularly. A number of applications and tools are available for this purpose. Algorithms are available. These check data types and convert them, recognise and delete duplicates or complete incomplete data. Data Cleansing is hardly ever done manually any more due to the large data stocks - even if this happens again and again in certain cases.

With already existing programmes and algorithms, the clean-up can be carried out efficiently. Within the framework of the Data profiling data is systematically examined for errors, inconsistencies and contradictions. The goals of data cleansing and data profiling are:

- Contradictions Avoid within datasets

- Interpretability of the data received

- Danger of manipulation Prevent the data

- Integrity Ensure the data

4. data quality management for permanent access to data

A systematic Data quality management contributes permanently to maintaining a high level of data quality. One strategic option that is available for data quality management is: Data governance. There are many different definitions of the term data governance.

The important aspect for this context is that access to all relevant data must be permanently ensured. This is achieved by clarifying responsibilities and assigning access rights, which must be considered and up-to-date. The goal of data quality management is always to ensure the Ensure system access and also the Ensure system security. Data quality management therefore also includes the Integration of all datain other words: the dismantling of data silos.

Reading tip:Read our basics article to find out why Data analytics or data science is a key to digital transformation.

5 The Closed Loop Principle

Ensuring optimal data quality is not a singular challenge, but an iterative process that must be firmly anchored in companies and organisations. Because this process is repeated over and over again, it is also referred to as the Closed-loop principlethat underlies it. Optimising data quality is therefore a Dynamic, continuous improvement processwhich should be integrated into all central business processes. To ensure consistently high quality, it is advisable to hold training sessions and workshops at regular intervals to ensure sustainable success.

On the way to better data: Identifying and assigning responsibilities

One of the crucial questions leading to better data quality is: "Who is responsible for the individual measures?" The driver for data quality is often IT compliance or process integration, because it is precisely here that compliance with existing laws such as data protection law and adherence to standards is relevant. Companies that are looking for a "holistic" answer to the increasingly complex challenges can find a solution in the context of a Data Custodianship Define different roles at all levels and clearly distribute responsibilities.

This step alone is worthwhile because the causes of poor data quality, apart from the lack of responsibilities, are often incorrect entries or double entries (duplicates), regional differences in interpretation or redundant information, i.e. they can happen at the most diverse levels.

In order to define tasks more clearly Data quality initiatives be carried out in which data quality-critical areas are identified and data quality review processes are defined. This effort is worthwhile against the background of the overarching goal of a better data quality: The Increasing the return on investment and the long-term preservation of the Value of the data.

0 Kommentare