With the EU AI Act, artificial intelligence and AI governance are more in the focus of political and economic decision-makers in Europe than ever before. In April 2021, the EU Commission presented, within the framework of the EU AI Strategy, a draft of the Artificial Intelligence Act (EU AI Act) and thus set out a first proposal for a legal framework for the ethical handling of AI systems. For users, the controversially discussed draft regulation holds both opportunities and risks. With this post we would like to give a concise overview of the thematic priorities and challenges of the EU AI Act.

EU AI Act

Artificial intelligence (AI) is one of the defining technologies of the present - and the future. Already today, algorithmic decision-making processes play a significant role in the everyday lives of European citizens: when Googling, when planning a commute, in the Instagram feed, or when checking the creditworthiness of potential customers at the click of a mouse. Public institutions are also making use of AI systems. Recently, for example, the use of so-called job centre algorithms and of a system for predicting benefit abuse caused great indignation and prompted calls for a legal basis.

One thing is clear: in the application of AI systems, the line between (ethically) right and wrong is often not clear-cut - the specific use case is decisive. This goes hand in hand with the growing need of many interest groups from business and science for a normative framework and legal certainty, because it is equally clear that the enormous potential of AI systems should be put to use.

With the EU AI Strategy, including the AI Act, the EU is responding to the technical advances in complex data models and AI as well as to the socio-economic challenges that are emerging with them. At the same time, the AI Regulation is intended to set the course for the future of digitalisation in Europe and to become an innovation driver for a globally active "AI made in Europe".

EU AI Act: The focus of the regulatory proposal

What is the objective of the AI Act?

The proposed AI regulation aims to ensure that Europeans can trust what an AI produces and to guarantee that AI systems do no harm to human beings. The existing fundamental rights and the values of the Union are to be preserved, and effective enforcement of the applicable law is to be strengthened. Consequently, people and legal certainty are the focus of the regulatory proposal. These legal and ethical requirements are expected to have a positive impact, in particular on the promotion of investment in AI and on the development of the EU internal market.

What's behind it?

The guidelines for trustworthy AI are at the heart of the proposed EU AI law. They were developed by the High-Level Expert Group on AI (HLEG) appointed by the EU Commission. The framework is based on three fundamental principles: legality, ethical standards and robust AI systems. In order to link law and ethics, the ethical principles of the guidelines were derived from fundamental rights and translated into seven key requirements which developers and users of AI systems should fulfil.

- Human agency and oversight

- Diversity, non-discrimination and fairness

- Accountability

- Transparency

- Privacy and data governance

- Societal and environmental well-being

- Technical robustness and safety

Ideally, these requirements are already taken into account and operationalised through specific questions during the design phase of AI systems.

How is AI defined?

The question of what AI actually is is certainly not new and is far from being settled. However, the AI Act has given this debate new urgency. In particular, the insufficient definition of AI systems was a central point of criticism from business and science, because the term artificial intelligence in the 2021 draft could refer to almost all forms of software.

The new compromise proposal of the Czech EU Presidency attempts to draw the distinction from classical software systems more clearly. The current proposal provides that systems developed with the help of machine learning (ML) approaches as well as logic- and knowledge-based approaches are to be classified as AI systems.

In the full text this reads as follows:

'artificial intelligence system' (AI system) means a system that is designed to operate with elements of autonomy and that, based on machine and/or human-provided data and inputs, infers how to achieve a given set of objectives using machine learning and/or logic- and knowledge-based approaches, and produces system-generated outputs such as content (generative AI systems), predictions, recommendations or decisions, influencing the environments with which the AI system interacts.

Compared to the previous proposal, statistical approaches, Bayesian estimation, and search and optimisation methods were excluded. Including them would have meant a very wide definition of AI that would hardly have been possible to implement in practice.

What is the current status of the legislative process?

On 11 November 2022, the final draft of the AI Regulation, in its 5th version, was submitted to the EU Parliament. On 6 December 2022, the further procedure will be discussed at the Telecommunications Council. The EU Council and the EU Parliament must reach an agreement in order for the draft law to enter into force. These negotiations are expected to last until the beginning of 2023.

If you do not want to miss anything about the current legislative process, you can follow the Legislative Train Schedule, drop by our data.blog every now and then, or subscribe to our newsletter. This topic will certainly be with us for some time.

Who is affected?

As a general rule, if an AI system can harm a human being within the European Union, the requirements of the EU AI Act apply.

Specifically, as soon as an AI system is operated, distributed or used in the EU market (i.e. even if the operator, provider or developer is established outside the Union), the AI Act and its possible penalties apply. According to the current version, non-compliance with the requirements can result in penalties of up to €30,000,000 or 6% of turnover.
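To make the penalty ceiling more tangible, here is a minimal sketch in Python. It assumes that the higher of the two limits applies, which is how the Commission's 2021 draft words it for companies; the function name and parameters are purely illustrative and not taken from the legal text.

```python
def max_penalty_eur(annual_turnover_eur: float,
                    fixed_cap_eur: float = 30_000_000,
                    turnover_percent: float = 6) -> float:
    """Return the upper bound of a fine for a given annual turnover.

    Assumption: the higher of the fixed cap and the turnover-based
    cap applies. Actual fines are set case by case and can be lower.
    """
    if annual_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    turnover_cap_eur = annual_turnover_eur * turnover_percent / 100
    return max(fixed_cap_eur, turnover_cap_eur)


# Example: a company with 1 billion euros in annual turnover
print(max_penalty_eur(1_000_000_000))
```

For smaller companies the fixed amount dominates: below a turnover of 500 million euros, 6% of turnover is less than 30 million euros.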

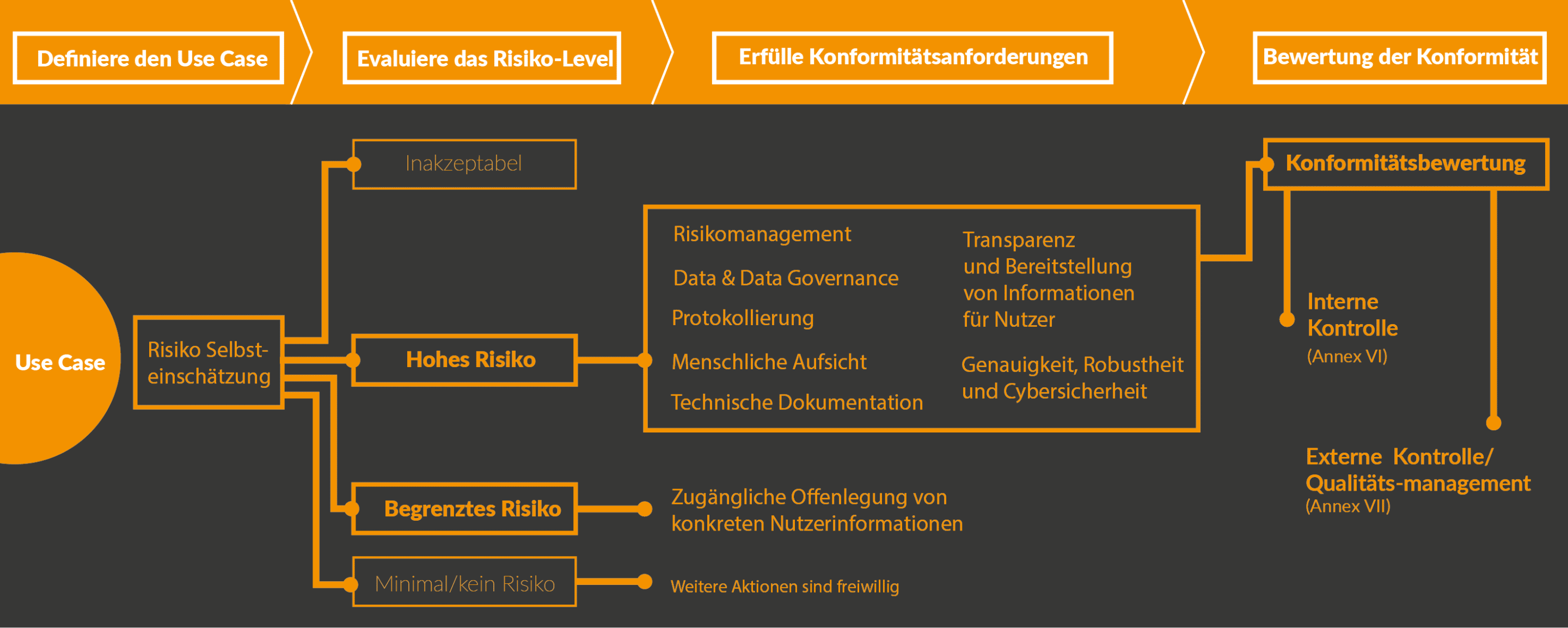

How should the AI Act be implemented?

In the draft AI Regulation, the legislator defines clear requirements and obligations for developers, operators and users of AI systems. At the heart of it all is a compliance process along the life cycle of AI use cases. This process can vary depending on the defined risk classes. Risk classes? Exactly, because the EU AI Act essentially follows a risk-based approach. This means that AI use cases must be evaluated in a self-assessment and can then be assigned to risk categories. The law provides for a continuous evaluation of the use cases according to the current state of technology. Based on this, concrete requirements arise for the providers, operators and/or users of the AI system.

Accordingly, AI applications can be divided into four different risk categories:

- AI systems with unacceptable risk

- High-risk AI systems

- AI systems with limited risk

- AI systems with minimal or no risk

AI systems with unacceptable risk

According to Article 5 of the EU AI Act, AI applications with unacceptable risk are to be banned. This category includes all systems that can contribute to people suffering psychological or physical harm, for example technologies that use manipulative methods to influence human consciousness. Similarly, the use of AI systems by public authorities to assess the trustworthiness of citizens, with the result that they are treated better or worse, falls under this risk category. The EU Commission is thus putting a stop to the implementation of a social credit system based on the Chinese model.

High-risk AI systems

The most significant area of application of the EU AI Act is that of so-called high-risk AI systems. High-risk AI systems are defined as those used in the following areas: real-time remote biometric identification, safety components of critical infrastructure (except in the cybersecurity area), private and public services (e.g. credit assessment of individuals), education, manufacturing of safety components of products (e.g. in AI-assisted surgery), law enforcement and democratic processes, asylum and migration, and human resource management.

All these applications can pose a major risk to human health and public safety. Therefore, the EU Commission insists on strict governance requirements here. According to the EU AI Act, the most important requirements include the implementation of a risk management system that continuously and iteratively evaluates the conformity of AI systems. For providers, this means that an initial audit is required even before market launch. Once a new application is on the market, it will be continuously monitored. In addition, a reporting system for incidents and malfunctions will be implemented, which users and providers should make use of.

Furthermore, the AI Act requires the following for high-risk systems: data governance procedures, ongoing technical documentation, record-keeping of system operations, transparency and information obligations towards the end user, human oversight obligations, and assurance of accuracy, robustness and cybersecurity. All requirements are described in Articles 8 to 15 of the draft AI law.

Going further, the new proposal envisages that general purpose AI, i.e. models such as GPT-3, DALL-E & Co. that can be used for multiple applications, should be considered high-risk AI systems. This would entail considerable effort for use in production and would make many use cases almost impossible.

AI systems with limited risk

According to the Artificial Intelligence Act, AI systems with limited risk are subject only to specific transparency obligations and are otherwise hardly regulated. An example of this is the use of chatbots: users should be made aware that they are communicating with a machine and not a human being.

AI systems with minimal or no risk

The use of artificial intelligence with minimal risk is permitted without restrictions. This includes, for example, spam filters or AI-enabled video games.
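The four risk tiers described above can be sketched as a simple lookup. This is a heavily simplified illustration that uses only the examples mentioned in this post; the names `RiskCategory`, `classify` and the keyword sets are hypothetical, and a real self-assessment requires a case-by-case legal review rather than a keyword match.

```python
from enum import Enum


class RiskCategory(Enum):
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high risk"
    LIMITED = "limited risk"
    MINIMAL = "minimal or no risk"


# Hypothetical keyword sets, built only from the examples in this post.
PROHIBITED_PRACTICES = {"social scoring", "manipulative techniques"}
HIGH_RISK_AREAS = {
    "biometric identification", "critical infrastructure",
    "credit assessment", "education", "law enforcement",
    "asylum and migration", "human resource management",
}
TRANSPARENCY_ONLY = {"chatbot"}

# Consequence per tier, as summarised in the sections above.
OBLIGATIONS = {
    RiskCategory.UNACCEPTABLE: "banned (Article 5)",
    RiskCategory.HIGH: "strict requirements (Articles 8 to 15)",
    RiskCategory.LIMITED: "transparency obligations",
    RiskCategory.MINIMAL: "no restrictions",
}


def classify(use_case: str) -> RiskCategory:
    """Map a use-case label to a risk tier, checking the strictest tier first."""
    key = use_case.strip().lower()
    if key in PROHIBITED_PRACTICES:
        return RiskCategory.UNACCEPTABLE
    if key in HIGH_RISK_AREAS:
        return RiskCategory.HIGH
    if key in TRANSPARENCY_ONLY:
        return RiskCategory.LIMITED
    # Assumption for this sketch: anything not listed counts as minimal risk.
    return RiskCategory.MINIMAL


for case in ["social scoring", "credit assessment", "chatbot", "spam filter"]:
    tier = classify(case)
    print(f"{case}: {tier.value} -> {OBLIGATIONS[tier]}")
```

Checking the strictest tier first mirrors the risk-based logic of the draft: a use case is only treated as minimal risk if none of the stricter categories applies.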

Criticism from the German AI Association

The EU AI Act is the first attempt at regulation. It is therefore not surprising that the draft still has some weaknesses. Numerous interest groups have already drawn attention to existing shortcomings. In a comprehensive statement, for example, the German AI Association criticised large parts of the draft AI regulation. In general, it sees the Artificial Intelligence Act as an opportunity to make a progressive statement with "AI made in Europe" and to position Europe as an internationally competitive player. Nevertheless, meeting the strict requirements would be an enormous burden, especially for SMEs and start-ups. Such a legislative proposal could even hinder the development of AI in these areas. However, the German AI Association's main point of criticism is the lack of definitional clarity. For example, the term artificial intelligence in the text of the law could refer to almost all forms of software. Likewise, the descriptions of the risk categories are in part misleadingly worded.

The German AI Association also proposes a closer look at risk-relevant factors, since it would be inappropriate to place entire industries and sectors under general suspicion. In order to facilitate compliance with the requirements for high-risk AI systems, specific regulations for SMEs and start-ups would be needed, for example. These companies usually do not have the financial means needed to ensure complete monitoring and documentation. Best practice examples would also be helpful to make the guidelines laid down in the Artificial Intelligence Act more comprehensible. In fact, the EU AI Act gives no indication of how entrepreneurs should implement the legal guidelines in practice.

Conclusion

The 5th version of the EU AI Act is currently being reviewed and discussed by MEPs. On 6 December, the Council of the European Union is to decide on the further course of action at the meeting of the Telecommunications Council. The risk-based draft regulation sets the necessary legal framework for an ethical approach to AI in a digitised Europe. Nevertheless, the Artificial Intelligence Act is understandably causing unease among entrepreneurs and advocacy groups. The main focus of the legal text is on high-risk AI systems, which are not always clearly defined. These are subject to numerous legal requirements, the fulfilment of which is likely to be a difficult task for young and smaller companies. In its current form, the Act would therefore slow down AI innovation by European SMEs and make it more difficult for larger companies to operate AI systems in production. It is therefore to be hoped that individual AI risk areas will be defined more clearly and that the Artificial Intelligence Act will not become a brake on innovation in international comparison.
