The use of textual explanations for plausibility in Autonomous Driving

"Driving" a car that steers itself or sharing the road with autonomous vehicles: what sounds like a dream come true for technology enthusiasts and visionaries seems like a nightmare to some conservative users and convinced technology sceptics. Technological progress is pushing the boundaries of everyday life and changing our reality faster than ever before. That is why the acceptance of new technologies is becoming an ever greater challenge.


Autonomous systems in safety-critical applications in our life

Customers' concerns about autonomous systems should be approached with considerable care, especially in an AI application as safety-critical as vehicle control. New technologies that directly affect our lives in areas where safety and security are crucial will only become established if users are actively supported in adopting them. AI systems therefore need to be looked at from the perspective of the user or bystander. Scientists might consider the black-box nature of AI applications a research question, but for users, the public, and policymakers it decides between acceptance and adoption on the one hand and distrust and prohibition on the other.

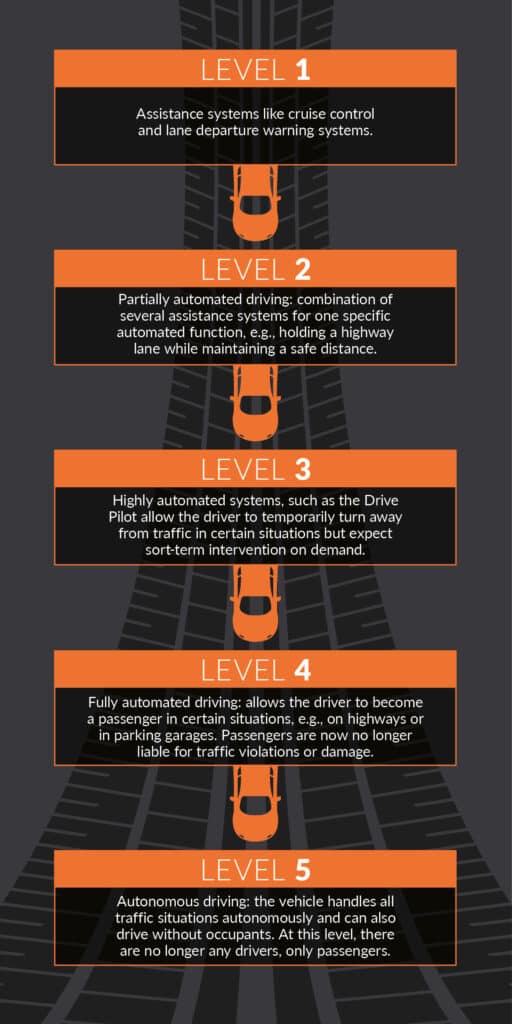

Levels of automation in vehicle control systems

Driving automation systems are divided into six levels, from 0 (no automation) to 5 (full automation), based on their degree of automation. These levels are defined in the J3016 standard of SAE International (formerly the Society of Automotive Engineers).

Handing control over to an automated system, however safe and secure it is claimed to be, is challenging. Remarkably, partially automated systems such as driver assistance or automated parking do not raise concerns with most users, whereas fully automated systems remain mistrusted.

Problem: distrust in autonomous driving systems by the users and other traffic participants

Trust in and acceptance of autonomous vehicles go hand in hand. Without broader acceptance among users, the market for self-driving cars is bound to remain very limited. Several surveys support this claim. A recent study found that about 53% of the public feels unsafe sharing the road with self-driving cars and would not be keen on using autonomous vehicles.

Mistrust of autonomous vehicles is not the only problem. Human road users also pose a risk: experiments show that driving behaviour changes when a self-driving car is marked as such in traffic, with people tending to behave more recklessly. In addition, pedestrians rely heavily on visual signals from the driver, for example when they want to cross the road. This non-verbal communication is lost with autonomous vehicles, so dedicated solutions are already being developed to enable coordination between autonomous vehicles and pedestrians. A good example is Semcon's 'Smiling Car', which literally smiles at pedestrians.

Starting with the user: human-machine trust

According to Choi and Ji (2015), trust in an autonomous vehicle is rooted in three main aspects.

- System transparency is the extent to which the individual can predict and understand the operation of the vehicle.

- Technical competence is the human’s perception of the vehicle’s performance.

- Situation management is the belief that the user can take control whenever desired.

Increasing trust: better systems and communication with the user

Based on these three aspects, several key factors have been proposed to positively influence human trust in autonomous vehicles. The most straightforward way to gain more trust is to improve the system’s performance. Mistakes damage the public image of autonomous cars considerably, despite the statistically supported fact that human drivers cause more accidents.

Another possibility is to increase system transparency. Providing information helps the user understand how the system functions. Hence, the ability to explain the decisions of an autonomous vehicle has a significant impact on users’ trust, which is crucial for the acceptance and subsequent adoption of self-driving cars. To further promote trust, explanations should be provided before the vehicle acts rather than after. They are especially needed when users’ expectations have been violated, in order to limit the loss of trust.

Approaches using textual explanations

As stated above, understanding the system and how it works can help people feel safer around autonomous vehicles. Making the system's behaviour plausible to the user through textual explanations is closely related to the explainability challenge of autonomous systems. Explainability is a challenge not just for consumers but primarily for engineering and, subsequently, for regulation and market introduction.

Option: Learning captions of a video sequence

Merely describing traffic scenes and explaining possible actions could be achieved with existing video captioning models; the main limitation is the need for extensively annotated data. Such methods might increase the acceptance of AI technology in general. However, the decisions of a separately trained vehicle controller would still remain a black box.

Post-hoc explanations of the model’s decision

Some existing models can provide justifications for their decisions. Attention or activation maps within the vehicle controller identify the objects or regions of a video frame that are relevant to the controller’s output. A suitable caption is then generated from these relevant visual cues. Such post-hoc explanations could increase users’ trust in the system and could be implemented as a live commentary on the vehicle’s actions as it drives.
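
The idea of turning the most relevant regions into a comment can be illustrated with a minimal sketch. It assumes that the controller already exposes one attention weight per labelled region. The function names, the region labels, and the fixed sentence template are hypothetical. Real systems generate the caption with a learned language model rather than a template.

```python
from typing import Dict, List, Tuple


def top_attended_regions(attention: Dict[str, float], k: int = 2) -> List[Tuple[str, float]]:
    """Return the k regions with the highest normalized attention weight."""
    total = sum(attention.values())
    if total <= 0:
        raise ValueError("attention weights must sum to a positive value")
    normalized = {region: weight / total for region, weight in attention.items()}
    # Sort regions from most to least attended and keep the first k.
    return sorted(normalized.items(), key=lambda item: item[1], reverse=True)[:k]


def explain(action: str, attention: Dict[str, float], k: int = 2) -> str:
    """Build a template-based comment from the most attended regions.

    The template is a stand-in for a learned caption generator (assumption).
    """
    regions = [name for name, _ in top_attended_regions(attention, k)]
    return f"The car {action} because it attends to: {', '.join(regions)}."


if __name__ == "__main__":
    # Hypothetical attention weights for one video frame.
    frame_attention = {"red traffic light": 0.6, "pedestrian": 0.3, "billboard": 0.1}
    print(explain("slows down", frame_attention))
```

Because the comment is derived from the controller's own attention weights, it is tied to the model's internals, which a free-standing captioning model cannot offer.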

Faithfulness vs. plausibility

One challenge with such models is determining what kind of explanation the model is in fact providing. User-friendly, rationalizing explanations of the output sometimes do not reflect the actual decision process within the model. In contrast, explanations that give the reasoning behind the model’s behavior are based solely on the input.

Evaluating explanations is a challenge as well. Automated metrics and human evaluations are unsatisfactory because they cannot guarantee that the explanation is faithful to the model's decision-making process; human evaluations instead tend to assess the plausibility of the explanation. To evaluate faithfulness rather than plausibility, the leakage-adjusted simulatability (LAS) metric was introduced. It is based on the idea that the explanation should help an observer predict the model's output without directly leaking that output.
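
A minimal sketch of how such a leakage-adjusted score could be computed is given below. It assumes that a simulator has already tried to predict the model's output for each example three times: from input plus explanation, from input alone, and from the explanation alone (the leakage check). The function name, argument names, and the handling of an empty group are assumptions of this sketch, not the reference implementation.

```python
from typing import Optional, Sequence


def las(correct_with_expl: Sequence[bool],
        correct_without_expl: Sequence[bool],
        leaked: Sequence[bool]) -> float:
    """Leakage-adjusted simulatability over a set of examples.

    correct_with_expl[i]:    simulator matched the model given input + explanation
    correct_without_expl[i]: simulator matched the model given the input only
    leaked[i]:               simulator matched the model given the explanation only
    """
    if not (len(correct_with_expl) == len(correct_without_expl) == len(leaked)):
        raise ValueError("all sequences must have the same length")

    def mean_gain(group: bool) -> Optional[float]:
        # Average accuracy gain from the explanation within one leakage group.
        idx = [i for i, k in enumerate(leaked) if bool(k) == group]
        if not idx:
            return None
        gains = (int(correct_with_expl[i]) - int(correct_without_expl[i]) for i in idx)
        return sum(gains) / len(idx)

    # Average the leaking and non-leaking groups with equal weight, so that
    # label-leaking explanations cannot dominate the score.
    # Assumption of this sketch: an empty group is simply skipped.
    group_gains = [g for g in (mean_gain(False), mean_gain(True)) if g is not None]
    if not group_gains:
        raise ValueError("no examples given")
    return sum(group_gains) / len(group_gains)


if __name__ == "__main__":
    print(las([True, True, False, True],
              [False, True, False, False],
              [True, True, False, False]))
```

A score near zero means the explanations add little beyond the input itself, while a positive score means they help the simulator even where they do not give the answer away.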

Problem: Ground truth explanations

Acquiring labeled datasets can be quite difficult: ground-truth explanations are often post-hoc rationales written by an external observer of the scene rather than by the driver who actually took the action. Using these annotations to explain the behavior of a machine learning model is an extrapolation that should be made carefully. In the context of driving, however, it is generally assumed that the models are designed to rely on the same cues as human drivers.

Using textual descriptions for supervision

Another approach is to use textual explanations to supervise the vehicle controller. The idea is to have a set of explanations for the vehicle’s actions in addition to the action commands themselves. These explanations are associated only with certain objects that are defined as action-inducing. This implies that only a finite set of explanations is required and that explanations can be viewed as an auxiliary set of semantic classes, to be predicted simultaneously with the actions. This eliminates the ambiguity of free-form textual explanations and improves action prediction performance. Explanations thus become a secondary source of supervision: by forcing the classifier to predict the action “slow down” because “the traffic light is red,” the multi-task setting exposes the classifier to the causal link between the two.
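
The multi-task setting can be sketched as a joint loss over two prediction heads. The example assumes that actions and explanations are both multi-label targets scored with binary cross-entropy and combined additively with a single weight. The function names and the `expl_weight` parameter are hypothetical.

```python
import math
from typing import Sequence


def binary_cross_entropy(probs: Sequence[float], targets: Sequence[int]) -> float:
    """Mean binary cross-entropy between predicted probabilities and 0/1 targets."""
    if len(probs) != len(targets) or not probs:
        raise ValueError("probs and targets must be non-empty and of equal length")
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for p, t in zip(probs, targets):
        total += t * math.log(max(p, eps)) + (1 - t) * math.log(max(1.0 - p, eps))
    return -total / len(probs)


def joint_loss(action_probs: Sequence[float], action_targets: Sequence[int],
               expl_probs: Sequence[float], expl_targets: Sequence[int],
               expl_weight: float = 1.0) -> float:
    """Action loss plus weighted explanation loss (additive form is an assumption)."""
    action_loss = binary_cross_entropy(action_probs, action_targets)
    expl_loss = binary_cross_entropy(expl_probs, expl_targets)
    return action_loss + expl_weight * expl_loss


if __name__ == "__main__":
    # Hypothetical classes: actions = [move forward, slow down],
    # explanations = [traffic light is red, road is clear].
    loss = joint_loss([0.1, 0.9], [0, 1], [0.8, 0.2], [1, 0], expl_weight=1.0)
    print(round(loss, 4))
```

Setting `expl_weight` to zero recovers a plain action classifier, which makes it easy to compare training with and without the explanation signal.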

Communication is key

Altogether, explainability and transparency play a crucial role in autonomous systems. On the one hand, developers and engineers profit immensely from the system’s explainability. It supports technical competence, debugging, and improvement of the model, as it offers technical information about current limitations and shortcomings. On the other hand, it addresses regulators’ social considerations as well as liability and accountability questions for self-driving cars. And most importantly for the market future of autonomous cars, the trust of end users and other traffic participants relies on the transparency of automated systems. Taking user communication seriously in “smart” autonomous applications and assistants may well be the winning strategy on the way to acceptance of the new technology.

Zablocki, É., Ben-Younes, H., Pérez, P. & Cord, M. (2021). Explainability of deep vision-based autonomous driving systems: Review and challenges. https://arxiv.org/pdf/2101.05307.pdf

Edmonds, E. (2021). Drivers should know if they are sharing the road with self-driving test vehicles. https://newsroom.aaa.com/2021/05/drivers-should-know-if-they-are-sharing-the-road-with-self-driving-test-vehicles/

Gouy, M., Wiedemann, K., Stevens, A., Brunett, G. & Reed, N. (2014). Driving next to automated vehicle platoons: How do short time headways influence non-platoon drivers' longitudinal control? Transportation Research Part F: Traffic Psychology and Behaviour, 19, 264-273. https://www.sciencedirect.com/science/article/abs/pii/S1369847814000345

Semcon. Self Driving Car that sees you. https://semcon.com/uk/smilingcar/

Choi, J. K. & Ji, Y. G. (2015). Investigating the Importance of Trust on Adopting an Autonomous Vehicle. International Journal of Human-Computer Interaction. https://doi.org/10.1080/10447318.2015.1070549

Kim, J., Rohrbach, A., Darrell, T., Canny, J. & Akata, Z. (2018). Textual Explanations for Self-Driving Vehicles. https://arxiv.org/pdf/1807.11546.pdf

Hase, P., Zhang, S., Xie, H. & Bansal, M. (2020). Leakage-Adjusted Simulatability: Can Models Generate Non-Trivial Explanations of Their Behavior in Natural Language? https://arxiv.org/pdf/2010.04119.pdf

Xu, Y., Yang, X., Gong, L., Lin, H., Wu, T., Li, Y. & Vasconcelos, N. (2020). Explainable Object-induced Action Decision for Autonomous Vehicles. https://arxiv.org/pdf/2003.09405.pdf
