An Intro to RAG

From Hallucinating AI to Grounded Output

- Author: Linus Zarse

- Category: Deep Dive

“Sounds plausible – but it’s wrong.”

This is the conclusion many users reach when they rely on answers from Large Language Models (LLMs). While models like ChatGPT, Claude, or LLaMA can produce impressively fluent text, they do so by drawing on patterns learned during training rather than by accessing current or verifiable information. As a result, they quickly reach their limits in complex or fast-moving domains such as law or technology, and with internal company knowledge. When the right data is not part of the training set, these models are prone to so-called “hallucinations” and may produce outdated or outright incorrect responses [1].

An example:

If you ask a model, “How many internal projects do we have for client XY?” or “How do I assemble the cabinet from model ABC?”, it typically won’t know the answer, because that kind of information is stored in internal systems like Confluence, PDF manuals, or MS Teams.

This is where Retrieval-Augmented Generation (RAG) comes in. RAG combines generative language models with external, retrievable knowledge. Instead of relying solely on what the model learned during training, a RAG system actively searches for relevant information in documents, databases, or knowledge platforms and incorporates that information directly into the response.

RAG serves as a bridge between pre-trained language capabilities and specifically integrated external context. This makes it especially well-suited for use cases that demand high precision and traceability. The combination of retrieval and generation is increasingly proving effective in chatbots and internal knowledge systems, particularly when it comes to producing reliable, verifiable content [2].

What is Retrieval-Augmented Generation (RAG)?

RAG is an architectural style in which Large Language Models (LLMs) are combined with an upstream retrieval module to incorporate external knowledge for each query [3].

A complete RAG system usually consists of the following technical components:

- a Large Language Model (LLM) for generative response formulation,

- an embedding model for converting texts into semantic vectors,

- a vector database (e.g. FAISS, Weaviate) for efficient similarity search,

- and retrieval logic that fetches relevant content from the database for each query.

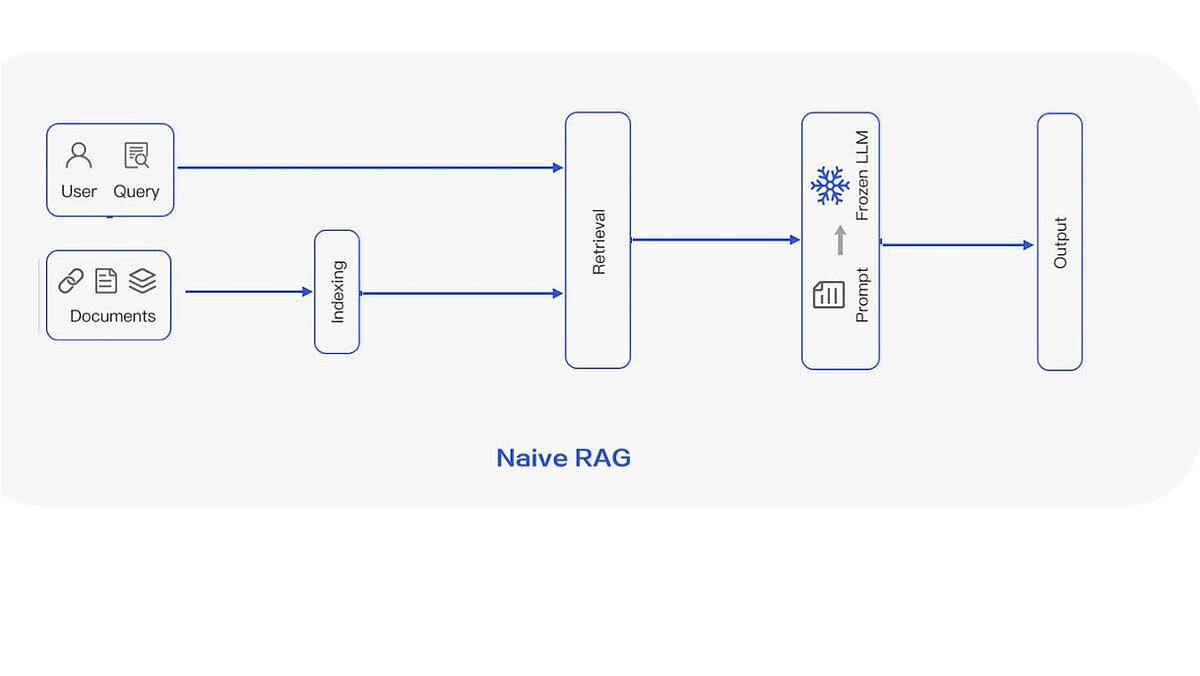

This combination is particularly widespread in the so-called Naive RAG paradigm [4], a classic “retrieve-read” approach with three main stages:

Indexing: Preprocessing and vectorization

The first step consists of extracting and cleansing a wide variety of data sources such as PDFs, HTML pages or internal Word documents. This content is then converted into a standardized text format and segmented into smaller chunks, as language models can only process a limited number of tokens.
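The segmentation step can be sketched as follows. This is a minimal illustration, not a prescribed method: the function name `chunk_text`, the word-based limit (used here as a rough proxy for tokens), and the overlap between neighboring chunks are all assumptions.

```python
def chunk_text(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    """Split text into word-based chunks that overlap by `overlap` words.

    Assumption: word counts stand in for token counts; a real system
    would usually measure length with the tokenizer of its model.
    """
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("need max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the current chunk already reaches the end of the text
    return chunks
```

The overlap is a common design choice so that a sentence cut at a chunk boundary still appears in full in one of the two neighboring chunks.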

Each of these text segments is then converted into a vector using an embedding model (e.g. Sentence Transformers or OpenAI Embeddings) and stored in a vector database (e.g. FAISS, Weaviate or Chroma). The resulting index is the knowledge base from which the system later retrieves information.
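To make the vectorization step concrete without depending on a particular library, the sketch below uses a toy embedding (hashed word counts) and a plain Python list in place of a vector database. Both `embed` and `InMemoryIndex` are hypothetical stand-ins; a real embedding model captures meaning, which this toy version does not.

```python
import hashlib
import math


def embed(text: str, dim: int = 64) -> list[float]:
    """Toy embedding: hash each word into one of `dim` buckets, then L2-normalize.

    Assumption: this only stands in for a learned embedding model.
    """
    vec = [0.0] * dim
    for token in text.lower().split():
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


class InMemoryIndex:
    """Minimal stand-in for a vector database: keeps chunk, source and vector."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def add(self, chunk: str, source: str) -> None:
        self.entries.append({"text": chunk, "source": source, "vector": embed(chunk)})
```

Storing the source next to each vector is what later makes it possible to cite where an answer came from.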

Retrieval of Relevant Content

When a user submits a query, it is also transformed into a vector. The retrieval component then compares this query vector with those stored in the database, typically using similarity measures such as cosine similarity, and selects the top-k most relevant text chunks. In other words, the system retrieves the information that is most semantically aligned with the query.

This step is critical to the quality of the final response. If irrelevant content is retrieved or key information is missed, the precision of the generated answer suffers, resulting in incomplete or even incorrect outputs.
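The comparison described above can be sketched in a few lines. The function names `cosine_similarity` and `top_k` are illustrative, and the exhaustive scan over all vectors is an assumption made for clarity; vector databases typically use approximate search structures instead.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], chunk_vecs: list[list[float]], k: int = 3) -> list[tuple[int, float]]:
    """Return (chunk index, similarity) for the k chunks most similar to the query."""
    scored = [(i, cosine_similarity(query_vec, vec)) for i, vec in enumerate(chunk_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```

The choice of `k` is a trade-off: a small value risks missing key information, while a large value fills the prompt with less relevant text.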

Generating a Response

The selected chunks are combined with the original user request to form a prompt and passed to a (usually "frozen") large language model, that is, one whose weights are not updated, which generates a natural-language response. Depending on the application, the model is either allowed to answer freely or instructed to rely strictly on the context provided.

A typical example of the prompt structure is as follows:
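As a hedged illustration, a grounded prompt could be assembled along these lines. The instruction wording and the helper name `build_prompt` are assumptions, not taken from a specific system.

```python
def build_prompt(question: str, chunks: list[str]) -> str:
    """Combine retrieved chunks and the user question into one prompt string."""
    # Number the chunks so the model (and the reader) can refer to them.
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say that you do not know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )
```

The explicit instruction to admit missing knowledge corresponds to the strictly context-bound variant; dropping it would let the model fall back on what it learned during training.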

Practical Applications of RAG

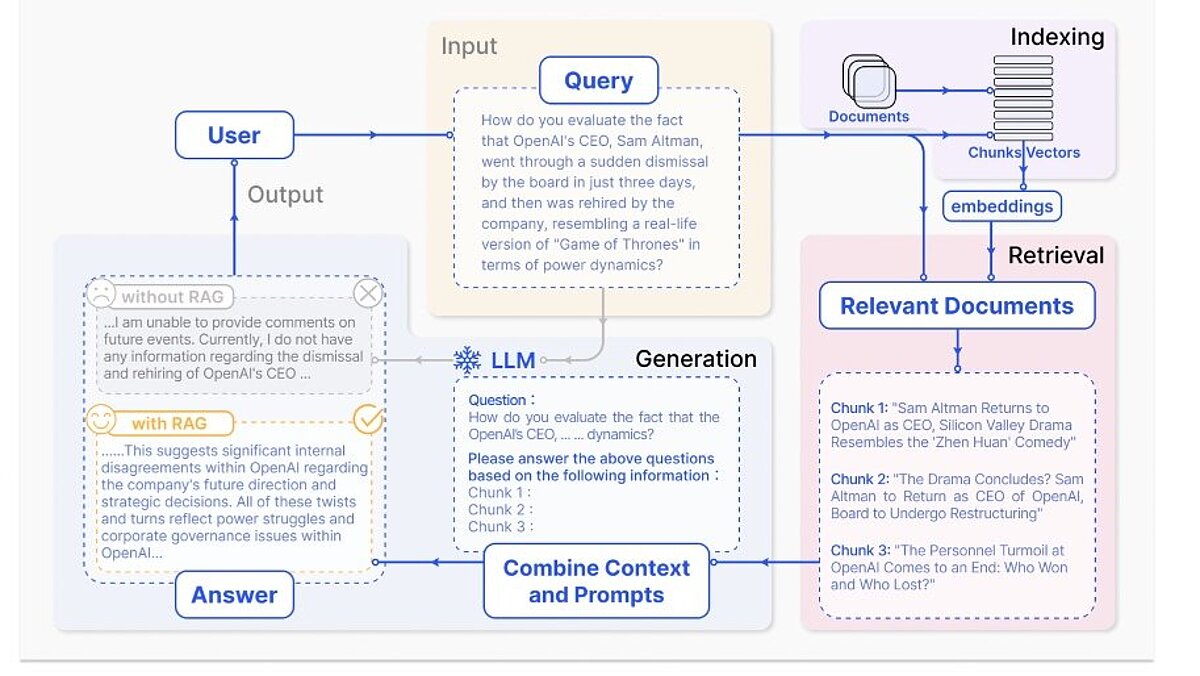

Once the basic architecture is understood, the next question is: what does a RAG system look like in practice?

The following illustration outlines a typical RAG pipeline in a question-answering scenario. It can be broken down into six functional stages that together form a continuous flow of information, from the initial user input to the final generated response [5]:

Step 1: User Request (Query Initiation)

The RAG process starts with a user input in natural language, typically in the form of a specific question. This forms the semantic basis for all subsequent processing steps.

Step 2: Indexing

To make relevant information discoverable, external knowledge sources, such as internal documents, PDFs, or websites, are broken down into smaller, semantically meaningful text segments (known as “chunks”). These chunks are then transformed into vectors using an embedding model and stored in a vector database. The result is a searchable index that enables efficient retrieval later on.

Step 3: Semantic Search (Retrieval)

Once a request is submitted, it is converted into a vector. This vector is then compared to the vectorized chunks in the index using a similarity measure, typically cosine similarity. The top-k chunks with the highest semantic similarity are selected and passed on for further processing.

Step 4: Contextual Prompt Construction

The retrieved text fragments are then combined with the original query to create an augmented prompt. This enriched prompt adds relevant contextual knowledge to the input and serves as the foundation for the model’s response, without requiring the LLM to store or internalize any new information.

Step 5: Response Generation (Controlled Natural Language Generation)

The LLM processes the augmented prompt and generates a response. Depending on the setup, it can rely solely on the provided context (context-dependent) or combine it with its internal knowledge (parametrically extended). The output is generated token by token and is meant to stay close to the supplied context.

Step 6: Output and Feedback (Answer Delivery & Feedback Loop)

The answer is returned to the user.

In more advanced architectures, a feedback loop can be integrated to evaluate responses and refine the indexing or retrieval strategy, using mechanisms such as user ratings, confidence scoring, or ranking adjustments.
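The six steps can be tied together in one short sketch. Everything here is a simplified assumption: `embed` is a toy word-count embedding, the index is a plain list, `llm` is any function that maps a prompt to text, and the feedback loop is left out.

```python
import math
import re
from collections import Counter
from typing import Callable


def embed(text: str) -> Counter:
    """Toy embedding: sparse word-count vector (stand-in for a real model)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def answer(question: str, documents: list[str], llm: Callable[[str], str], k: int = 2) -> str:
    # Step 2: indexing (here each document is treated as a single chunk)
    index = [(doc, embed(doc)) for doc in documents]
    # Step 3: semantic search over the index
    query_vec = embed(question)  # Step 1 delivered `question`
    ranked = sorted(index, key=lambda entry: cosine(query_vec, entry[1]), reverse=True)[:k]
    # Step 4: contextual prompt construction
    context = "\n".join(doc for doc, _ in ranked)
    prompt = f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    # Steps 5 and 6: generation and delivery
    return llm(prompt)
```

In practice the index would be built once ahead of time rather than on every request; it is rebuilt inside `answer` here only to keep the sketch in one place.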

Advantages and Limitations of RAG

The key advantage of a RAG system lies in its flexibility: the underlying language model is not confined to static, pre-trained knowledge but can be continuously enriched with external information. New content, for example from internal documents or databases, can be integrated directly, without the need to retrain the model itself. This allows responses to be easily adapted to evolving knowledge or specific organizational needs, making RAG particularly well-suited for use cases like customer support, technical documentation, or knowledge management.

Another key advantage of RAG is the traceability and transparency of the generated content. This is particularly important in highly regulated industries such as finance and insurance, where decisions must be documented, justified and disclosed in the context of audits.

RAG systems make it possible to explicitly link the answer to the underlying sources, for example by specifying the document chunks used or by linking to the original source. This creates a verifiable basis for decision-making that users can follow and that can also be audited and versioned by the system.
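One possible way to carry source references through to the answer is sketched below. The data classes `Chunk` and `GroundedAnswer`, the helper `attach_citations`, and the `source#chunk-id` reference format are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    source: str    # e.g. a file name or URL (assumed metadata)
    chunk_id: int  # position of the chunk within its source


@dataclass(frozen=True)
class GroundedAnswer:
    text: str
    citations: tuple[str, ...]


def attach_citations(answer_text: str, used_chunks: list[Chunk]) -> GroundedAnswer:
    """Pair a generated answer with references to the chunks it was based on."""
    refs: list[str] = []
    for chunk in used_chunks:
        ref = f"{chunk.source}#chunk-{chunk.chunk_id}"
        if ref not in refs:  # keep first-seen order, drop duplicates
            refs.append(ref)
    return GroundedAnswer(text=answer_text, citations=tuple(refs))
```

Because the result is an immutable record, it can be logged or versioned alongside the answer for later audits.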

This can also help organizations meet regulatory requirements such as MaRisk, VAIT, or the EU AI Act. At the same time, RAG enhances trust in the system and provides a solid foundation for compliance, internal controls, and audit-proof documentation.

Despite these advantages, RAG comes with its own challenges. The most significant one concerns retrieval quality: if irrelevant or incomplete chunks are retrieved, the response quality suffers considerably. Prompt design is also crucial, as the context must be integrated in a way that lets the model interpret the query correctly [6].

Further risks arise from redundancies or contradictions in the retrieved context, which can affect the consistency of the output. RAG also requires additional resources, as the creation of embeddings and the semantic search in the vector database cause a noticeable memory and computing load, especially in real-time scenarios [7].

Advanced RAG Approaches

To overcome these limitations, several advanced RAG approaches have been developed in recent years. These include multi-hop RAG, in which complex questions are answered through multi-stage, interdependent retrieval.
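The idea of interdependent retrieval rounds can be sketched as a loop in which each round's findings shape the next query. The function `multi_hop_retrieve` and its two callbacks are assumptions for illustration; in a real system `next_query` would typically be produced by an LLM.

```python
from typing import Callable


def multi_hop_retrieve(
    question: str,
    retrieve: Callable[[str], list[str]],
    next_query: Callable[[str, list[str]], str],
    max_hops: int = 2,
) -> list[str]:
    """Collect evidence over several retrieval rounds.

    `retrieve` maps a query to matching chunks; `next_query` builds the
    follow-up query from the question and the evidence gathered so far.
    """
    evidence: list[str] = []
    query = question
    for _ in range(max_hops):
        new_hits = [hit for hit in retrieve(query) if hit not in evidence]
        if not new_hits:
            break  # nothing new was found, so further hops would not help
        evidence.extend(new_hits)
        query = next_query(question, evidence)
    return evidence
```

A question such as "Where does the lead of project Alpha work?" needs two hops: the first finds who leads the project, the second finds where that person works.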

Reranking methods score the retrieved chunks for relevance, coherence, and redundancy before they are incorporated into the prompt [8].
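The text does not name a specific reranking method. As one well-known example that balances relevance against redundancy, the sketch below uses Maximal Marginal Relevance (MMR); the function name `mmr_rerank` and the precomputed similarity inputs are assumptions, and coherence is not modeled here.

```python
def mmr_rerank(
    query_sim: list[float],
    pairwise_sim: list[list[float]],
    k: int,
    lam: float = 0.7,
) -> list[int]:
    """Select k chunk indices by Maximal Marginal Relevance.

    query_sim[i] is the similarity of chunk i to the query, and
    pairwise_sim[i][j] the similarity between chunks i and j.
    lam = 1.0 ranks by relevance only; lower values penalize redundancy more.
    """
    selected: list[int] = []
    candidates = list(range(len(query_sim)))
    while candidates and len(selected) < k:

        def score(i: int) -> float:
            # Redundancy is the similarity to the closest already-selected chunk.
            redundancy = max((pairwise_sim[i][j] for j in selected), default=0.0)
            return lam * query_sim[i] - (1.0 - lam) * redundancy

        best = max(candidates, key=score)
        selected.append(best)
        candidates.remove(best)
    return selected
```

With two near-duplicate chunks that both match the query well, a lower `lam` makes the second slot go to a different, less redundant chunk.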

ReAct and Toolformer extend the classic RAG pipeline by integrating reasoning capabilities and external tools such as web search or calculation functions. Conversational RAG additionally takes the dialog history into account, which keeps answers consistent over longer interactions.

Particularly noteworthy is Graph RAG, an approach that goes beyond linear document structures and is explicitly based on knowledge graphs. Information is modeled as nodes and edges in a semantic network, which enables structured, more context-aware exploration and linking of content, especially when entities or concepts are related in complex ways.
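A very small sketch of graph-based retrieval is shown below, assuming the knowledge graph is stored as an adjacency dictionary of `(relation, target)` edges. The `Graph` alias, the function `neighborhood_facts`, and the example entities are hypothetical; production systems would use a graph database and more selective traversal.

```python
from collections import deque

# node -> list of (relation, target node) edges
Graph = dict[str, list[tuple[str, str]]]


def neighborhood_facts(graph: Graph, start: str, max_hops: int = 2) -> list[str]:
    """Collect facts reachable from `start` within `max_hops` edges (breadth-first)."""
    facts: list[str] = []
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for relation, target in graph.get(node, []):
            facts.append(f"{node} {relation} {target}")
            if target not in seen:
                seen.add(target)
                queue.append((target, depth + 1))
    return facts


graph: Graph = {
    "Client XY": [("commissioned", "Project A")],
    "Project A": [("is led by", "Dana")],
    "Dana": [("works in", "Berlin")],
}
context_facts = neighborhood_facts(graph, "Client XY", max_hops=2)
```

The collected facts can then be placed in the prompt in the same way as retrieved text chunks, with the hop limit controlling how much related context is pulled in.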

In addition, RAG systems can be further improved and adapted to specific applications using the following components:

- Query rewriters that automatically sharpen vague or ambiguous user queries before retrieval.

- Prompt optimizers that structure or compress retrieved chunks in order to use them more efficiently in the prompt.

- Answer validators that check after generation whether the answer is supported by the context (e.g. with a second LLM).

- Dialog memory modules that save the conversation history and enable a consistent conversation over several rounds.

- Agentic RAG, in which specialized agents (e.g. retrievers, reasoners, critics) work collaboratively on the optimal answer.
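To illustrate one of these components, the sketch below shows a deliberately crude answer validator. Instead of a second LLM, it assumes a simple word-overlap check; the names `support_ratio` and `is_supported` and the threshold of 0.8 are illustrative, and lexical overlap cannot detect paraphrases or subtle contradictions.

```python
import re


def _tokens(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def support_ratio(answer: str, context: str) -> float:
    """Share of distinct answer words that also occur in the retrieved context."""
    answer_tokens = _tokens(answer)
    if not answer_tokens:
        return 0.0
    return len(answer_tokens & _tokens(context)) / len(answer_tokens)


def is_supported(answer: str, context: str, threshold: float = 0.8) -> bool:
    """Flag answers whose wording is mostly absent from the context."""
    return support_ratio(answer, context) >= threshold
```

An answer that fails such a check could be regenerated, returned with a warning, or passed to a stronger validator.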

Many of these extensions are either still under research or only available in specialized applications. However, they pursue the same goal: to overcome the limitations of Naive RAG and enable more precise and context-aware answers [9].

Conclusion

Retrieval-Augmented Generation combines the expressive power of Large Language Models with the precision and timeliness of external knowledge sources. In domain-specific applications, RAG provides traceable, up-to-date answers without having to retrain the model.

For demanding use cases, however, a simple retrieve-and-generate method is often not sufficient. Advanced approaches such as multi-hop RAG, Graph RAG or multi-agent architectures improve the quality of retrieval, increase consistency and deepen the contextual reference.

RAG is thus becoming a central component of modern AI systems, especially where the focus is on reliability, explainability and company-specific knowledge.
