Understanding the Architecture of Agentic AI

The architecture behind autonomous systems

- Author: Jonas Kromolan

- Category: Deep Dive

The rapid development of artificial intelligence (AI) - particularly in the areas of generative AI (GenAI) and Large Language Models (LLMs) - is paving the way for ever more powerful Agentic Architectures. One particularly exciting advance is the integration of LLMs into Agent-based AI systems that can handle complex tasks more efficiently - for example in automated customer advice, project management or strategic decision-making.

Picture a company that uses a virtual project manager - an AI Agent that not only plans tasks but also works with specialised agents for technology, marketing and finance. While the project manager maintains an overview, the technical Agent identifies potential challenges, the marketing Agent develops and plans an appropriate product strategy, and the finance Agent keeps an eye on the budget. The entire system operates autonomously and seamlessly - thanks to LLM-based communication between the agents.

In this blog post, we take a deep dive into the architecture of LLM-based agents, exploring what sets them apart, how they are structured, and the core principles behind their functionality. Additionally, we highlight the leading frameworks and tools that facilitate the development of these advanced systems.

What are AI Agents, and what makes them special?

An AI Agent is an autonomous system designed to operate within a specific environment to achieve defined goals. It perceives, processes, and responds to its surroundings, executing targeted actions to complete its tasks. With the integration of LLMs, these agents go beyond simply processing data: they can understand and generate natural language, which significantly expands their range of applications. They combine efficient problem-solving with a nuanced understanding of complex inputs, functioning much like a microservice, but far more intelligent and adaptable.

Applications of LLM-based AI Agents

LLM-based Agentic systems are used in various areas. The following are particularly popular:

Software Development

LLM-based agent systems can be used in software development to solve complex problems and improve collaboration between different software modules.

Simulations

With the ability to understand and generate natural language, LLM-based agents can be used in simulations to emulate human behaviour or model complex scenarios.

Autonomous systems

In autonomous systems, such as customer support or autonomous vehicles, LLM-based agents can be used to support complex decision-making processes and improve interaction with humans.

What are the Benefits of AI Agents?

The use of Agentic AI in various business areas offers numerous advantages – from increased productivity and cost reduction to the automation of complex processes. Three key benefits are:

Increased productivity

Thanks to their high degree of autonomy, AI Agents can carry out the tasks assigned to them without human intervention. They make decisions efficiently based on the data available to them, using tools and models to help them find the best available solution.

Reduced costs

Automation reduces costs, and the use of AI Agents is no exception. In an Agentic project in the automotive industry, we implemented a system that automates fault analysis. The system identifies root causes in around 30 minutes and improves collaboration between quality management, production and workshops. Its agents can initiate corrective measures independently. This system can save up to 13 million euros per year in warranty costs.

Automation of complex processes

Generative AI Agents can do more than solve repetitive tasks or make isolated decisions. Assembled into an Agentic system, they can work together to carry out highly complex tasks and processes autonomously. In customer service, for example, one Agent handles communication with customers via chat or voice. Another analyses and classifies the customer's concerns and forwards them to the appropriate department. Standard tasks such as processing returns are then carried out fully automatically by specialised Agents. Finally, a further Agent checks the automated responses for plausibility and accuracy to minimise the error rate. For second- or third-level support, or for other issues that cannot be automated, people can be brought into the system to resolve, check or validate cases (human in the loop).
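The customer service flow described above can be sketched as a small pipeline. This is only an illustration under stated assumptions: the `Ticket` type, the stage functions and the keyword rule are hypothetical stand-ins, and a real system would replace each stage with an LLM-backed Agent.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    """Hypothetical record passed from one agent stage to the next."""
    text: str
    category: str = "unknown"
    reply: str = ""
    needs_human: bool = False


def classify(ticket: Ticket) -> Ticket:
    # Stand-in for the analysing agent; a keyword rule replaces the LLM.
    ticket.category = "return" if "return" in ticket.text.lower() else "other"
    return ticket


def handle(ticket: Ticket) -> Ticket:
    # Specialised agent: only the standard case is processed automatically.
    if ticket.category == "return":
        ticket.reply = "Your return label has been sent."
    else:
        ticket.needs_human = True
    return ticket


def validate(ticket: Ticket) -> Ticket:
    # Checking agent: an automated ticket without a reply is escalated.
    if not ticket.needs_human and not ticket.reply:
        ticket.needs_human = True
    return ticket


def process(text: str) -> Ticket:
    """Run a customer message through all stages in order."""
    ticket = Ticket(text)
    for stage in (classify, handle, validate):
        ticket = stage(ticket)
    return ticket
```

Calling `process("I want to return my shoes")` yields an automated reply, while a message the sketch cannot classify is flagged with `needs_human` so that a person can take over.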

Key Concepts in Agentic Architectures

The architecture of LLM-based Agentic systems comprises several central concepts:

Perception: Agents collect data from their environment, which can be both structured and unstructured, including natural language. Relevant data sources are always dependent on the use case. Here are a few examples:

- Text-based sources: Documents, emails, chat messages, ticket systems or FAQs.

- Visual sources: Images, videos, surveillance footage or screenshots.

- Sensor-based sources: IoT devices, temperature, motion or environmental sensors.

- System logs and metrics: Logs from software applications, server data or performance monitoring tools.

- Databases: Structured data from CRM, ERP or other business systems.

- Web and API sources: Websites, social media platforms, news feeds or industry-specific APIs.

Cognition/Reasoning: The integration of LLMs enables agents to perform complex language processing, understand context and make informed decisions.

Action: Based on the decision made through cognitive processing, agents perform actions that can range from physical movements to generating text or code.
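To make the three concepts more tangible, here is a minimal sketch of a perceive, reason and act cycle. All names (`Agent`, `toy_reasoner`, the action labels) are assumptions made for illustration, and a simple keyword rule stands in for the LLM so that the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Hypothetical minimal agent: perceive -> reason -> act."""
    reason: Callable[[str], str]                  # stand-in for an LLM call
    actions: dict[str, Callable[[str], str]]      # available actions by name
    log: list[str] = field(default_factory=list)

    def perceive(self, raw: str) -> str:
        # Perception: normalise an incoming observation (here: plain text).
        return raw.strip().lower()

    def step(self, raw: str) -> str:
        observation = self.perceive(raw)
        decision = self.reason(observation)             # Cognition/Reasoning
        result = self.actions[decision](observation)    # Action
        self.log.append(f"{decision}: {result}")
        return result


def toy_reasoner(observation: str) -> str:
    # Assumption: a keyword rule replaces the LLM in this sketch.
    return "refund" if "return" in observation else "answer"


agent = Agent(
    reason=toy_reasoner,
    actions={
        "refund": lambda obs: "refund process started",
        "answer": lambda obs: "reply drafted",
    },
)
```

In a real system, `reason` would call a language model and the actions would be tools such as API calls or code generation; the structure of the loop stays the same.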

Different Types of Agentic Architecture

The following section explores technical approaches to implementing different Agentic architectures. To make their differences, strengths and weaknesses easier to compare, each approach is illustrated with the same example: generating a specialist journal article. The example agent system relies on the following agents:

Headline Agent: An agent who specialises in creating short, concise and marketable headlines.

Scientific Agent: This agent generates the content of the article. In addition to the world knowledge of the language model, this agent has access to numerous scientific sources and databases.

Visual Agent: As a good article also benefits from one or two visualisations, this agent creates precisely these.

Together, these agents can generate a complete article, including a headline, content and relevant graphics, using various approaches. Below, we explore the key factors that determine their success.

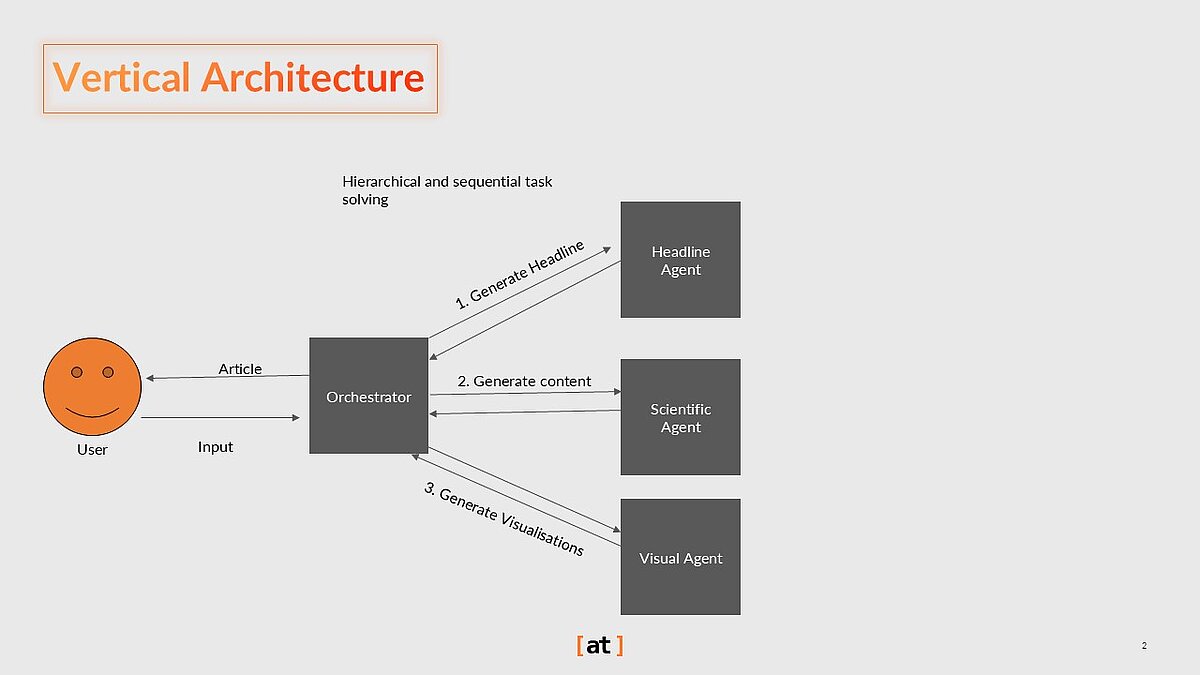

Vertical Architecture

This approach is based on a strict, hierarchical and centralised concept. At the top is a central, orchestrating Agent that controls all tasks and decisions. All other Agents and systems in this construct have clearly defined tasks and are subordinate to the orchestrator. All communication within the system takes place via the orchestrator; the other Agents act in isolation from each other.

For our example, this means that the user input goes directly to the orchestrating Agent. It derives tasks for the individual agents from the input and distributes them. Once they are complete, the orchestrator evaluates the results, combines them into the final output and returns it to the user.

This approach is highly efficient for implementing clearly defined, ideally sequential tasks. The flow of information is transparent, and the orchestrator retains the final decision-making authority. This type of architecture is particularly well suited to executing structured processes based on a predefined set of rules. On the one hand, this provides the advantage of a single point of control; on the other hand, it also introduces a single point of failure. With larger and more complex requests, the orchestrator can quickly become a bottleneck, slowing down the entire process. In the worst case, issues within this central agent could result in incorrect outcomes.
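A vertical setup for the article example could look roughly like the sketch below. The three worker functions and the `Orchestrator` class are hypothetical stubs, not the API of any framework; each worker stands in for an LLM-backed Agent.

```python
from typing import Callable


# Hypothetical worker agents; each stub stands in for an LLM-backed agent.
def headline_agent(task: str) -> str:
    return f"Headline for: {task}"


def scientific_agent(task: str) -> str:
    return f"Body text about: {task}"


def visual_agent(task: str) -> str:
    return f"Figure illustrating: {task}"


class Orchestrator:
    """Central agent: delegates the request and merges the results."""

    def __init__(self, workers: dict[str, Callable[[str], str]]) -> None:
        self.workers = workers

    def run(self, user_input: str) -> dict[str, str]:
        results: dict[str, str] = {}
        # Workers never talk to each other; every call passes through here.
        for name, worker in self.workers.items():
            results[name] = worker(user_input)
        return results


orchestrator = Orchestrator(
    {"headline": headline_agent, "content": scientific_agent, "visual": visual_agent}
)
article = orchestrator.run("agentic AI")
```

The sequential loop in `run` also shows where the bottleneck comes from: every task and every result passes through the same component.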

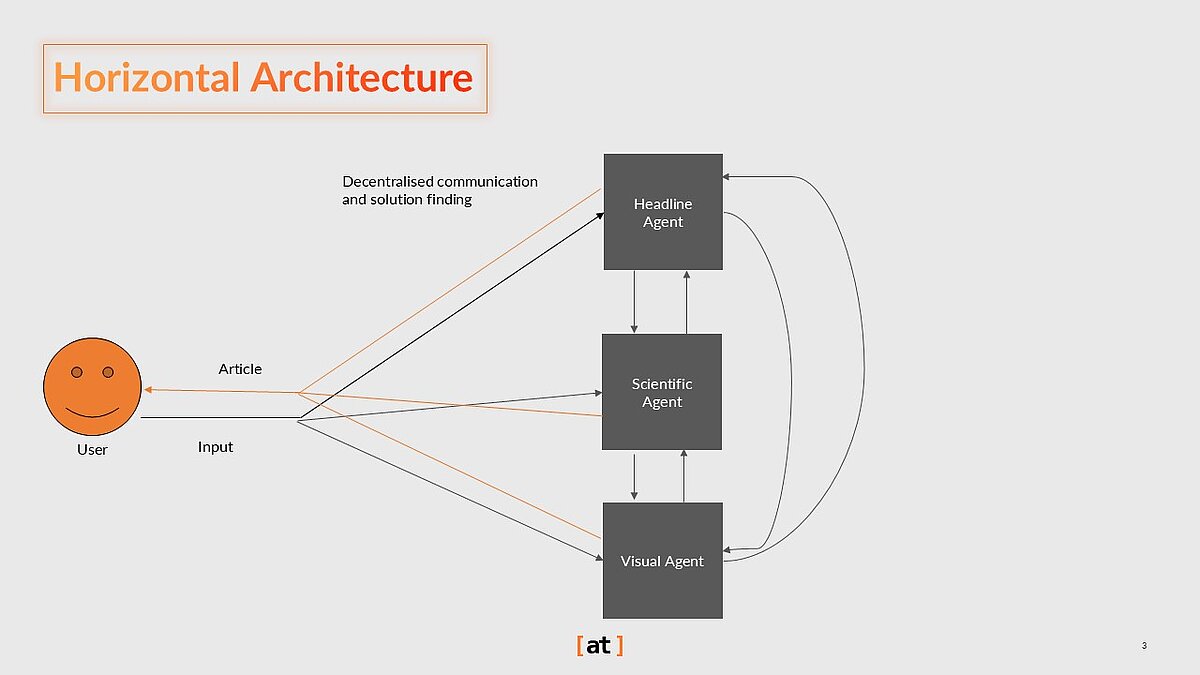

Horizontal Architecture

The horizontal architecture approach is the counterpart to the vertical architecture. In this model, there is no central orchestrator. Instead, all Agents collaborate as equals within a decentralized system. The goal is to efficiently solve tasks through cooperative and collaborative interaction. Internal communication flows between Agents, ensuring they share the same information or context. Decisions are made collectively, with participating Agents signaling the completion of their respective tasks.

In our example, the AI writing workshop benefits in particular from the Agents' high level of autonomy. All Agents start interpreting the task in parallel and work on it autonomously. The example system is structured so that the Agents align their goals and results, ensuring that the headline, content and graphics fit together.

The strength of this architecture lies primarily in its flexibility and dynamics. With autonomous Agents, a wide range of tasks can be handled with minimal predefined instructions. For example, complex, interdisciplinary problems can be efficiently analyzed and processed, with each Agent acting as a subject-matter expert and the system operating as a "mixture of experts." This approach is equally well suited for creative processes like brainstorming, where diverse perspectives come together to generate new ideas.

However, the freedom of this architecture also brings significant drawbacks. Coordinating many Agents in one system can quickly become a challenge. Without a coordinating instance, repetitive decision-making and communication loops can make the system inefficient. In general, horizontal AI architectures are significantly slower than their vertical counterparts, because democratic decisions require time and room for discussion. While that is essential in the analogue world, AI Agents do not necessarily have to discuss every problem in endless loops.
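One way to picture a horizontal system is as a set of peers working on a shared context without any orchestrator. The sketch below is a simplified assumption: `SharedContext`, the two peer functions and the round limit are hypothetical, and the peers run one after another rather than truly in parallel.

```python
from dataclasses import dataclass, field


@dataclass
class SharedContext:
    """Blackboard that every peer agent can read and write."""
    topic: str
    drafts: dict[str, str] = field(default_factory=dict)
    done: set[str] = field(default_factory=set)


def headline_peer(ctx: SharedContext) -> None:
    # Aligns the headline with the content draft once one exists.
    if "content" in ctx.drafts:
        ctx.drafts["headline"] = f"Headline matching '{ctx.drafts['content']}'"
        ctx.done.add("headline")
    else:
        ctx.drafts["headline"] = f"Working title on {ctx.topic}"


def content_peer(ctx: SharedContext) -> None:
    ctx.drafts["content"] = f"Article on {ctx.topic}"
    ctx.done.add("content")


def run_peers(ctx: SharedContext, peers: dict, max_rounds: int = 5) -> int:
    """Run rounds until every peer has signalled completion."""
    for round_no in range(1, max_rounds + 1):
        for name, peer in peers.items():
            if name not in ctx.done:
                peer(ctx)
        if ctx.done == set(peers):
            return round_no
    raise RuntimeError("peers did not converge")  # guard against endless loops


ctx = SharedContext(topic="agentic AI")
rounds = run_peers(ctx, {"headline": headline_peer, "content": content_peer})
```

Even in this tiny case the headline peer needs a second round to align with the content draft, which hints at why coordination overhead grows with the number of Agents. The `max_rounds` limit is one simple way to keep discussion loops bounded.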

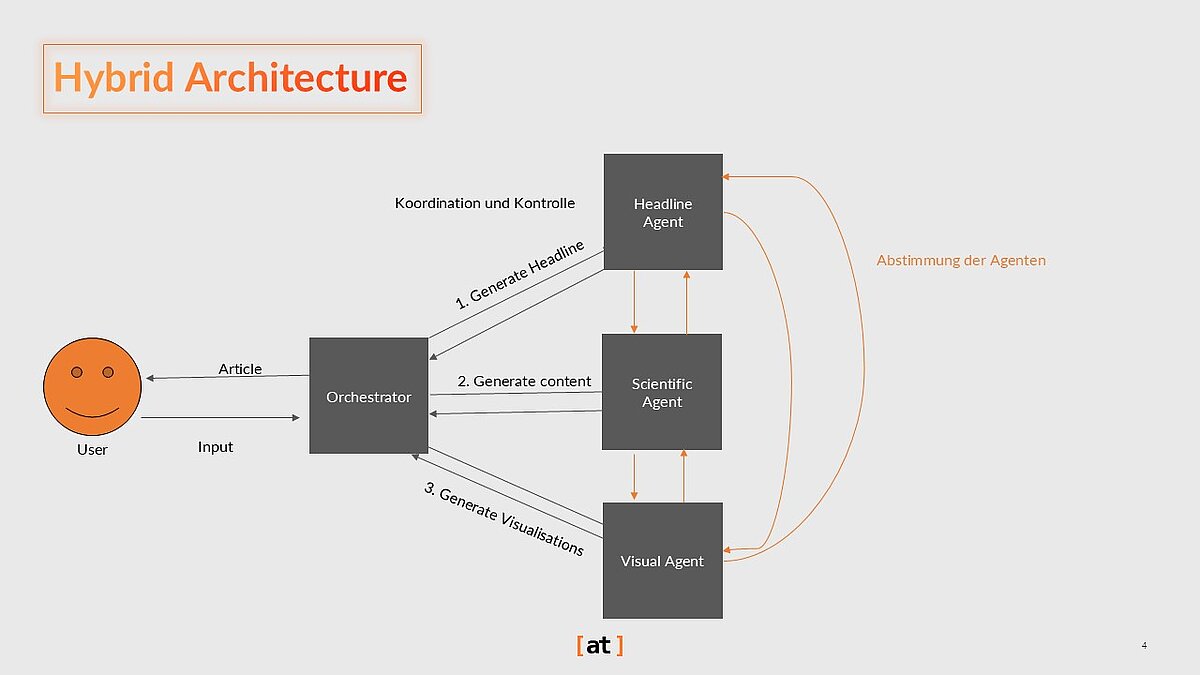

Hybrid Architecture

As with most design questions, there is also a hybrid approach to Agentic systems that combines the strengths of the vertical and horizontal approaches.

This approach can best be imagined as the virtualization of a team. There is a team lead (orchestrator) who bears responsibility and makes important decisions. At the same time, the team members (Agents) have the freedom to solve their tasks individually and work together as required.

In this example, the orchestrator interprets the task as it would in a vertical architecture and assigns it to the individual Agents. However, during execution, the Agents work in parallel and coordinate among themselves to achieve the goal. Here, the orchestrator does not serve as the sole controlling and decision-making authority but can intervene when necessary. For instance, if two Agents disagree and cannot reach a solution, the orchestrator steps in to resolve the conflict and makes a decision.

The strengths of this architecture are clear. By combining the dynamics and flexibility of the horizontal approach with a focused, efficiency-driven control instance, the system can adaptively solve highly complex problems that require both structure and creativity. However, implementing such a system is challenging due to its high complexity. Additionally, operating this type of platform demands significantly more resources than either approach on its own.
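The conflict-resolution role of the orchestrator in a hybrid system can be sketched as follows. The function names and the tie-breaking rule are assumptions chosen for illustration; in practice the orchestrator would more likely be an LLM-backed Agent weighing the proposals.

```python
from typing import Callable, Optional


def peers_agree(proposals: dict[str, str]) -> Optional[str]:
    """Return the shared proposal if all peers agree, otherwise None."""
    distinct = set(proposals.values())
    return distinct.pop() if len(distinct) == 1 else None


def hybrid_decide(
    proposals: dict[str, str],
    tie_breaker: Callable[[dict[str, str]], str],
) -> tuple[str, bool]:
    """Let peers settle it; escalate to the orchestrator only on conflict."""
    agreed = peers_agree(proposals)
    if agreed is not None:
        return agreed, False
    return tie_breaker(proposals), True


def orchestrator_rule(proposals: dict[str, str]) -> str:
    # Assumption: the team lead sides with the scientific agent on content.
    return proposals["scientific"]


decision, escalated = hybrid_decide(
    {"headline": "AI agents at work", "scientific": "Architectures of agentic AI"},
    orchestrator_rule,
)
```

The second return value records whether the orchestrator had to step in, which makes it easy to monitor how often the team resolves issues on its own.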

Tools and Frameworks

The development of LLM-based Agentic systems is supported by various tools and frameworks:

AutoGen

An open-source framework that allows developers to create LLM applications via multiple Agents that communicate with each other to perform tasks. AutoGen Agents are customisable, can hold conversations and operate in different modes that include combinations of LLMs, human input and tools.

LangGraph

A graph-based framework that represents workflows as graphs and enables the visualisation and management of complex interactions between Agents. It provides comprehensive storage capabilities for short- and long-term memory and is particularly useful for dynamic workflows that need fine-grained control.

CrewAI

Relies on a role-based organisation with a high level of abstraction. Each ‘crew’ consists of specialised Agents with specific roles and goals. It offers a comprehensive storage system and enables the design of complex workflows through event-driven interactions between different crews.

Conclusion and Outlook

LLM-based Agentic systems represent a significant advance in AI research and application. By combining the language processing capabilities of LLMs with the collaborative properties of Agentic systems, complex tasks can be solved more efficiently and effectively.

Future developments could lead to even more intelligent and adaptable Agents that can operate effectively in even more complex and dynamic environments.

Our customers and numerous end users are already benefiting from the great potential of Agentic AI:

- We developed an agent-based app for Energie AG Oberösterreich that promotes sustainable habits in users' daily lives.

- Discover more real-world applications of Agentic AI in our “The State of AI 2025” report.
