Agentic RAG

Making RAG Systems More Autonomous with Agentic AI

- Published:

- Author: Dr. Tore Erdmann, Dr. Philipp Schwartenbeck

- Category: Deep Dive


For a company, being competitive nowadays means innovating. Artificial Intelligence (AI) is enabling this at unprecedented speed. Of particular importance is the emergence of Agentic AI, where AI Agents solve tasks and complete workflows with a high degree of automation. For an introduction to Agentic AI, check out related blog articles, such as Putting your Data to Work – with Agentic AI or Understanding the Architecture of Agentic AI.

For example, companies are starting to make productive use of AI Agents to chat with their tabular data. In this blog post, we will introduce a related approach, namely Agentic Retrieval Augmented Generation (RAG), that is helping companies make the most of all their data in textual form.

Upon the release of ChatGPT, we were all amazed at what you could do with a huge neural network trained on vast amounts of text. The technical basis of this advance was scale, and the fundamental idea is simple: more data means better models. During training, the neural network’s parameters are adjusted until the model can predict the next word in a sentence. Once such a model is trained, you can generate text by repeatedly predicting the next token (on average, a token corresponds to roughly ¾ of an English word; such sub-word units have proven a better basis than whole words). This generative process underlies all Large Language Models (LLMs).

Soon after they broke onto the scene, however, users started feeling the constraints of LLMs – most notably, their knowledge cut-off. This refers to the date up until which the data used to train the model is current. As described above, a model’s parameters are only adjusted during the training phase, not during inference. Since the model's knowledge is encoded in these parameters, it cannot learn or incorporate new information after training. This limitation becomes increasingly problematic over time, especially as more recent or time-sensitive information becomes necessary for accurate and relevant responses.

As the world changes and new information emerges daily, connecting the models to up-to-date data sources becomes not just beneficial but essential for maintaining their utility and trustworthiness. Note that the same principle applies in situations where we want to augment the model with knowledge that is not publicly available, such as confidential company-specific information.

Another notable limitation was the tendency of LLMs to hallucinate. Given their generative nature, the main objective of LLMs is to generate plausible text, not necessarily factual or correct text. Especially in the early days, it was obvious that chatbots like ChatGPT could easily be tricked into talking nonsense. Thus, to use these models productively, it was essential to “help” the model respond in a factually grounded way by providing relevant information, i.e. more context, through the prompt.

So, what can we do to mitigate these limitations? Generally, there are two ways to change what the model “knows”. Firstly, you can “fine-tune” it, that is, change the parameters of the “pre-trained” LLM through additional training on a smaller dataset that is specific to the application. Re-training from scratch is out of the question: due to the huge number of parameters and the ensuing memory requirements, training requires specialized computing clusters that few can afford.

Secondly, you can simply provide relevant information to the LLM by augmenting the prompt, that is, by adding textual data to the user’s query before sending it to the LLM. This generally requires less effort than fine-tuning and can be automated, which is the idea underlying Retrieval Augmented Generation (RAG).

What is Retrieval Augmented Generation (RAG)?

Consider a company offering its employees a personalized assistant that should respond in ways that depend on each user’s role. Re-training or fine-tuning an LLM for every user would be an enormous waste of resources when you could simply prepend the user’s role to every question sent to the model. Such an augmented prompt combines a short description of the role with the actual question in a single text.
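As a minimal sketch, assuming a hypothetical prompt template and an example role that do not come from the original article, such a role-augmented prompt could be assembled like this:

```python
def build_role_prompt(role: str, question: str) -> str:
    """Prepend the user's role to the question (hypothetical template)."""
    return (
        f"You are assisting an employee whose role is: {role}.\n"
        "Tailor the level of detail and the examples to this role.\n\n"
        f"Question: {question}"
    )


# Example role and question are illustrative only.
print(build_role_prompt("sales manager", "How should I prepare for the quarterly review?"))
```

The model itself stays untouched; only the text it receives changes from user to user.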

This simple approach – augmenting the prompt to the LLM with information that may be useful to the following query – is what underlies retrieval-augmented generation (RAG). RAG systems pair LLMs with external knowledge retrieval mechanisms. When a query is received, the system first retrieves relevant information from up-to-date sources, then uses this information to augment the prompt to the LLM that is generating a response.

The same approach can be used when an LLM response should be enriched with company-specific knowledge rather than general knowledge from the model’s training. If I ask the model about a company-specific process, its answer will be much better if I augment the LLM’s generation process with company-specific information (such as internal documentation or publications) rather than simply letting it answer generically. Providing additional facts that guide the answer generation in this way also helps reduce unwanted hallucinations.

Figure 1 illustrates the functioning of a basic RAG system, consisting of the following steps:

- Query Processing: The user's question is analyzed and converted into an appropriate search query.

- Information Retrieval: The system searches through connected knowledge bases and document stores to find relevant information. Note that these knowledge bases, often in the form of vector databases, must be built beforehand.

- Context Formation: Retrieved information is selected, filtered, and formatted to provide relevant context. The information is combined with the user query into a "prompt".

- Response Generation: The LLM generates a response based on the prompt, consisting of the user query and retrieved information.
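The four steps can be sketched end to end in a few lines of Python. This is a toy under stated assumptions: term overlap stands in for vector search, the prompt template is hypothetical, and `llm` is any function mapping a prompt string to a response (here an echo stub rather than a real model).

```python
import re
from typing import Callable, List, Set


def process_query(question: str) -> Set[str]:
    """Step 1, query processing: turn the question into a set of lowercase search terms."""
    return set(re.findall(r"[a-z0-9]+", question.lower()))


def retrieve(terms: Set[str], documents: List[str], k: int = 2) -> List[str]:
    """Step 2, information retrieval: rank documents by term overlap (stand-in for vector search)."""
    scored = [(len(terms & process_query(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def form_context(question: str, passages: List[str]) -> str:
    """Step 3, context formation: combine the retrieved passages and the query into a prompt."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return f"Answer using only the context below.\n{context}\n\nQuestion: {question}"


def rag_answer(question: str, documents: List[str], llm: Callable[[str], str]) -> str:
    """Step 4, response generation: send the augmented prompt to the LLM."""
    passages = retrieve(process_query(question), documents)
    return llm(form_context(question, passages))


docs = ["The refund policy allows returns within 30 days.", "The office opens at 9 am."]
# Echo stub instead of a real LLM, so the printed text is the augmented prompt itself.
print(rag_answer("What is the refund policy?", docs, llm=lambda prompt: prompt))
```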

![Figure 1: Vanilla RAG. Figure credit (original version): Dr Maximilian Pensel, [at].](/fileadmin/_processed_/a/e/csm_fig1-vanilla-rag_626cc95946.png)

In a basic Retrieval Augmented Generation (RAG) setting, a user query (question) is augmented by retrieved document parts. These parts are found by similarity search in an embedding space, i.e. a mathematical representation of text in the form of high-dimensional vectors. The document parts that most resemble the user query are identified, ranked according to their similarity, and passed on as additional context together with the user query. The query and retrieved information are then sent together to a Large Language Model (LLM), which generates an answer to the user query based on the retrieved information.
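To make the similarity search concrete, here is a sketch that ranks document parts by cosine similarity. The `embed` function is a deliberately crude assumption (word counts instead of a trained embedding model), so only the ranking logic carries over to a real system.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real system would call a trained embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_chunks(query: str, chunks: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k chunks most similar to the query, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


chunks = [
    "Our refund policy for electronics purchases",
    "Office opening hours and holidays",
    "Refund requests need a receipt",
]
for score, chunk in rank_chunks("What is the refund policy?", chunks):
    print(f"{score:.2f}  {chunk}")
```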

Classically, the knowledge base might be a set of text files or PDFs that have been turned into text files. This collection can be continuously updated by removing documents or adding new ones to the index. This approach has proven remarkably effective, allowing LLMs to access current and/or company-internal information, provide citations, and ground responses in specific documents or databases. RAG systems have found applications in customer service, where they can access company-specific knowledge bases; in healthcare, where they can reference the latest medical literature; and in legal contexts, where they can incorporate current case law and regulations.

Given its simplicity and effectiveness, it is no surprise that the early days of LLM applications have been dominated by RAG use cases, which continue to be highly relevant to this day.

Limitations of RAG Systems

Despite their proven effectiveness, RAG systems come with their own limitations. While they augment the prompt with useful information, they do not modify the user query itself, so even small changes to the input query can lead to very different results.

- Embedding-based retrieval isn't always accurate when it comes to meaning. It can sometimes return irrelevant documents just because they contain similar words, even if the overall context is different.

- Since they follow a fixed workflow, RAG systems are inadequate for complex questions that may need multiple steps of retrieval.

- They do not double-check their results and thus cannot control the quality, accuracy and relevance of their responses.

These limitations have highlighted the need for smarter, more autonomous and more flexible RAG systems, and have led to combining the RAG approach with the recent advent of Agentic AI.

Enter Agentic Retrieval Augmented Generation

If 2024 was the year of RAG applications, then 2025 is the year of Agentic AI. Agentic AI brings LLM applications to a new level: LLM-powered Agents can reason about tasks and work in concert. They can have specific roles (like a database retriever, an input reformulator, a web searcher or a text generation checker) and use tools, such as document processing, coding, or web search. This allows us to create incredibly powerful workflows that promise a much higher degree of automation and flexibility than classical LLM chatbots. A detailed discussion of Agentic AI and its potential goes beyond the scope of this blog, but you can check this Introduction to Multi-Agent Systems and this blog post about AI Agents for a more detailed discussion of Agentic AI and its use in business problems.

While RAG systems were a significant advancement from Vanilla LLMs, they are still highly constrained. They consist of a fixed sequence of steps and are usually optimized for a single type of question or knowledge base.

Agentic RAG augments RAG with different Agents and turns the straightforward retrieval process into an active, goal-oriented system capable of executing a variety of actions to fulfill user requests. Here are some ways that Agentic RAG uses Agents:

- To flexibly decide how to retrieve information (what information to retrieve and where to retrieve it from),

- to reformulate user queries in order to standardize and/or classify them, and to route them to specialized workflows,

- to call specialized tools, or

- to perform in-process evaluations which ensure quality.

Instead of being the primary operation, retrieval of information from specific sources is just one of many possible actions that Agentic systems can perform. Actions can be orchestrated to solve complex problems, and multiple Agents can iterate on a problem to produce better responses. Together, this generalization (from RAG to Agentic RAG) considerably increases the controllability, flexibility and usefulness of the system. We discuss various examples and variants of Agentic RAG in the next section.

Variants of Agentic RAG

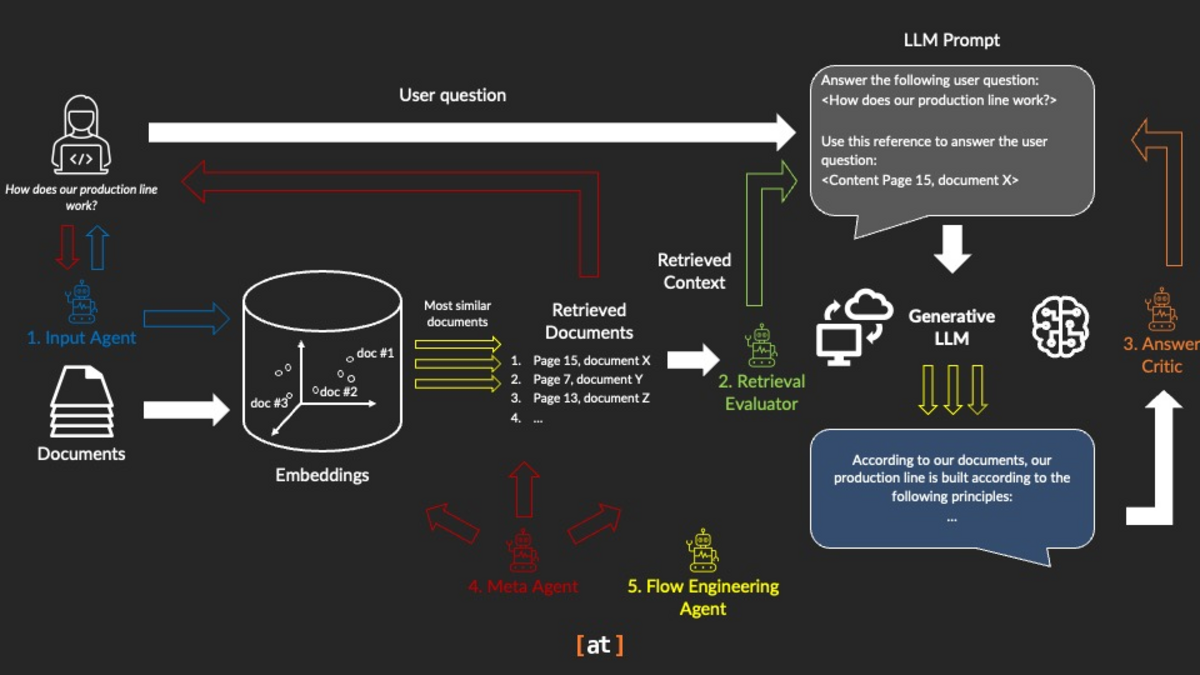

The core idea of Agentic RAG is to ground Large Language Models in specific knowledge sources via document retrieval and to let the models perform actions through tool-calling. In this section, we explore several architectural patterns, or “variants” of Agentic RAG, that illustrate how this grounding can become Agentic, enabling dynamic decision-making, self-evaluation, and human-in-the-loop architectures. Figure 2 provides an overview of how Agentic AI can augment and enrich RAG systems.

In Agentic RAG, the basic RAG workflow can be augmented by AI Agents in various ways. An Input Agent can decide whether a user query can be answered from documents in a database, answered directly without retrieval, or should be escalated; it can also choose which database (or alternative source) to use and whether to reformulate the query. A retrieval evaluator Agent can re-rank retrieved documents, filter out low-quality documents, or further improve the retrieval by adjusting its parameters. An answer critic can evaluate the quality and relevance of the generated answer and adjust the prompt if required. A Meta-Agent can feed retrieval results back to the human user, add memory to the user input, and orchestrate the other Agents. Finally, a flow engineering Agent can parallelize processes and implement ensemble voting over several generated LLM responses.

Input Agent: Managing Retrieval Decisions

An Agentic RAG system typically begins with an Input Agent, a router that determines if and how to retrieve additional context. For example:

- Direct Answer, Retrieval, or Human Operator: upon receiving a customer support query, the Input Agent decides whether to answer directly from the model’s own knowledge, fetch relevant documents, or hand over to a human operator.

- Source Selection: it chooses the appropriate database or collection - say, product manuals, policy documents, or domain-specific wikis - or even whether to query an internal store or perform a live web search.

- Query Reformulation: before retrieval, the query can be refined. Ambiguous or underspecified requests like “What’s the refund policy?” become “What is the 30-day return and refund policy for electronics purchases?”
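A rule-based sketch of such an Input Agent might look like the following. In practice the routing decision would more likely come from an LLM classifier; the keyword sets, collection names, and rewrite table here are hypothetical placeholders chosen to mirror the examples in the list.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical routing rules; an LLM classifier could replace them.
ESCALATION_TERMS = ("complaint", "lawyer", "speak to a human")
SOURCES = {
    "product_manuals": {"install", "setup", "manual", "error"},
    "policy_documents": {"refund", "return", "warranty", "policy"},
}
REWRITES = {
    "what's the refund policy?":
        "What is the 30-day return and refund policy for electronics purchases?",
}


@dataclass
class Route:
    action: str            # "human", "retrieve", or "answer_directly"
    source: Optional[str]  # collection to search when action == "retrieve"
    query: str             # the (possibly reformulated) query


def route(query: str) -> Route:
    text = query.lower().strip()
    if any(term in text for term in ESCALATION_TERMS):
        return Route("human", None, query)           # hand over to a human operator
    words = set(re.findall(r"[a-z0-9']+", text))
    for source, keywords in SOURCES.items():
        if words & keywords:                         # pick the first matching collection
            return Route("retrieve", source, REWRITES.get(text, query))
    return Route("answer_directly", None, query)     # no retrieval needed


print(route("What's the refund policy?"))
```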

By decomposing a complex goal into subtasks (“find applicable policy,” “locate customer’s purchase date,” “compute refund eligibility”), the Input Agent retrieves context stepwise and chains sub-outputs into a coherent overall plan. It can also expose its own chain-of-thought or intermediate reasoning steps, recognizing dead-ends and automatically exploring alternative retrieval strategies.
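The stepwise chaining of sub-outputs can be sketched as a plan of small functions that each read and extend a shared state. The order ID, dates, category, and 30-day window are hypothetical, and the two dictionaries stand in for retrieval from an order system and a policy store. The purchase lookup runs first here only because, in this toy data model, the policy depends on the product category; a real Input Agent would typically generate such a plan with an LLM rather than hard-code it.

```python
from datetime import date
from typing import Any, Callable, Dict, List

State = Dict[str, Any]

# Hypothetical stand-ins for two retrieval sources.
ORDER_STORE = {"A-1001": ("electronics", date(2025, 5, 2))}
POLICY_STORE = {"electronics": 30}  # return window in days


def locate_purchase(state: State) -> State:
    category, purchased = ORDER_STORE[state["order_id"]]
    return {**state, "category": category, "purchased": purchased}


def find_applicable_policy(state: State) -> State:
    return {**state, "window_days": POLICY_STORE[state["category"]]}


def compute_refund_eligibility(state: State) -> State:
    days_since_purchase = (state["today"] - state["purchased"]).days
    return {**state, "eligible": days_since_purchase <= state["window_days"]}


PLAN: List[Callable[[State], State]] = [
    locate_purchase,  # first, because the policy depends on the product category
    find_applicable_policy,
    compute_refund_eligibility,
]


def run_plan(initial: State, plan: List[Callable[[State], State]] = PLAN) -> State:
    state = dict(initial)
    for step in plan:
        state = step(state)  # each sub-output becomes input for the next subtask
    return state


print(run_plan({"order_id": "A-1001", "today": date(2025, 5, 20)})["eligible"])  # True
```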

Retrieval Evaluator: Ensuring Quality and Relevance

After fetching candidate documents, an Agentic RAG system often implements a Retrieval Evaluator:

- Relevance Testing and Re-ranking: each retrieved document is scored according to a metric, such as relevance (computed in code or judged by another LLM), and the documents are reordered to improve upon the imperfect embedding-based retrieval.

- Dynamic Retrieval through Tool Outputs: suppose a tool fetches today’s exchange rate. The Retrieval Evaluator can re-retrieve related log entries or documentation - “How have rates fluctuated this week?” - to interpret the fresh data and decide subsequent steps.

- Adaptive Query Rewriting: if the initial search yielded low-confidence hits (“I didn’t find enough on vendor compliance”), the evaluator can automatically rewrite the retrieval query (“search for ‘vendor certification audit procedures’ instead”) and issue a follow-up search.
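A minimal sketch of re-ranking, filtering, and the rewrite trigger follows. The `relevance` scorer (share of query terms found in a document) and the 0.5 cut-off are assumptions for illustration; a production evaluator would more likely use a cross-encoder or an LLM judge.

```python
import re
from typing import List, Set, Tuple


def terms(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def relevance(query: str, document: str) -> float:
    """Hypothetical scorer: share of query terms that occur in the document."""
    query_terms = terms(query)
    if not query_terms:
        return 0.0
    return len(query_terms & terms(document)) / len(query_terms)


def evaluate_retrieval(
    query: str, candidates: List[str], min_score: float = 0.5
) -> Tuple[List[str], bool]:
    """Re-rank candidates, drop weak ones, and signal whether the query should be rewritten."""
    ranked = sorted(candidates, key=lambda doc: relevance(query, doc), reverse=True)
    kept = [doc for doc in ranked if relevance(query, doc) >= min_score]
    return kept, not kept  # nothing good enough left: ask for a query rewrite


docs = ["Holiday schedule 2025", "Vendor audit checklist", "Compliance audit for new vendors"]
print(evaluate_retrieval("vendor compliance audit", docs))
```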

In such implementations, the system can enter a closed loop: retrieved chunks feed back into new retrievals until a confidence threshold is met.
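The closed loop can be expressed as a bounded retry: search, compare the best score with a threshold, and otherwise rewrite the query from what was retrieved. `search` and `rewrite` are hypothetical callables (in a real system, a vector store query and an LLM rewriting step), and `max_rounds` is an added safeguard so the loop always terminates.

```python
from typing import Callable, Dict, List, Tuple

Hit = Tuple[float, str]  # (confidence score, document text)


def retrieve_until_confident(
    query: str,
    search: Callable[[str], List[Hit]],
    rewrite: Callable[[str, List[str]], str],
    threshold: float = 0.7,
    max_rounds: int = 3,
) -> Tuple[str, List[Hit], int]:
    """Search at least once; rewrite and search again until confident or out of rounds."""
    round_no = 1
    hits = search(query)
    while max((s for s, _ in hits), default=0.0) < threshold and round_no < max_rounds:
        # Feed the retrieved chunks back into a new, rewritten query.
        query = rewrite(query, [doc for _, doc in hits])
        hits = search(query)
        round_no += 1
    return query, hits, round_no


# Hypothetical index and rewriter mirroring the vendor-compliance example above.
TOY_INDEX: Dict[str, List[Hit]] = {
    "vendor compliance": [(0.40, "General vendor onboarding guide")],
    "vendor certification audit procedures": [(0.90, "Vendor certification audit checklist")],
}


def toy_search(query: str) -> List[Hit]:
    return TOY_INDEX.get(query, [])


def toy_rewrite(query: str, retrieved: List[str]) -> str:
    # A real rewriter (e.g. an LLM) would use the query and the retrieved chunks.
    return "vendor certification audit procedures"


print(retrieve_until_confident("vendor compliance", toy_search, toy_rewrite))
```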

Answer Generation Critic: Iterative Refinement

Before presenting an answer, Agentic RAG can apply a Critic Agent that invokes the LLM a second time to check for hallucinations, logical inconsistencies, or style compliance, and re-runs generation if necessary. For mission-critical tasks (financial summaries, medical advice), multiple such safeguards can be combined to help ensure that the final output meets stringent quality standards. Importantly, this ‘LLM as a judge’ approach allows testing whether the generated content meets the intent of the user request and, if not, re-running the retrieval and/or answer generation.
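The generate-critique-regenerate cycle can be sketched as follows. The critic is a toy groundedness check (every number in the answer must appear in the retrieved context) and the generator is a stub; both are assumptions standing in for LLM calls.

```python
import re
from typing import Callable, List, Tuple

CONTEXT = "Refunds are accepted within 30 days of purchase."  # hypothetical retrieved text


def grounded_numbers_critic(answer: str) -> List[str]:
    """Toy critic: flag numbers in the answer that do not occur in the context."""
    allowed = set(re.findall(r"\d+", CONTEXT))
    return [f"unsupported number {n}" for n in re.findall(r"\d+", answer) if n not in allowed]


def generate_with_critic(
    prompt: str,
    generate: Callable[[str], str],
    critique: Callable[[str], List[str]],
    max_attempts: int = 3,
) -> Tuple[str, int, bool]:
    """Return (answer, attempts used, whether the critic accepted the answer)."""
    feedback = ""
    answer = ""
    for attempt in range(1, max_attempts + 1):
        answer = generate(prompt + feedback)
        problems = critique(answer)
        if not problems:
            return answer, attempt, True
        # Feed the critic's findings back into the next generation attempt.
        feedback = "\n\nRevise your answer. Issues found: " + "; ".join(problems)
    return answer, max_attempts, False


def stub_generate(prompt: str) -> str:
    # Stand-in for an LLM: hallucinates on the first try, corrects itself after feedback.
    return "Within 30 days." if "Issues found" in prompt else "Within 60 days."


print(generate_with_critic("How long is the refund window?", stub_generate, grounded_numbers_critic))
```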

Meta-Controller: The Agent Manager

A Meta-Controller or “Agent Manager” can be employed to enforce high-level policies:

- Action Orchestration: Decide when to trigger retrieval, call external tools (e.g., statistical calculators, graph analyzers), or query the user for additional input.

- Resource and Safety Monitoring: Track API usage and rate limits, and ensure compliance with organizational policies, interrupting or rolling back actions that could lead to data leaks or unethical outputs.

- User-in-the-Loop Prompts: When ambiguity remains, the Meta-Controller can pause for clarification by the human user - “Do you want me to include PDF attachments in the summary?” - thereby balancing autonomy and human oversight.

- Long-Term Memory: the Agent retains user-specific facts (preferences, project milestones, evolving world states). By recalling past interactions, it avoids repeated computations (“You asked about EBITDA last month; should I fetch the latest quarterly report?”) and personalizes its responses.
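A stripped-down Meta-Controller covering two of these duties, a call budget with a tool deny-list and a per-user memory, might look like this. The class, its method names, and the example tool names are hypothetical; a real deployment would persist memory and track cost rather than a plain call count.

```python
from typing import Dict, Optional, Set


class MetaController:
    """Hypothetical controller: enforces a call budget and a tool deny-list, and keeps memory."""

    def __init__(self, max_calls: int, blocked_tools: Set[str]) -> None:
        self.max_calls = max_calls
        self.blocked_tools = blocked_tools
        self.calls = 0
        self.memory: Dict[str, Dict[str, str]] = {}

    def authorize(self, tool: str) -> bool:
        # Deny calls that violate policy or exceed the budget; only approved calls are counted.
        if tool in self.blocked_tools or self.calls >= self.max_calls:
            return False
        self.calls += 1
        return True

    def remember(self, user: str, key: str, value: str) -> None:
        self.memory.setdefault(user, {})[key] = value

    def recall(self, user: str, key: str) -> Optional[str]:
        return self.memory.get(user, {}).get(key)


controller = MetaController(max_calls=2, blocked_tools={"external_email"})
print(controller.authorize("retrieve"), controller.authorize("external_email"))  # True False
controller.remember("alice", "last_topic", "EBITDA")
print(controller.recall("alice", "last_topic"))  # EBITDA
```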

All decisions, from retrieval requests to tool invocations, can be logged in an explainability trail or Agentic trace, providing users with a transparent record of each intermediate step.
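One simple way to keep such a trail is to wrap every agent action so that its inputs and outputs are appended to a structured log. The `Trace` class and `traced` helper are hypothetical, and JSON Lines is just one convenient storage format.

```python
import json
import time
from typing import Any, Callable, Dict, List


class Trace:
    """Append-only record of agent decisions (hypothetical helper)."""

    def __init__(self) -> None:
        self.events: List[Dict[str, Any]] = []

    def record(self, agent: str, action: str, **details: Any) -> None:
        self.events.append({
            "step": len(self.events) + 1,
            "timestamp": time.time(),
            "agent": agent,
            "action": action,
            "details": details,
        })

    def to_jsonl(self) -> str:
        return "\n".join(json.dumps(event) for event in self.events)


def traced(trace: Trace, agent: str, action: str, fn: Callable[..., Any]) -> Callable[..., Any]:
    """Wrap an agent function so that every call is logged with its inputs and output."""
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        result = fn(*args, **kwargs)
        trace.record(agent, action, inputs=repr((args, kwargs)), output=repr(result))
        return result
    return wrapper


trace = Trace()
search = traced(trace, "retriever", "search", lambda query: ["refund_policy.pdf"])
search("refund window")
print(trace.to_jsonl())
```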

Flow Engineering Agent

Finally, Agentic RAG systems can also employ parallelization and ensemble methods to improve performance:

- Task Parallelism: independent subtasks, like extracting Table A, Table B, and Table C from a technical PDF, are processed in parallel, and the results are aggregated into a unified summary.

- Ensemble Voting: the same retrieval-generation pipeline runs multiple times with different prompts, or with the LLM’s temperature set high enough to introduce stochasticity into the output, and a majority-vote mechanism selects the most consistent answer.
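Both ideas can be sketched with Python’s standard `concurrent.futures` module: independent subtasks are submitted to a thread pool, and an ensemble runs one pipeline over several prompt variants before a majority vote. The pipeline, prompts, and answers are hypothetical stubs; threads suit this case because LLM and retrieval calls are mostly I/O-bound.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def run_parallel(tasks: Dict[str, Callable[[], str]]) -> Dict[str, str]:
    """Task parallelism: run independent subtasks concurrently, keyed by name."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(task) for name, task in tasks.items()}
        return {name: future.result() for name, future in futures.items()}


def majority_vote(answers: List[str]) -> str:
    """Return the most frequent answer after light normalization (ties: first seen wins)."""
    normalized = [answer.strip().lower() for answer in answers]
    winner, _count = Counter(normalized).most_common(1)[0]
    return winner


def ensemble_answer(pipeline: Callable[[str], str], prompt_variants: List[str]) -> str:
    """Ensemble voting: run the same pipeline on several prompt variants in parallel."""
    with ThreadPoolExecutor() as pool:
        answers = list(pool.map(pipeline, prompt_variants))
    return majority_vote(answers)


tables = run_parallel({
    "Table A": lambda: "rows of table A",
    "Table B": lambda: "rows of table B",
    "Table C": lambda: "rows of table C",
})


def stub_pipeline(prompt: str) -> str:
    # Stand-in for retrieval + generation: one prompt variant leads to a wrong answer.
    return "60 days" if "return" in prompt.lower() else "30 days"


print(ensemble_answer(stub_pipeline, ["Refund window?", "How long can I get a refund?", "Return period?"]))
```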

Redundancy mitigates single-run failures, reduces hallucinations, and often yields more reliable, consensus-driven outputs. This often comes at the expense of higher response latency, unless the runs can be parallelized efficiently.

By combining these variants - modular input Agents, evaluators, critics, meta-controllers, memory, tool integration, and parallel ensembles - Agentic RAG systems extend conventional RAG. They become dynamic, flexible, self-correcting, and more transparent AI assistants, capable of solving complex, domain-specific tasks while keeping humans squarely in the loop.

Limitations and Alternative Approaches

While Agentic RAG unlocks powerful, context-aware language capabilities, it is not without trade-offs. Here, we outline key limitations and point to alternative methods that partly circumvent these limitations but are far from perfect themselves.

Limitations

Resource and Latency Overhead

Retrieval-augmented generation already incurs a modest delay - on the order of 200 ms per query - due to embedding lookups and external datastore calls. Introducing multiple agents compounds this significantly: each submodule (retriever, planner, executor, critic) adds processing time, and chaining agents sequentially can multiply end-to-end latency. For latency-sensitive applications (e.g., live chat or edge deployments), these cumulative delays can be problematic.
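A back-of-the-envelope model makes the compounding explicit: stages that run one after another add up, while agents inside a parallel stage cost only as much as the slowest of them. Apart from the roughly 200 ms retrieval figure mentioned above, the per-agent latencies are hypothetical.

```python
from typing import List


def end_to_end_latency(stages: List[List[float]]) -> float:
    """Sequential stages add up; agents within one stage run in parallel (max)."""
    return sum(max(stage) for stage in stages)


# Hypothetical latencies in ms: three retrievals, then planner, executor, critic.
all_sequential = [[200], [220], [180], [400], [600], [400]]
retrievals_parallel = [[200, 220, 180], [400], [600], [400]]

print(end_to_end_latency(all_sequential))       # 2000
print(end_to_end_latency(retrievals_parallel))  # 1620
```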

System Complexity and Maintainability

An Agentic RAG pipeline is inherently more intricate than a vanilla RAG system. Beyond retrieval and generation, you must orchestrate agent selection, tool invocation, and meta-controller policies. This proliferation of moving parts makes debugging performance bottlenecks or tracing erroneous outputs more challenging. Moreover, keeping versions consistent, e.g. across LLM checkpoints, retrieval indices, and custom tools, introduces ongoing maintenance overhead.

Compute and Infrastructure Costs

Although LLM costs will likely keep decreasing as AI becomes commoditized, every agent call and retrieval query consumes compute cycles, driving up cloud expenses. Even lightweight reasoning or evaluation agents incrementally add to your monthly bill. Likewise, hosting and serving large vector stores or specialized databases demands substantial memory and I/O resources, further inflating infrastructure costs.

Alternatives

When the full power of Agentic RAG is unnecessary or too costly, consider these lighter-weight strategies:

Cache-Augmented Generation (CAG)

By preloading a sufficiently small knowledge base into the model’s context (and caching the model’s precomputed state for it), or by maintaining a dynamic cache of recent prompts, responses, or their embeddings, CAG can answer queries, including repeated or semantically similar ones, without invoking a full retrieval+generation cycle. Preloading leverages the growing context windows of modern LLMs, and caching can slash average response times for high-volume, repetitive workloads.
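The response-caching side of this idea can be sketched as a small semantic cache: before running the expensive pipeline, look for a stored query that is similar enough and reuse its answer. The bag-of-words `embed` function and the 0.8 similarity threshold are assumptions for illustration; a real system would use a proper embedding model and a tuned threshold, and this sketch does not cover preloading documents into the context.

```python
import math
import re
from collections import Counter
from typing import Callable, List, Optional, Tuple


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: bag-of-words counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self.entries: List[Tuple[Counter, str]] = []

    def lookup(self, query: str) -> Optional[str]:
        """Return the cached answer of the most similar stored query, if similar enough."""
        q = embed(query)
        best_score, best_answer = 0.0, None
        for vector, answer in self.entries:
            score = cosine(q, vector)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None

    def answer(self, query: str, pipeline: Callable[[str], str]) -> str:
        cached = self.lookup(query)
        if cached is not None:
            return cached  # cache hit: no retrieval or generation needed
        result = pipeline(query)
        self.entries.append((embed(query), result))
        return result


calls: List[str] = []


def expensive_pipeline(query: str) -> str:
    calls.append(query)  # stand-in for a full retrieval + generation cycle
    return "Refunds are accepted within 30 days."


cache = SemanticCache()
cache.answer("What is the refund window?", expensive_pipeline)
cache.answer("what is the refund window", expensive_pipeline)  # served from the cache
print(len(calls))  # 1
```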

Domain-Tuned Models and Prompt Tuning

In narrow, stable domains, carefully crafted prompts, prompt tuning, or lightweight fine-tuning on a representative corpus can reduce the need for retrieval; fine-tuning in particular embeds core knowledge directly into the model’s weights. Training a smaller, domain-specialized LLM reduces dependence on external retrieval and offers faster throughput and simpler infrastructure.

Hybrid and Multi-Stage Retrieval

Instead of separate dense and sparse retrieval Agents, hybrid pipelines fuse keyword and vector search in one step, achieving high recall and precision with lower complexity. Alternatively, a coarse-to-fine two-stage retrieval, such as first filtering with a fast, low-cost index, then applying dense embeddings to a narrowed candidate set, balances latency and accuracy.
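One common way to fuse keyword and vector results in a single step is reciprocal rank fusion (RRF), named here as one possible technique rather than the method the article has in mind. Each document earns 1 / (k + rank) from every ranking it appears in; k = 60 is a frequently used default. The document IDs are hypothetical.

```python
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Fuse several ranked lists of document IDs into one ranking, best first."""
    scores: Dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


keyword_hits = ["doc_17", "doc_04", "doc_22"]  # e.g. from a keyword (BM25) index
vector_hits = ["doc_04", "doc_22", "doc_31"]   # e.g. from an embedding index
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))  # ['doc_04', 'doc_22', 'doc_17', 'doc_31']
```

Documents found by both retrievers rise to the top, even if neither ranked them first.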

Knowledge Distillation & Embedding Compression

Vector store footprints can be reduced via clustering, quantization, or distillation techniques, speeding up nearest-neighbor lookups and lowering memory requirements.
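As an illustration of the quantization option, the sketch below applies simple symmetric int8 scalar quantization to one embedding vector, storing one byte per dimension instead of four for float32. The vector values are made up, and production vector stores often use more elaborate schemes such as product quantization.

```python
from array import array
from typing import List, Tuple


def quantize(vector: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one scale factor per vector."""
    scale = max(abs(x) for x in vector) / 127 or 1.0  # avoid division by zero for all-zero vectors
    return [round(x / scale) for x in vector], scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    return [code * scale for code in codes]


embedding = [0.12, -0.98, 0.45, 0.03]  # hypothetical embedding values
codes, scale = quantize(embedding)
restored = dequantize(codes, scale)

float_bytes = len(array("f", embedding).tobytes())  # float32: 4 bytes per value
int8_bytes = len(array("b", codes).tobytes())       # int8: 1 byte per value
print(float_bytes, int8_bytes)                      # 16 4
print(max(abs(a - b) for a, b in zip(embedding, restored)))  # small reconstruction error
```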

By recognizing these limitations and blending in alternative methods, you can tailor your retrieval-augmented solution, whether Agentic or otherwise, to the precise demands of your domain, performance goals, and budgetary constraints.

Summary

While RAG has bridged the gap between static LLMs and domain-specific and changing knowledge, it still handles retrieval with a one-shot approach. In this article, we introduced Agentic RAG, where autonomous “Agents” dynamically decide when and what to fetch, how to reformulate queries, and even when to call in specialized tools or human experts. We explored a range of approaches, from input routers that break down complex questions into smaller, retrievable sub-tasks, to evaluators that re-rank and refine responses, and meta-controllers that ensure safety and maintain audit trails. Along the way, we also addressed the added complexity and overhead that come with these powerful capabilities.

For companies keen to unlock real productivity gains, implementing Agentic RAG means starting small: deploy an Input Agent to smartly route customer questions, plug in a retrieval evaluator to ensure only the best sources enrich LLM answers, and add a lightweight critic or tool-calling Agent for domain-specific tasks (e.g. flexible document parsers, such as Vision Language Models). Layer on a simple meta-controller to monitor costs and catch errors, and you’ve transformed static chatbots into self-correcting, human-in-the-loop assistants. As vector stores become smaller through compression techniques and multi-stage retrieval reduces latency, Agentic RAG systems will grow more efficient. This progress paves the way for truly autonomous AI workflows, enabling teams to interact efficiently with their data and drive large-scale innovation.
