From RAG to GraphRAG

The Next Step in Smart Knowledge Retrieval

- Author: Lenny Julien Klump

- Category: Deep Dive

![From RAG to GraphRAG From RAG to GraphRAG: The Next Step in Smart Knowledge Retrieval, Deep Dive, Lenny Julien Klump, Alexander Thamm [at]](/fileadmin/_processed_/2/9/csm_from-rag-to-graph-rag-lenny-klump-alexander-thamm-at_90b7865e7c.jpg)

GraphRAG represents a key evolution in Retrieval-Augmented Generation by shifting from flat text chunks to structured knowledge graphs. This approach enables large language models to retrieve and reason over interconnected information, leading to deeper insights and more coherent, context-aware responses. As a result, GraphRAG is emerging as a powerful solution for complex information retrieval in business and beyond.

What is Retrieval Augmented Generation (RAG)?

ChatGPT conquered the world in a heartbeat, reaching 1 million users within just five days in 2022 and bringing Large Language Models (LLMs) into the mainstream. LLMs are now in widespread use, and companies have been quick to recognize their potential.

However, general-purpose LLMs have a significant limitation for corporate use: they lack internal or domain-specific knowledge, which typically exists only in company documents and databases.

Retrieval-Augmented Generation (RAG) aims to overcome this limitation and has already been successfully applied in various industries and settings. RAG enhances LLMs by supplying targeted context retrieved from integrated knowledge bases. Here's how traditional, vector-based RAG works in three key steps:

- Preparation (one-time): Documents are split into small text chunks, which are converted to numerical representations (embeddings), and stored in a vector database.

- Retrieval: When a user submits a query, it is converted into a numerical representation in the same way. This allows the system to measure the similarity between the query and each text chunk, so that the chunks thematically closest to the query can be retrieved from the vector database.

- Generation: Those retrieved text chunks (or the whole logical section where they come from) serve as additional context for the LLM. Guided by a prompt, the model synthesizes these contexts to produce a precise, knowledge-based response.

These key steps of the vector-based RAG approach are visualised in Figure 1.
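The retrieval step can be made concrete with a minimal sketch. It assumes a toy bag-of-words `embed` function (hypothetical) in place of a real embedding model, and a plain Python list in place of a vector database; only the cosine-similarity ranking and the top-k cutoff reflect how vector-based retrieval generally works.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    # Toy "embedding" (assumption): word counts. A real system would call an embedding model.
    return Counter(text.lower().replace("?", "").replace(".", "").split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Preparation (one-time): embed each chunk and store it next to its text.
chunks = [
    "Model A battery drains quickly after the last update.",
    "Supplier Y delivered 10,000 units of Battery X in March.",
    "The marketing department plans a new campaign.",
]
index = [(chunk, embed(chunk)) for chunk in chunks]

def retrieve(query: str, k: int = 2) -> list[str]:
    # Retrieval: embed the query, rank all chunks by similarity, keep only the top k.
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

print(retrieve("Why does the Model A battery drain?", k=1))
```

Note the fixed `k`: anything ranked below the cutoff never reaches the LLM, regardless of how relevant it is.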

What are the Main Limitations of RAG?

Modern RAG systems have revolutionized knowledge access and can work very well in well-defined environments. However, the approach reaches its limits when knowledge is distributed across sources, when questions require layered reasoning, and as the amount of data grows. Shortcomings of the traditional RAG approach include:

- No Deep Understanding: Vector-based RAG usually retrieves text based on how closely its embedding matches that of the user's question, which still depends heavily on similar wording. This can lead to missed information, e.g. when the same idea is expressed in different words, when meaning is implied rather than stated, or when hierarchies are involved. This limitation stems from the fact that vector-based RAG treats documents as unstructured text, without awareness of relationships or context.

- Fragmented Information: Chunks are retrieved independently of one another, often from different sources. This can lead to fragmented or even contradictory responses.

- Weak Global Reasoning: RAG is not well suited to global queries such as “What are the main themes in this dataset?”, because the system relies on a handful of specific text chunks and does not build a coherent global representation of the data.

- Scalability Challenges: As the amount of information in the vector database grows, RAG may overlook relevant information, since it only retrieves a fixed number of top-matching results. Additionally, most RAG systems lack an efficient mechanism for structured indexing.

What is GraphRAG, and how does it Address RAG’s Shortcomings?

GraphRAG was introduced by Microsoft Research in the paper From Local to Global: A Graph RAG Approach to Query-Focused Summarization (2024). It describes a more advanced retrieval approach that aims to overcome the shortcomings of the traditional method described above.

While the traditional RAG approach excels in settings with structured FAQs or flat documents, GraphRAG’s key strengths lie in answering global queries such as “What are the main themes in Topic X?” and, more importantly, in interpreting narrative or connected data by modelling key entities and the relationships between them.

The core concept of GraphRAG is to leverage graphs G = (V, E), which consist of a set of vertices (or nodes) V connected by (optionally weighted and/or directed) edges E. In GraphRAG, entities are represented as nodes and their relationships as edges. Figure 2 shows an example knowledge graph for a technology company.

GraphRAG was designed to reason over interconnected data points. The following example, based on the fictional company shown in Figure 2, illustrates the difference from traditional RAG.

Scenario: A technology company is investigating inconsistent battery life in one of its smartphone models.

With a traditional RAG system, the retriever returns documents such as customer support tickets, a Q&A entry on battery performance, and a supplier invoice. While each document is relevant, they are presented in isolation, making it hard for the system to connect the dots.

With GraphRAG, the same data is structured into a knowledge graph, a representation consisting of nodes and the edges connecting them. Entities such as products, components, suppliers and vendors become nodes, linked by edges representing relationships like produced by or type of (Figure 2). When queried, GraphRAG can traverse these connections and reveal, for example, that all affected devices share a common component, Battery Type X from Supplier Y, pointing to a clear, transparent potential cause of the battery issues.

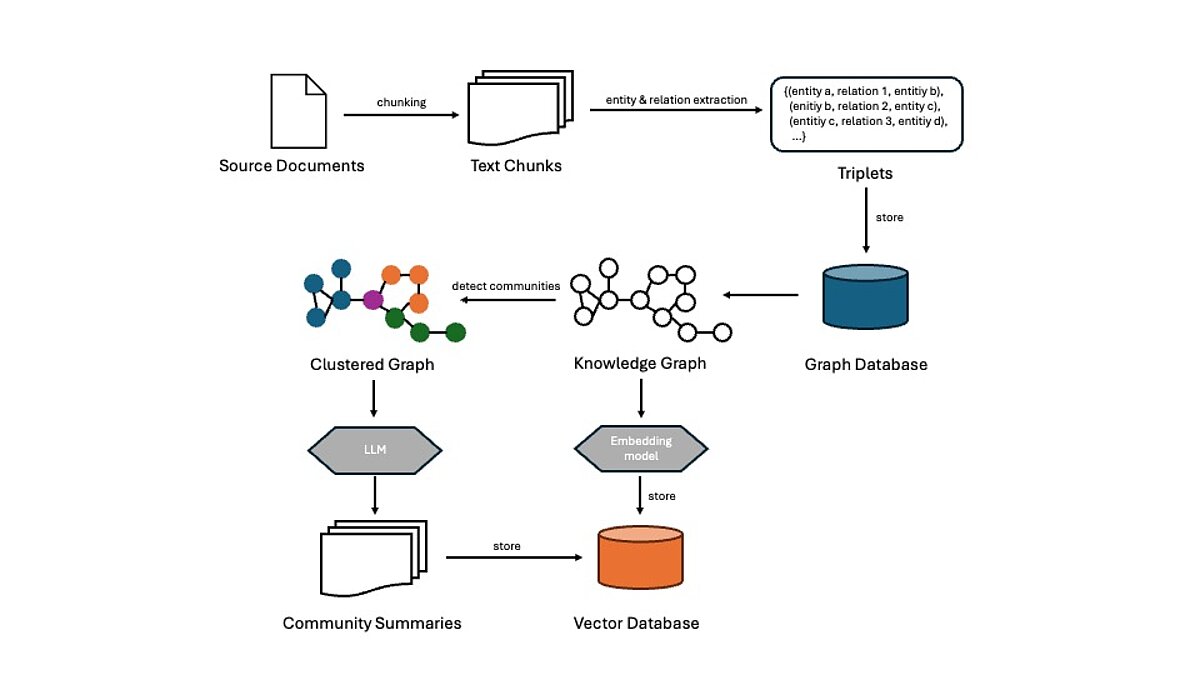
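The battery scenario can be sketched as a multi-hop query over a small in-memory graph. The products, tickets, edges and relationship names below are illustrative assumptions loosely modelled on the fictional company, not data from Figure 2 itself.

```python
from collections import defaultdict

# Directed, labelled edges: (head, relation, tail). All values are hypothetical.
edges = [
    ("Model A", "has component", "Battery X"),
    ("Model B", "has component", "Battery X"),
    ("Model C", "has component", "Battery Z"),
    ("Battery X", "produced by", "Supplier Y"),
    ("Battery Z", "produced by", "Supplier W"),
    ("Ticket 101", "reports issue with", "Model A"),
    ("Ticket 102", "reports issue with", "Model B"),
]

out_edges = defaultdict(list)
for head, rel, tail in edges:
    out_edges[head].append((rel, tail))

def neighbours(node, relation):
    # Follow outgoing edges of one relationship type.
    return [tail for rel, tail in out_edges[node] if rel == relation]

# Hop 1: which products are mentioned in support tickets?
affected = {p for h, r, p in edges if r == "reports issue with"}
# Hop 2: which components do all affected products have in common?
shared = set.intersection(*(set(neighbours(p, "has component")) for p in affected))
# Hop 3: who produces the shared component(s)?
suppliers = {s for c in shared for s in neighbours(c, "produced by")}
print(sorted(affected), shared, suppliers)
```

Each hop follows explicit edges, so the finding (Battery X from Supplier Y) comes with the chain of relationships that produced it, something isolated text chunks do not provide.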

How does the GraphRAG Pipeline Work?

Text Chunking

Like standard RAG, GraphRAG starts by splitting large text documents into smaller, manageable chunks that can be processed individually. These chunks might be sentences, sections, or other logical units.
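A minimal chunking sketch, assuming fixed-size word windows with overlap so that facts spanning a boundary appear in at least one chunk. The function name and the `size`/`overlap` defaults are illustrative; many pipelines split on sentences or sections instead.

```python
def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks

print(chunk_words("one two three four five six seven", size=3, overlap=1))
```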

Entity and Relationship Extraction

Next, relevant entities mentioned in the documents (e.g. departments, vendors, products) and their relationships (e.g. product A is produced by vendor B) need to be identified and extracted.

Traditionally, this was done with Natural Language Processing (NLP) techniques such as Named Entity Recognition (NER) combined with relation extraction. More recently, LLMs have become powerful enough to perform this task given a well-written prompt and a clear output schema. Tools such as LangChain’s LLMGraphTransformer can simplify this process even further.

The extracted results can then be organized into a set of triplets G = {(h, r, t)}, each consisting of a head entity, a relation, and a tail entity, e.g. (Berlin, capital of, Germany).
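The prompt-plus-output-schema approach can be sketched as follows. The prompt wording and JSON schema are assumptions, and the LLM call is replaced by a hard-coded, hypothetical response so that the parsing and validation logic runs on its own.

```python
import json
from typing import NamedTuple

class Triplet(NamedTuple):
    head: str
    relation: str
    tail: str

# Hypothetical prompt; doubled braces are literal braces in str.format.
PROMPT_TEMPLATE = (
    "Extract entities and relationships from the text below. "
    'Return JSON: {{"triplets": [{{"head": str, "relation": str, "tail": str}}]}}\n\n'
    "Text: {text}"
)

def parse_triplets(llm_output: str) -> list[Triplet]:
    """Validate the model's JSON and keep only complete (h, r, t) entries."""
    data = json.loads(llm_output)
    triplets = []
    for item in data.get("triplets", []):
        parts = [str(item.get(k) or "").strip() for k in ("head", "relation", "tail")]
        if all(parts):  # drop entries with a missing or empty field
            triplets.append(Triplet(*parts))
    return triplets

prompt = PROMPT_TEMPLATE.format(text="Berlin is the capital of Germany.")
# Hypothetical model response; a real system would send `prompt` to an LLM.
fake_response = (
    '{"triplets": [{"head": "Berlin", "relation": "capital of", "tail": "Germany"},'
    ' {"head": "Berlin"}]}'
)
print(parse_triplets(fake_response))
```

Validating the output matters because LLMs occasionally return incomplete entries; here the second, partial triplet is discarded rather than written to the graph.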

Graph Construction and Storage

The extracted triplets can then be represented as a knowledge graph in which entities are nodes connected by edges that express their relationships. Storage, visualization and efficient querying are handled by specialized graph databases such as Neo4j, Amazon Neptune or Memgraph.
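As a sketch of loading triplets into a graph database such as Neo4j, the function below builds one parameterised Cypher `MERGE` statement per triplet. The `Entity` label, the `name` property and the generic `RELATES_TO` relationship with a `type` property are assumptions; a single generic relationship type is used because, in most Neo4j versions, relationship types cannot be passed as ordinary query parameters.

```python
MERGE_TRIPLET = (
    "MERGE (h:Entity {name: $head}) "
    "MERGE (t:Entity {name: $tail}) "
    "MERGE (h)-[:RELATES_TO {type: $relation}]->(t)"
)

def to_cypher(triplets):
    """Return (query, parameters) pairs, one per (head, relation, tail) triplet."""
    return [
        (MERGE_TRIPLET, {"head": h, "relation": r, "tail": t})
        for h, r, t in triplets
    ]

statements = to_cypher([("Battery X", "produced by", "Supplier Y")])
for query, params in statements:
    print(params)
```

The pairs could then be executed with the official Neo4j Python driver, e.g. `session.run(query, params)`. Using `MERGE` rather than `CREATE` means that loading the same triplet twice does not create duplicate nodes or edges, which helps when the graph is updated incrementally.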

Optional: Node Embeddings

Semantic search retrieves content based on meaning rather than on the exact keyword matching typically used in graph database queries. This allows the system to return results that are conceptually related to the user input, even if the wording differs. For example, a query like “Who makes the battery in Model A?” can still retrieve the correct result even though the graph only contains the triplet (Supplier Y, supplies, Battery X).

This flexibility is particularly valuable for localized queries where users are looking for specific pieces of information. To enable such search capabilities, graph nodes can be encoded as vectors using models like Node2Vec or GraphSAGE.

While vector-based RAG also uses embeddings to enable semantic search over text chunks, it has no notion of how concepts are connected.

In contrast, graph embedding models capture a node's structural context by incorporating its relationships with neighbouring nodes. Models such as GraphSAGE can additionally combine this with node attributes such as textual features, whereas Node2Vec learns from the graph structure alone.
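The idea of mixing a node's own attributes with those of its neighbours can be illustrated with one untrained mean-aggregation step in the spirit of GraphSAGE. This is a simplified sketch, not GraphSAGE or Node2Vec themselves: the two-dimensional vectors are made-up stand-ins for text embeddings, and the fixed 50/50 `self_weight` replaces the weights a real model would learn.

```python
def aggregate(features: dict[str, list[float]],
              neighbours: dict[str, list[str]],
              self_weight: float = 0.5) -> dict[str, list[float]]:
    """One mean-aggregation step: blend each node's vector with its neighbours' mean."""
    out = {}
    for node, vec in features.items():
        nbrs = neighbours.get(node, [])
        if nbrs:
            mean = [sum(features[n][i] for n in nbrs) / len(nbrs) for i in range(len(vec))]
        else:
            mean = vec  # isolated node: keep its own features
        out[node] = [self_weight * v + (1 - self_weight) * m for v, m in zip(vec, mean)]
    return out

features = {"Model A": [1.0, 0.0], "Battery X": [0.0, 1.0], "Supplier Y": [0.0, 0.0]}
neighbours = {
    "Model A": ["Battery X"],
    "Battery X": ["Model A", "Supplier Y"],
    "Supplier Y": ["Battery X"],
}
print(aggregate(features, neighbours))
```

After one step, Supplier Y's vector is no longer all zeros: it now reflects its connection to Battery X, even though its own (made-up) features carried no signal.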

Optional: Community Detection & Summarization

To provide even richer context, graph clustering techniques such as the Leiden algorithm can be used to detect tightly connected groups of nodes. These communities often reflect subtopics or shared themes within the knowledge graph (e.g. a specific department). Once the communities are detected, an LLM can generate a summary for each cluster describing its key entities, relationships and other relevant information.

The created summaries can then also be embedded for semantic retrieval and/or saved as a lookup table with identifiers stored as node attributes.
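Leiden itself is usually run through a dedicated library (for example leidenalg), so the sketch below swaps in a much simpler technique, label propagation, purely to illustrate grouping densely connected nodes and storing one summary per community. The node names are hypothetical and the summary string is a placeholder for an LLM-generated summary.

```python
from collections import Counter, defaultdict

def label_propagation(adj: dict[str, set[str]], max_iter: int = 10) -> dict[str, str]:
    """Asynchronous label propagation: each node adopts its neighbours' most common label."""
    labels = {node: node for node in adj}  # every node starts in its own community
    for _ in range(max_iter):
        changed = False
        for node in sorted(adj):  # fixed order keeps the result deterministic
            if not adj[node]:
                continue
            counts = Counter(labels[n] for n in adj[node])
            best = max(counts.values())
            new_label = min(lbl for lbl, c in counts.items() if c == best)  # tie-break
            if new_label != labels[node]:
                labels[node] = new_label
                changed = True
        if not changed:
            break
    return labels

# Hypothetical undirected graph with two tightly connected groups.
edges = [
    ("Model A", "Battery X"), ("Battery X", "Supplier Y"), ("Supplier Y", "Model A"),
    ("Marketing Dept", "Campaign Q3"), ("Campaign Q3", "Agency Z"), ("Agency Z", "Marketing Dept"),
]
adj = defaultdict(set)
for a, b in edges:
    adj[a].add(b)
    adj[b].add(a)

labels = label_propagation(adj)  # node -> community id (could be stored as a node attribute)
communities = defaultdict(list)
for node, label in labels.items():
    communities[label].append(node)

# Lookup table keyed by community id. Placeholder text: an LLM would write the real summary.
summary_table = {cid: "Entities: " + ", ".join(sorted(m)) for cid, m in communities.items()}
print(summary_table)
```

Label propagation is simple but can merge groups joined by a single bridging edge, which is one reason algorithms such as Leiden are preferred in practice.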

Retrieval Through Traversal

Once the knowledge graph has been constructed and stored in a database, the system is ready to process questions from the user. The following process is applied to retrieve relevant information:

- Entity Identification in the Query: First, the system identifies entities mentioned in the user’s query, e.g. “What are the features of Model A?” In this case, the entity is Model A.

- Semantic Search: If the graph nodes were embedded into a vector space, the system can retrieve relevant entities or summaries, even if the user’s formulation differs from stored labels.

- Graph Traversal (Local Search): For targeted questions, the system starts from the identified entities and traverses the graph to collect connected nodes and relationships.

- Community Summaries (Global Search): For broader queries such as “What is the structure of the Marketing Department?” the system fetches the pre-generated community summaries to deliver informed answers.

- Context Aggregation and Ranking: All retrieved information, such as facts, graph paths and summaries, is scored for relevance, and only the highest-scoring content is passed to the LLM for answer generation.
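The local-search part of this process can be sketched in a few lines. Naive substring matching stands in for entity identification (real systems might use NER, an LLM or the semantic search described above), followed by a breadth-first traversal up to `max_hops` and a ranking that prefers facts closer to the query entity. The triples, function names and hop-count heuristic are illustrative assumptions.

```python
from collections import deque

triples = [
    ("Model A", "has component", "Battery X"),
    ("Battery X", "produced by", "Supplier Y"),
    ("Model A", "has feature", "OLED display"),
    ("Supplier Y", "located in", "Region Z"),
]

def identify_entities(query: str, known: list[str]) -> list[str]:
    # Naive entity identification: case-insensitive substring match on node names.
    q = query.lower()
    return [e for e in known if e.lower() in q]

def local_search(query, triples, max_hops=2, top_n=5):
    # Index each edge under both endpoints so traversal can follow it in either direction.
    adj = {}
    for h, r, t in triples:
        adj.setdefault(h, []).append(((h, r, t), t))
        adj.setdefault(t, []).append(((h, r, t), h))
    start = identify_entities(query, sorted(adj))
    seen = set(start)
    facts = {}  # fact -> hop distance at which it was first reached
    queue = deque((node, 0) for node in start)
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue
        for fact, nxt in adj[node]:
            facts.setdefault(fact, depth + 1)
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, depth + 1))
    # Rank: fewer hops first, then alphabetically for a stable order.
    ranked = sorted(facts, key=lambda f: (facts[f], f))
    return [" ".join(f) for f in ranked[:top_n]]

print(local_search("What are the features of Model A?", triples))
```

With `max_hops=2`, the fact about Region Z (three hops away) is never collected, which keeps the context small but also shows why the hop limit must be chosen with the domain in mind.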

Answer Generation

Lastly, the most relevant retrieved information and the original user question are given to the LLM. The model then generates a grounded and informed answer to the user query.
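This final step essentially amounts to prompt assembly. The template wording and function name below are assumptions, and the LLM call itself is left out because it depends on the chosen provider.

```python
def build_answer_prompt(question: str, facts: list[str]) -> str:
    """Combine the user question with retrieved graph facts into one grounded prompt."""
    context = "\n".join(f"- {fact}" for fact in facts)
    return (
        "Answer the question using only the facts below. "
        "If the facts are insufficient, say so.\n\n"
        f"Facts:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

prompt = build_answer_prompt(
    "Who makes the battery in Model A?",
    ["Model A has component Battery X", "Battery X produced by Supplier Y"],
)
print(prompt)
```

Instructing the model to rely only on the supplied facts, and to say when they are insufficient, is what keeps the answer grounded in the graph.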

An example workflow is shown in Figure 3. Variations are possible: for instance, node embeddings or community summaries may be stored as node attributes directly in the graph database. Some systems do not use embeddings at all and rely purely on structural reasoning via graph traversal, schema-based filtering, or predefined query paths tailored to domain-specific relationships.

How do Companies Gain a Competitive Advantage with GraphRAG?

GraphRAG combines the capabilities of LLMs and knowledge graphs to deliver more accurate and comprehensive responses, especially for complex, layered questions. In a business setting, this translates into tangible benefits such as better-informed decision-making, as responses are both precise and context-aware. Additionally, delivering accurate answers to complex queries enhances customer satisfaction and trust in AI-driven solutions.

Since information is retrieved from a graph, results can be traced and explained through the paths taken. This makes the system’s reasoning transparent, which is especially valuable for meeting regulatory requirements and ensuring compliance.
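Because answers are derived from explicit edges, the supporting evidence can be reported as a chain of facts. This sketch, with hypothetical triples and a hypothetical `explain_path` function, recovers such a chain using breadth-first search with parent pointers; it illustrates the idea rather than how any particular GraphRAG library exposes provenance.

```python
from collections import deque

def explain_path(triples, source, target):
    """Shortest chain of facts linking source to target (edges may be followed either way)."""
    adj = {}
    for h, r, t in triples:
        adj.setdefault(h, []).append((t, (h, r, t)))
        adj.setdefault(t, []).append((h, (h, r, t)))
    parent = {source: None}  # node -> (previous node, fact used to reach it)
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            break
        for nxt, fact in adj.get(node, []):
            if nxt not in parent:
                parent[nxt] = (node, fact)
                queue.append(nxt)
    if target not in parent:
        return []  # not connected: no evidence chain
    path, node = [], target
    while parent[node] is not None:
        node, fact = parent[node]
        path.append(" ".join(fact))
    return path[::-1]

triples = [
    ("Ticket 101", "reports issue with", "Model A"),
    ("Model A", "has component", "Battery X"),
    ("Battery X", "produced by", "Supplier Y"),
]
for step in explain_path(triples, "Ticket 101", "Supplier Y"):
    print(step)
```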

Beyond accuracy and explainability, GraphRAG also supports scalability. Graph structures allow knowledge to be indexed and organized efficiently, even as the dataset grows. In vector-based RAG systems, accurate retrieval becomes increasingly difficult as data volume grows, whereas GraphRAG’s more targeted retrieval reduces the amount of irrelevant information retrieved in data-heavy environments. For businesses, this can lower operational costs, since more efficient retrieval and processing reduce computational expenses and resource usage.

Where can GraphRAG be Applied?

While the traditional RAG approach works well for Q&A over flat documents without hierarchy, GraphRAG is designed for environments where data is highly connected, structured around entities and relationships, or distributed across multiple sources. By leveraging knowledge graphs, the system can reason over linked information, making it well-suited for use cases where traceability and relationship awareness are critical.

Example applications across industries include:

- Financial Services: Accounts, institutions and people can be modelled as entities, with relationships such as transactions and ownership as edges. GraphRAG can analyse, summarise and interpret relevant parts of the network to support, e.g., fraud prevention or risk analysis.

- Manufacturing: Root-cause analysis and maintenance planning can be supported with relevant insights from distributed sources like vendor logs, maintenance records or system dependencies.

- Enterprise Knowledge Management: Internal assets such as documents, presentations, and code can be organized in a graph by topic, department, or project. GraphRAG enables precise, context-aware insights across silos.

- Healthcare: Connecting patient records such as symptoms, lab results, and medical history with relevant research and clinical guidelines allows the retrieval of trustworthy context, assisting in diagnoses.

What are the Key Challenges of a GraphRAG Implementation?

Implementing GraphRAG in a corporate setting can offer significant benefits such as better contextual understanding, improved retrieval accuracy, and transparency in decision-making. However, the following obstacles can be challenging to overcome when implementing such a solution:

Graph Construction & Maintenance

The performance of the system is highly dependent on the quality and consistency of the constructed knowledge graph. Companies usually deal with diverse data sources (documents, presentations, databases, vendor logs, support tickets, …). Integrating these various sources into a coherent knowledge graph can be a complex task requiring extensive data cleaning, transformation and standardisation.

Furthermore, keeping the graph up to date is crucial. New data must be integrated properly, and existing relationships must stay accurate as the system evolves. Without ongoing governance, the graph can quickly become outdated, reducing output quality.

Performance and Scalability

As more data is integrated into the knowledge graph, query performance can suffer, because traversals through a large, densely connected network can introduce latency in real-time settings. For optimal performance, schemas and indexing strategies need to be defined carefully. Hybrid approaches that combine graph traversal with vector search can also help balance the system's accuracy against response time.
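One way to realise such a hybrid is to blend a vector-similarity score with graph proximity when ranking candidate facts. The linear weighting, the `alpha` value and the example scores are assumptions chosen for illustration; in practice they would be tuned on real queries.

```python
def hybrid_score(similarity: float, hops: int, alpha: float = 0.7) -> float:
    """Blend vector similarity (0..1) with graph proximity; alpha weights similarity."""
    proximity = 1.0 / (1 + hops)  # 0 hops -> 1.0, 1 hop -> 0.5, 3 hops -> 0.25
    return alpha * similarity + (1 - alpha) * proximity

candidates = {
    # fact: (hypothetical cosine similarity to the query, hops from the query entity)
    "Battery X produced by Supplier Y": (0.62, 1),
    "Supplier Y located in Region Z": (0.70, 3),
}
ranked = sorted(candidates, key=lambda f: hybrid_score(*candidates[f]), reverse=True)
print(ranked)
```

Here the closer fact ranks first (0.584 vs. 0.565) despite its lower similarity, showing how graph structure can correct a purely vector-based ranking.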

Security and Compliance

Like any system used in an enterprise context, GraphRAG must adhere to security and compliance standards. Depending on the application, a graph might link people to monetary transactions, health records or other sensitive information. Such a graph can make connections explicit that were not exposed before, making strict access control and privacy-preserving data processing crucial.
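A simple safeguard is to filter retrieved facts against the user's permissions before they are added to the LLM's context. The three-level sensitivity scheme and all names below are hypothetical; access rules could also be enforced in the graph database itself.

```python
from typing import NamedTuple

class Fact(NamedTuple):
    head: str
    relation: str
    tail: str
    sensitivity: str  # hypothetical levels: "public", "internal", "restricted"

LEVELS = {"public": 0, "internal": 1, "restricted": 2}

def filter_for_user(facts: list[Fact], clearance: str) -> list[Fact]:
    """Drop facts above the user's clearance before they reach the LLM context."""
    limit = LEVELS[clearance]
    return [f for f in facts if LEVELS[f.sensitivity] <= limit]

facts = [
    Fact("Account 1", "owned by", "Person P", "restricted"),
    Fact("Account 1", "held at", "Bank B", "internal"),
    Fact("Bank B", "located in", "Berlin", "public"),
]
print([f.relation for f in filter_for_user(facts, "internal")])
```

Filtering before prompt assembly matters because anything placed in the context can appear in the generated answer.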

Requirement of Specialised Expertise

Successfully implementing a GraphRAG system requires a team with expertise in various disciplines, namely: natural language processing, graph construction, prompt engineering, software engineering and database management.

As a leading Data and AI consultancy, [at] brings together this expertise and actively supports organizations in designing and deploying tailored solutions from early-stage strategy to production-ready implementation.

Why is GraphRAG the Next Step for Smarter Knowledge Retrieval?

As businesses continuously strive to extract more value from their data, Microsoft Research presented GraphRAG as a novel framework that extends the capabilities of LLMs toward deeper contextual reasoning.

Traditional vector-based RAG extends the capabilities of LLMs by making external or company-specific knowledge available, enabling source-backed answers even in regulated settings.

GraphRAG builds on this foundation, specializing in environments where relationships between entities, transparency and context are crucial. Information is structured as a knowledge graph, allowing LLMs to reason over interconnected entities, delivering more accurate, contextual and explainable outputs.

As the technology and tools mature, current limitations such as the effort of graph construction are likely to be addressed, with LangChain’s LLMGraphTransformer an early example of this evolution. Furthermore, agentic AI systems could help automate tasks such as data processing or graph maintenance.

Since the original GraphRAG paper was published, the field has seen a great deal of research. LightRAG, PathRAG, PolyG and E2GraphRAG are notable recent approaches that leverage graph structures in different ways, offering strengths such as dynamic updates, token efficiency, speedups and scalability.

In summary, combining LLM capabilities with knowledge structured as graphs offers unique advantages and is the next logical step for information retrieval in a business context.

Would you like to know whether your business is ready for GraphRAG and how it could benefit from it? Contact us today for a free consultation!

References

Papers:

From Local to Global: A Graph RAG Approach to Query-Focused Summarization (https://arxiv.org/abs/2404.16130)

LightRAG: Simple and Fast Retrieval-Augmented Generation (https://arxiv.org/abs/2410.05779)

PathRAG: Pruning Graph-based Retrieval Augmented Generation with Relational Paths (https://arxiv.org/abs/2502.14902)

PolyG: Effective and Efficient GraphRAG with Adaptive Graph Traversal (https://arxiv.org/abs/2504.02112)

E2GraphRAG: Streamlining Graph-based RAG for High Efficiency and Effectiveness (https://arxiv.org/html/2505.24226v1)

Implementation Examples:

https://python.langchain.com/docs/how_to/graph_constructing/

https://python.langchain.com/docs/integrations/retrievers/graph_rag/

https://neo4j.com/docs/neo4j-graphrag-python/current/user_guide_rag.html#graphrag-configuration

https://github.com/microsoft/graphrag/blob/main/RAI_TRANSPARENCY.md#what-is-graphrag

Blogs:

https://www.chitika.com/uses-of-graph-rag/

https://memgraph.com/docs/deployment/workloads/memgraph-in-graphrag

https://atos.net/en/blog/graphrag-transforming-business-intelligence-retrieval-augmented-generation

https://machinelearningmastery.com/building-graph-rag-system-step-by-step-approach/

Videos:

GraphRAG Explained: AI Retrieval with Knowledge Graphs & Cypher: https://www.youtube.com/watch?v=Za7aG-ooGLQ

Graph RAG Evolved: PathRAG (Relational Reasoning Paths): https://www.youtube.com/watch?v=oetP9uksUwM

Graph RAG: Improving RAG with Knowledge Graphs: https://www.youtube.com/watch?v=vX3A96_F3FU
