Chatting with Tables

Query Data with Agentic AI


- Author: Dr. Stefan Lautenbacher

- Category: Deep Dive


In today’s data-driven world, businesses rely heavily on tabular data to make informed decisions. From machine data to sales figures and supply chain metrics, spreadsheets and databases hold a wealth of information. Yet accessing and benefiting from this data often requires technical expertise, either with specialized software or with programming languages such as SQL or Python. What if extracting actionable insights were as simple as typing a question into a chatbot? Enter chat applications that use an agentic setup to answer natural language questions from tabular data.

Accessing Company Knowledge Effortlessly

At [at], we envision a future where businesses can effortlessly access all of their company knowledge through simple chat applications. This vision starts with helping our clients access their explicit knowledge: the structured and documented information already available within their organization.

In contrast, implicit knowledge is gained through experience and is often harder to capture in written form. However, it can still be gathered and transformed into explicit knowledge in a second step—for example, by analyzing recorded videos or similar formats.

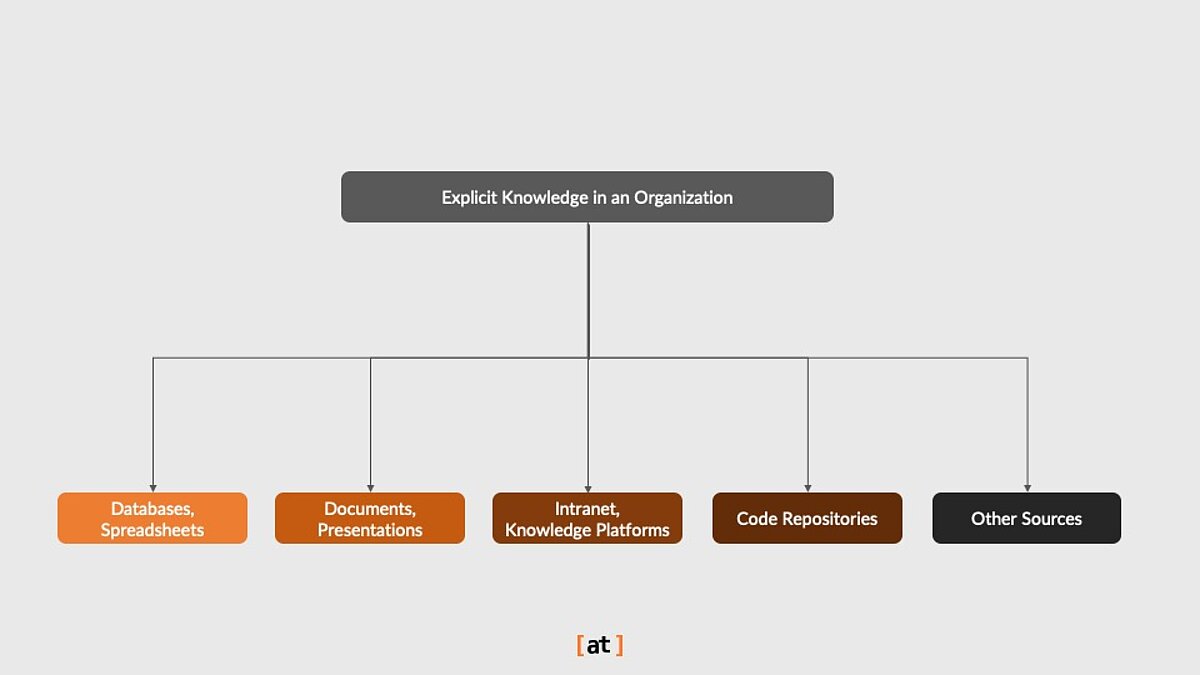

Explicit knowledge resides in a variety of formats, including databases, spreadsheets, documents, presentations, intranet and knowledge platform pages, code bases, and more (see Figure 1). For efficient retrieval, we differentiate between the two most common types of data:

- Text-based data can be effectively accessed via Retrieval-Augmented Generation (RAG)

- Tabular data is most flexibly queried using an Agentic chat solution (executing SQL or Python code)

The reason for this distinction is that the vector search used in most RAG systems works best with the sequential nature of text, which can be chunked and embedded into a vector space. In tabular databases or spreadsheets, by contrast, related pieces of information are often not arranged sequentially. An agentic chat application is better suited to retrieve and combine the relevant information from different locations in one or more tables.
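A toy illustration may help make this concrete. The sketch below uses hypothetical order data and only the Python standard library: it serializes table rows into text chunks the way a naive RAG pipeline might, and contrasts that with a single SQL aggregation. The chunk size and table layout are assumptions chosen for illustration.

```python
import sqlite3

# Toy order data (hypothetical): six rows, two regions, interleaved.
rows = [
    ("north", 120.0), ("south", 80.0), ("north", 30.0),
    ("south", 45.0), ("north", 50.0), ("south", 25.0),
]

# RAG-style view: serialize the rows as text and split into fixed-size chunks.
lines = [f"region={region}, revenue={revenue}" for region, revenue in rows]
CHUNK_SIZE = 2
chunks = ["\n".join(lines[i:i + CHUNK_SIZE]) for i in range(0, len(lines), CHUNK_SIZE)]

# Each chunk holds only part of the "north" rows, so retrieving only some
# chunks by similarity cannot yield the correct regional total.
north_rows_per_chunk = [chunk.count("region=north") for chunk in chunks]

# Query view: one aggregation touches every relevant row, wherever it is stored.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, revenue REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
totals = dict(conn.execute(
    "SELECT region, SUM(revenue) FROM orders GROUP BY region ORDER BY region"
).fetchall())

print(north_rows_per_chunk)  # [1, 1, 1]
print(totals)                # {'north': 200.0, 'south': 150.0}
```

A question such as "What is the total revenue per region?" depends on rows scattered across all chunks, while the query answers it in one step.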

To address the different ways of chatting with data, we are launching a blog series exploring three innovative approaches that unlock explicit knowledge in your organization:

- Agentic Chat with Tabular Data: Simplifying access to tabular data through natural language queries.

- GraphRAG: Harnessing the power of graph structures for knowledge retrieval.

- Agentic RAG: Leveraging agent-driven methods to extract and interpret knowledge from complex sources.

In this blog, we start with the Agentic Chat-With-Tabular-Data application. It demonstrates how natural language interfaces can revolutionize the way organizations interact with their tabular data.

How to Chat with your Tabular Data

At the core of a chat application for tabular data lies an Agentic setup based on Large Language Models (LLMs). Unlike chatting with text data via simple RAG systems, flexibly retrieving information from tabular data is a multi-step process, which is why AI Agents are needed. The retrieval process works as follows:

- Natural Language Processing: The system processes and interprets user queries written in plain language, such as “What are the most important orders to send out today?”

- Dynamic Query Generation: Based on the user input, the application generates SQL queries or Python code and executes them on connected databases or Excel-type spreadsheets.

- Answer in Natural Language and Optional Visualization: The results from the data queries are returned to the user in the form of a natural language answer to the input prompt. Beyond plain text output, the chat application can optionally generate charts, tables, or graphs to present data more effectively.
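The three steps above can be sketched as a small pipeline. This is a minimal, offline sketch under stated assumptions: `generate_sql` and `phrase_answer` are hypothetical stand-ins for LLM calls (here they return fixed or template output), interpretation and query generation are merged into one step, and the `orders` table, including the assumption that "open" orders are the ones still to be sent out, is invented for illustration.

```python
import sqlite3

def generate_sql(question: str, schema: str) -> str:
    """Hypothetical stand-in for the LLM step that turns a question into SQL.

    A real system would prompt a model with the question and the schema;
    this stub returns a fixed query so the sketch runs offline.
    """
    return ("SELECT order_id, customer FROM orders "
            "WHERE status = 'open' ORDER BY priority DESC LIMIT 2")

def phrase_answer(question: str, columns: list, rows: list) -> str:
    """Hypothetical stand-in for the LLM step that phrases the final answer."""
    if not rows:
        return "I could not find any matching records."
    items = "; ".join(", ".join(f"{c}={v}" for c, v in zip(columns, row)) for row in rows)
    return f"For '{question}', the top results are: {items}"

def chat_with_table(conn: sqlite3.Connection, question: str) -> str:
    # The schema is passed to the query generator so it knows tables and columns.
    schema = "\n".join(r[0] for r in conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table'"))
    sql = generate_sql(question, schema)            # 1) interpret + generate query
    cursor = conn.execute(sql)                      # 2) execute on the data
    columns = [d[0] for d in cursor.description]
    return phrase_answer(question, columns, cursor.fetchall())  # 3) answer

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, customer TEXT, priority INTEGER, status TEXT)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", [
    (1, "Acme", 2, "open"), (2, "Globex", 5, "open"),
    (3, "Initech", 9, "shipped"), (4, "Umbrella", 4, "open"),
])
answer = chat_with_table(conn, "What are the most important orders to send out today?")
print(answer)
```

In a real application, the optional visualization step would take the same `columns` and rows and render them as a chart or table instead of text.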

This seamless interaction removes the technical barrier, enabling internal and external users of an organization to access tabular data for a variety of use cases.

The Agentic Teamwork Explained

The chat application for tabular data is powered by LLM-based Agents that work together as a team to answer the user's question. Agents are essentially Large Language Models that each fulfill a specific role, defined once in the Agent's so-called system prompt. The Agents communicate with each other and are equipped with different tools (e.g., Python functions). For an introduction to multi-agent LLM systems and a more detailed overview of their different architectures, visit our company blog.
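To show what "a role defined in a system prompt, plus tools" can look like in code, here is a minimal sketch. The `Agent` class, the `run_sql` tool, and the `products` table are hypothetical and not tied to any particular agent framework; the tool call that an LLM would normally emit is hard-coded so the dispatch logic can run on its own.

```python
import sqlite3
from dataclasses import dataclass, field
from typing import Any, Callable, Dict

@dataclass
class Agent:
    """An LLM role: a system prompt defined once, plus the tools it may call."""
    name: str
    system_prompt: str
    tools: Dict[str, Callable[..., Any]] = field(default_factory=dict)

    def call_tool(self, tool_name: str, **kwargs: Any) -> Any:
        # Only tools registered for this agent can be executed.
        if tool_name not in self.tools:
            raise KeyError(f"Agent '{self.name}' has no tool '{tool_name}'")
        return self.tools[tool_name](**kwargs)

# A tiny in-memory table standing in for a real product database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, stock INTEGER)")
conn.executemany("INSERT INTO products VALUES (?, ?)", [("bed", 0), ("desk", 12)])

def run_sql(query: str) -> list:
    """Tool: execute a SQL query and return all rows."""
    return conn.execute(query).fetchall()

manager = Agent("manager", "You are the manager. Split the user question into tasks "
                           "for the coder and summarize the results for the user.")
coder = Agent("coder", "You are the coder. Write SQL for your task and run it "
                       "with the run_sql tool.", tools={"run_sql": run_sql})

# In a real framework the LLM emits a structured tool call like this one;
# here it is hard-coded to show how the call is dispatched.
tool_call = {"tool": "run_sql", "args": {"query": "SELECT stock FROM products WHERE name = 'bed'"}}
result = coder.call_tool(tool_call["tool"], **tool_call["args"])
print(result)  # [(0,)]
```

Keeping tools registered per Agent means the manager in this sketch cannot execute code directly; only the coder can.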

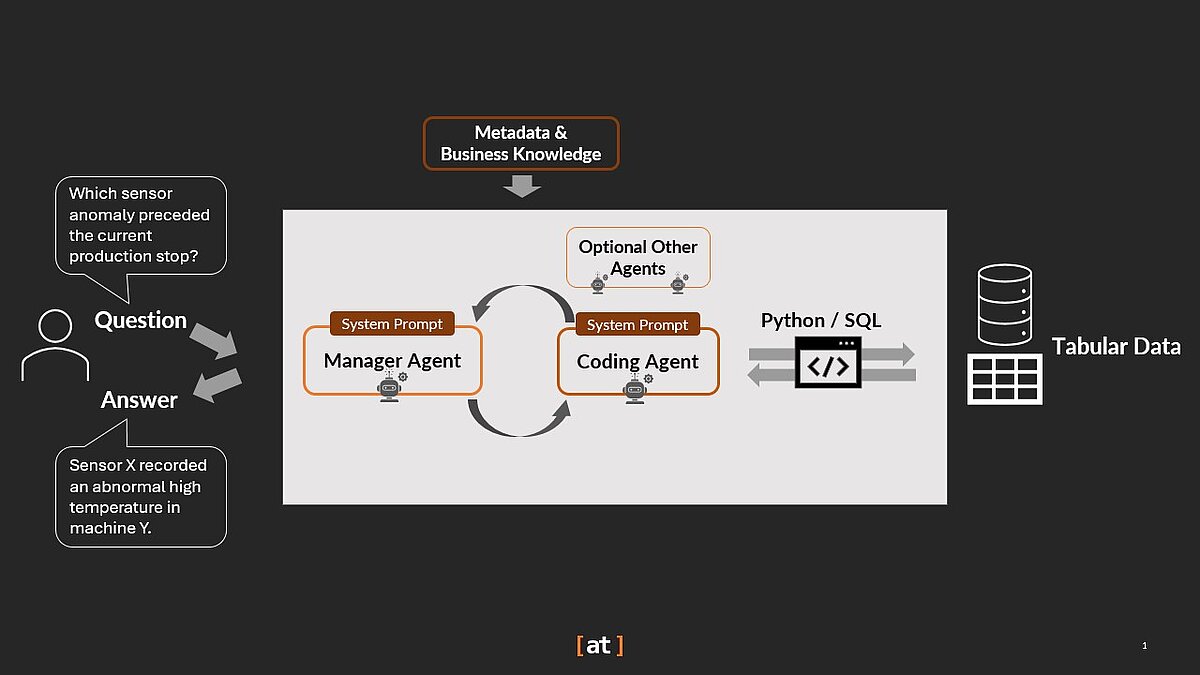

In our case, the individual LLM-based Agents work together to answer a question based on tabular data. The specific Agentic setup can vary, but typically it includes at least a manager Agent and a coder Agent. The manager Agent receives the question from the user and makes a plan by translating it into actionable tasks for the other Agent(s). Following these tasks, the coder Agent generates code and executes it on the tabular data. If the result satisfies the manager Agent, it summarizes the findings and returns them to the user (see Figure 2).
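The manager/coder loop can be sketched as follows. Every function prefixed with `manager_` or `coder_` is a hypothetical stand-in for an LLM call, and the acceptance rule (a non-empty result) is a deliberately simple assumption; a real manager Agent would judge whether the result actually answers the question.

```python
import sqlite3

def manager_plan(question: str) -> str:
    """Hypothetical manager LLM: turns the user question into a coder task."""
    return f"Write one SQL query on table 'orders' that answers: {question}"

def coder_write_sql(task: str) -> str:
    """Hypothetical coder LLM: returns SQL for the task (fixed in this sketch)."""
    return "SELECT order_id FROM orders WHERE status = 'open' ORDER BY order_id"

def manager_accepts(rows: list) -> bool:
    """Hypothetical acceptance check; a real manager LLM would judge relevance."""
    return len(rows) > 0

def manager_summarize(rows: list) -> str:
    """Hypothetical manager LLM: turns raw rows into a user-facing answer."""
    ids = ", ".join(str(row[0]) for row in rows)
    return f"There are {len(rows)} open orders: {ids}."

def answer_question(conn: sqlite3.Connection, question: str, max_rounds: int = 3) -> str:
    task = manager_plan(question)                # manager: plan
    for _ in range(max_rounds):
        sql = coder_write_sql(task)              # coder: generate code
        rows = conn.execute(sql).fetchall()      # coder: execute on the table
        if manager_accepts(rows):                # manager: review the result
            return manager_summarize(rows)       # manager: answer the user
        task += "\nThe previous result was not usable; please revise the query."
    return "Sorry, I could not find a reliable answer."

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, status TEXT)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, "open"), (2, "shipped"), (3, "open")])
reply = answer_question(conn, "Which orders are still open?")
print(reply)  # There are 2 open orders: 1, 3.
```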

Besides the individual role definition, the Agents’ system prompts need to be enriched with metadata such as general business context, the database schema, and an explanation of the names and content of all columns. In addition, business knowledge can be incorporated into the system by introducing a separate knowledge Agent. In general, providing enough context about the data to avoid ambiguity is crucial for guiding the Agents’ actions correctly and for efficient retrieval and analysis.
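One way to assemble such an enriched system prompt is to read the schema directly from the database and merge it with hand-written descriptions. In this sketch, `BUSINESS_CONTEXT`, `COLUMN_DOCS`, and the `orders` table are hypothetical; the schema is read with SQLite's `PRAGMA table_info`. Columns without a description are flagged explicitly, which makes gaps in the metadata visible instead of leaving the Agents to guess.

```python
import sqlite3

# Hypothetical metadata, maintained by people who know the data.
BUSINESS_CONTEXT = "We are a furniture retailer; 'orders' are customer orders awaiting shipment."
COLUMN_DOCS = {
    "orders.order_id": "Unique order number.",
    "orders.prio": "Shipping priority from 1 (low) to 9 (urgent).",
    "orders.eta": "Promised delivery date as ISO 8601 text (YYYY-MM-DD).",
}

def build_system_prompt(role: str, conn: sqlite3.Connection) -> str:
    """Combine a role definition with business context, schema, and column docs."""
    parts = [role, "", "Business context:", BUSINESS_CONTEXT, "", "Database schema:"]
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    for table in tables:
        parts.append(f"Table {table}:")
        # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk).
        for _cid, column, col_type, *_rest in conn.execute(f"PRAGMA table_info({table})"):
            doc = COLUMN_DOCS.get(f"{table}.{column}", "NO DESCRIPTION - please document.")
            parts.append(f"  - {column} ({col_type}): {doc}")
    return "\n".join(parts)

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, prio INTEGER, eta TEXT, cust_ref TEXT)")
prompt = build_system_prompt("You are the coder agent. Answer questions with SQL.", conn)
print(prompt)
```

Without the description, a column named `prio` could be read as "1 is most urgent" or "9 is most urgent"; the explicit text removes that ambiguity.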

Regarding the LLMs themselves, there are also different options. Both the latest proprietary models, such as OpenAI’s GPT models accessed via an API, and locally hosted open-source models can serve as the basis for the Agentic system.

The Right Degree of Flexibility

Another important factor in the design of the chat app is the trade-off between the flexibility of the system and its answer quality. Typically, a system that is less flexible in its inputs and in the choices its Agents can make produces more accurate answers, especially if data quality is poor. If data quality is high and the data is well described, a more flexible setup has two advantages: it can answer a wider range of user questions, and it can recover from coding errors without human correction. The right degree of flexibility therefore depends on the data quality and the use case.

For a very flexible system that accepts an unrestricted range of data questions as input, we need an Agentic team with many degrees of freedom, like the one depicted in Figure 2. In that case, the coder Agent needs to be able to flexibly generate Python code or SQL queries, possibly over multiple iterations, until the manager Agent accepts the code output for the final answer. The wide range of choices the system can make may hurt response time and answer quality, especially with low-quality and ambiguously described data.
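The ability to recover from coding errors usually comes from feeding execution errors back to the coder Agent. The sketch below assumes a hypothetical `coder_llm` stand-in whose first draft references a non-existent column and whose second draft, after receiving the database error, is correct. The `max_iterations` cap bounds how many extra LLM round-trips (and therefore how much extra response time) a single question can cost.

```python
import sqlite3
from typing import List, Optional, Tuple

def coder_llm(task: str, feedback: Optional[str]) -> str:
    """Hypothetical coder LLM stand-in.

    The first draft uses a column that does not exist; once the database error
    is fed back, it returns a corrected query. A real model would derive the
    fix from the error text and the schema.
    """
    if feedback is None:
        return "SELECT SUM(amount) FROM sales"
    return "SELECT SUM(revenue) FROM sales"

def run_with_retries(conn: sqlite3.Connection, task: str,
                     max_iterations: int = 3) -> Tuple[list, List[str]]:
    feedback: Optional[str] = None
    attempts: List[str] = []
    for _ in range(max_iterations):
        sql = coder_llm(task, feedback)
        attempts.append(sql)
        try:
            return conn.execute(sql).fetchall(), attempts
        except sqlite3.Error as exc:
            # Feed the error back so the next draft can correct it.
            feedback = f"Query failed with: {exc}"
    raise RuntimeError(f"No valid query after {max_iterations} attempts: {attempts}")

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (revenue REAL)")
conn.executemany("INSERT INTO sales VALUES (?)", [(10.0,), (15.5,)])
rows, attempts = run_with_retries(conn, "What is the total revenue?")
print(rows, len(attempts))  # [(25.5,)] 2
```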

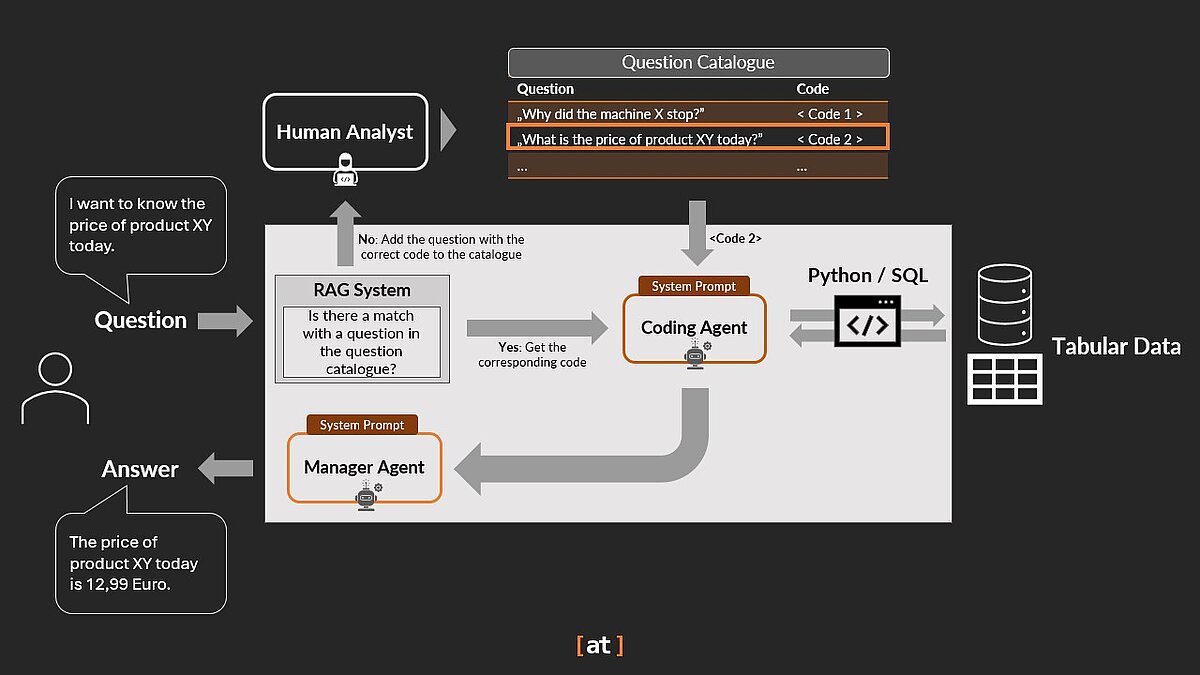

One way to improve answer quality is to limit the range of possible questions to simpler, more structured queries. Alternatively, the Agentic system can be made more deterministic by redesigning it so that it does not generate and execute its own code on the data. For such a solution, one would create a catalogue of the most frequently asked user questions together with corresponding human-written SQL or Python code snippets that retrieve the correct data. The catalogue questions would be embedded and stored in a vector database, as in a RAG system. Each new query is then compared to the embedded catalogue questions in the vector database.

If there is a good match, the coder Agent executes the SQL or Python code associated with the matching catalogue question, and the result is summarized and returned to the user as before. If the user question cannot be matched to an existing catalogue question, a human analyst receives a ticket and adds the new question together with the correct query code to the catalogue. In this way, the catalogue grows over time, gradually covering a broader range of user questions. The corresponding chat solution is illustrated in Figure 3.
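The catalogue mechanism can be sketched in a few lines. To keep the example self-contained, it swaps the embedding model and vector database for a plain bag-of-words cosine similarity; that substitution, the `QueryCatalogue` class, the threshold of 0.8, and the example queries are all assumptions for illustration. A production system would use a real embedding model, and the threshold would need tuning on real questions.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

def embed(text: str) -> Counter:
    """Stand-in for an embedding model: a bag-of-words term count."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

@dataclass
class QueryCatalogue:
    threshold: float = 0.8
    entries: List[Tuple[str, Counter, str]] = field(default_factory=list)  # (question, vector, code)
    tickets: List[str] = field(default_factory=list)  # unmatched questions for analysts

    def add(self, question: str, code: str) -> None:
        self.entries.append((question, embed(question), code))

    def lookup(self, question: str) -> Optional[str]:
        """Return the human-written code of the best match, or open a ticket."""
        vec = embed(question)
        scored = [(cosine(vec, v), code) for _q, v, code in self.entries]
        best_score, best_code = max(scored, default=(0.0, None))
        if best_code is not None and best_score >= self.threshold:
            return best_code
        self.tickets.append(question)
        return None

catalogue = QueryCatalogue(threshold=0.8)
catalogue.add("How many orders are open today?",
              "SELECT COUNT(*) FROM orders WHERE status = 'open'")

hit = catalogue.lookup("How many orders are still open today?")       # paraphrase: matched
miss = catalogue.lookup("Which sensor failed most often last month?")  # no match: ticket

# A human analyst resolves the ticket, and the catalogue grows.
catalogue.add(catalogue.tickets.pop(0),
              "SELECT sensor_id, COUNT(*) AS n FROM failures "
              "WHERE failed_on >= DATE('now', 'start of month', '-1 month') "
              "AND failed_on < DATE('now', 'start of month') "
              "GROUP BY sensor_id ORDER BY n DESC LIMIT 1")
later = catalogue.lookup("Which sensor failed most often last month?")
```

The matched code is only looked up here; in the setup described above, the coder Agent would then execute it and the result would be summarized for the user.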

Real-World Use Cases Across Industries

[at] has demonstrated the versatility of Agentic chat applications in use cases with clients from various sectors, where it opens new opportunities:

- Consumer Goods: Customers can directly inform themselves about different products by using an Agentic chat app that can query a tabular database in the background: “When will this version of the bed be available again?”

- Logistics: Supply chain teams can simply prioritize orders via chat, for instance, “What are the most important orders to send out today?”

- Manufacturing: Engineers can quickly trace back errors in the production line, e.g., by asking “Which sensor anomaly preceded the current production stop?” For a more detailed description, see our reference on quality management at an automobile manufacturer and our blog post about AI agents and sensor data.

- Transport: Administrators can analyze and learn from customer satisfaction data, e.g., with ad hoc questions such as “What was the most frequent complaint last week in Berlin?”
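To give a feel for what the coder Agent might produce for the transport example, here is a sketch with a hypothetical `complaints` table. Note that "last week" is itself ambiguous (the previous calendar week or the last seven days?); this sketch assumes the seven days before a reference date passed as `:today`, exactly the kind of interpretation that the system prompt's business context should pin down.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE complaints (created_on TEXT, city TEXT, category TEXT)")
conn.executemany("INSERT INTO complaints VALUES (?, ?, ?)", [
    ("2024-03-04", "Berlin", "delay"),
    ("2024-03-06", "Berlin", "delay"),
    ("2024-03-07", "Berlin", "cleanliness"),
    ("2024-03-07", "Hamburg", "cleanliness"),  # other city
    ("2024-02-20", "Berlin", "cleanliness"),   # older than last week
    ("2024-02-21", "Berlin", "cleanliness"),   # older than last week
])

# SQL a coder Agent might generate, assuming "last week" = the 7 days before :today.
QUERY = """
SELECT category, COUNT(*) AS n
FROM complaints
WHERE city = :city
  AND created_on >= DATE(:today, '-7 days')
  AND created_on < :today
GROUP BY category
ORDER BY n DESC
LIMIT 1
"""
top = conn.execute(QUERY, {"city": "Berlin", "today": "2024-03-11"}).fetchone()
print(top)  # ('delay', 2)
```

Without the date filter, "cleanliness" would come out on top for Berlin, so the interpretation of "last week" changes the answer.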

These examples highlight how an agentic chat application transforms how your business and customers interact with tabular data, saving time and empowering your teams to make better decisions.

The Added Value for Your Organization

The added value of an Agentic chat app for tabular data stems from its unique strengths and from the option to combine it with a RAG system for retrieving information from text. The flexibility of a chat application for tabular data is especially valuable in dynamic environments, where the initial conditions of a process frequently change—such as in handling customer requests or prioritizing shipments.

It also excels at supporting ad-hoc data queries and analyses, for instance, identifying errors or malfunctions in a production system based on sensor data or other operational metrics. When enriched with information from manuals or documentation, the app can even suggest potential solutions—providing valuable support to technical staff on the ground.

Moreover, for applications that make information available to an organization’s members, as well as for chatbots that answer customer questions, access to tabular data (often in addition to text from documents) can substantially improve service quality and response times.

To sum up, the added value of a chat-with-tabular-data application materializes in the following areas:

- Time Savings & Higher Customer Satisfaction: Reduced personnel time to answer employee or customer requests, while always being up-to-date with the latest data.

- Cost Savings: Lower personnel costs and shorter machine or system downtimes due to a faster tracing of errors.

- Quality Improvements: Assistance in improving processes, like the prioritization of shipments, to improve service quality and customer satisfaction.

- Improved Decision-Making: Faster access to tabular data insights allows for quicker and more informed decisions that increase efficiency.

Ensuring Trustworthy Operation Beyond Deployment

To ensure user-friendly, compliant, and reliable operation, integration and security are key when deploying your chat application for querying tabular data:

- Productive Use and Integration: Ensure that the application integrates seamlessly with existing business processes and IT infrastructure. In addition, continuously evaluate and monitor the performance of the Agentic system based on pairs of questions and human answers about the tabular data.

- Data Security & AI Safety: Run the Agentic system in an isolated (sub-)network to prevent leaking data to third parties or the internet. Moreover, you may choose to automatically check for vulnerabilities before letting the Agents execute any query or code.

- Data Privacy: The application should adhere to data protection regulations such as GDPR by implementing measures like role-based access control and data masking, which protect the data from unintended access. Database connection permissions should also be kept to a minimum: since the Agentic system may generate SQL queries that modify or delete data, restrict the agentic SQL user to read-only permissions to prevent unintended changes.

By implementing such measures, you can confidently deploy and operate the chat application while maintaining data privacy and security.
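For the continuous evaluation recommended under Productive Use and Integration, a minimal harness runs the chat app over pairs of questions and human answers and reports accuracy plus the failing questions. The metric used here (the answer must contain the human reference answer, ignoring case) is a deliberately simple assumption, and the canned chat app is a hypothetical stand-in for the deployed system.

```python
from typing import Callable, List, Tuple

def evaluate(chat_app: Callable[[str], str],
             gold: List[Tuple[str, str]]) -> Tuple[float, List[str]]:
    """Score a chat app against (question, human answer) pairs.

    An answer counts as correct if it contains the human reference answer,
    ignoring case (a simple assumption for this sketch).
    """
    failures = []
    for question, expected in gold:
        if expected.lower() not in chat_app(question).lower():
            failures.append(question)
    accuracy = (len(gold) - len(failures)) / len(gold)
    return accuracy, failures

# Hypothetical stand-in for the deployed chat app.
canned = {
    "How many orders are open?": "There are 12 open orders.",
    "Which product is out of stock?": "The bed is currently out of stock.",
    "What was the top complaint in Berlin last week?": "Most complaints were about cleanliness.",
}
gold = [
    ("How many orders are open?", "12"),
    ("Which product is out of stock?", "bed"),
    ("What was the top complaint in Berlin last week?", "delay"),
]
accuracy, failures = evaluate(lambda q: canned.get(q, ""), gold)
print(f"accuracy={accuracy:.2f}", failures)
```

Running such a check on a schedule, and after every change to prompts, models, or data, turns the list of failing questions into a concrete work queue.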

At [at], we draw on experience from 2,500 data and AI projects as a trusted partner covering the whole Data Journey, from Data Strategy to Data Science, Data Engineering, and DevOps / MLOps.

Conclusion

By combining natural language processing with intelligent query execution, Agentic chat applications make tabular data accessible for a variety of use cases in your organization. Whether it's augmenting automated customer support, enabling faster issue resolution for technical teams, or empowering stakeholders with instant insights, this technology opens the door to new levels of efficiency. Companies that are ready to embrace this innovation can gain a competitive edge, turning everyday data into a strategic asset for growth.

Are you ready to explore how this technology can create value in your organization? Let’s chat!
