Deep Learning: Simply Explained

- Author: [at] Editorial Team

- Category: Basics

Artificial intelligence (AI) has surged in popularity in recent years. Behind much of this hype lies a particularly powerful form of machine learning: deep learning.

In this article, we provide insight into this subfield of machine learning: We explain what deep learning is, the fundamental models and architectures behind it, and what it is used for.

What is Deep Learning?

Deep learning is a type of machine learning that employs artificial neural networks to build models and discover complicated patterns in data.

In contrast to traditional machine learning, where we often need to perform complicated feature engineering, deep learning is much better at automatically learning features and finding patterns in raw data. This makes deep learning very useful for tasks where we have a large amount of unstructured data, like text, audio, and images.

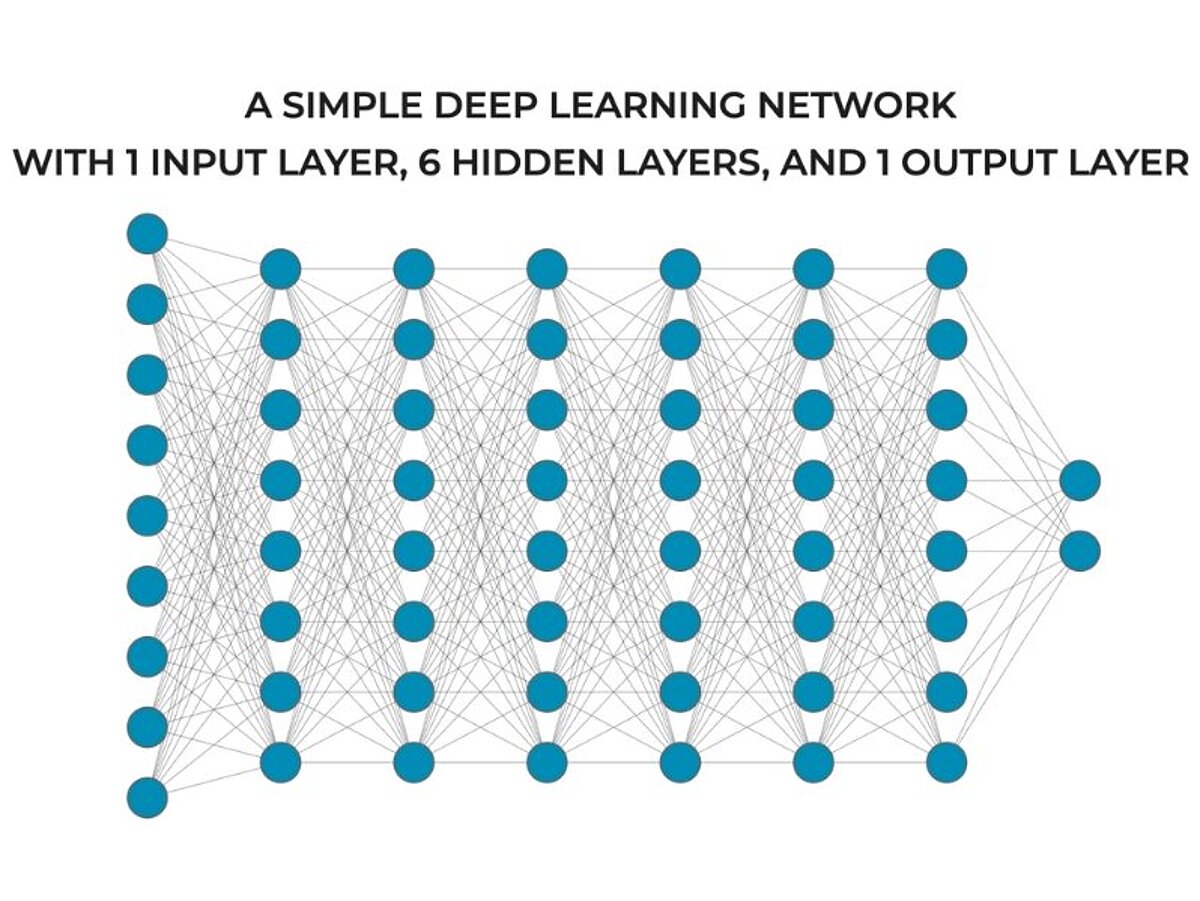

At its core, deep learning connects artificial neurons, also called nodes, into large networks with many layers, where every layer has many nodes. This layered structure enables deep neural networks to process data hierarchically: lower layers learn simple, general patterns in the data, and upper layers combine them into more nuanced and abstract patterns.
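As a rough illustration of a single artificial neuron, the sketch below computes a weighted sum of its inputs, adds a bias, and passes the result through a sigmoid activation. The function name `neuron`, the choice of sigmoid, and all numeric values are assumptions made for this example; in a real network the weights and bias are learned during training.

```python
import math

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, squashed by a sigmoid activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Illustrative values only: weights and bias would normally be learned.
activation = neuron(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0)
print(activation)  # 0.5, because the weighted sum here is exactly 0
```

A layer is simply many such neurons reading the same inputs, and a deep network feeds the outputs of one layer into the next.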

This hierarchical data processing enables deep neural networks to learn much more complex patterns in large datasets. In fact, the more layers a deep network has, the more complex the patterns it can typically discover. This is where the word "deep" comes from: it refers to the relatively large number of stacked layers compared to earlier neural networks. Deep neural networks excel in a variety of areas like natural language processing (NLP), computer vision, and autonomous systems. Deep learning also forms the basis of many revolutionary AI systems like ChatGPT and similar generative text systems, as well as autonomous vehicles.

But in spite of the power of this technique, it does have challenges. Deep learning typically requires very large datasets during training, as well as vast computational resources. These data and compute requirements are often very costly.

Deep Learning vs Artificial Intelligence vs Machine Learning

Artificial intelligence (AI) is the umbrella term for all systems that attempt to replicate human intelligence – for example, in learning, reasoning, or problem-solving.

Machine learning (ML) is a subset of this: here, systems learn by being trained with data, thereby improving their performance in specific tasks.

Deep learning (DL) is a specific approach within machine learning. It uses deep neural networks to recognize highly complex patterns in large, mostly unstructured data sets – for example, in language processing or autonomous driving.

Compared to traditional ML methods, deep learning can handle such highly complex tasks much better – provided that sufficient data and computing power are available.

Deep Learning vs Reinforcement Learning

Deep learning and reinforcement learning are two different tools in the machine learning ecosystem. As mentioned above, deep learning uses an architecture of deep stacks of neurons, and this architecture ultimately allows deep learning models to learn complex patterns in large datasets.

Reinforcement learning, on the other hand, is a technique that we use to train machine learning systems using feedback from an environment (e.g., a real-world environment, a digital "environment," etc.). In reinforcement learning, the system learns to make better decisions over time through trial and error: it interacts with the environment and receives rewards and penalties for its actions.
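The trial-and-error loop described above can be sketched with a toy two-action problem. Everything here is hypothetical: the `pull` environment (action 1 yields a reward, action 0 a penalty), the epsilon-greedy exploration rule, and the parameter values are assumptions chosen to keep the example small, not a description of any particular system.

```python
import random

def pull(action):
    """Hypothetical environment: action 1 earns a reward, action 0 a penalty."""
    return 1.0 if action == 1 else -1.0

def train(steps=100, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    values = [0.0, 0.0]   # estimated reward for each action
    counts = [0, 0]       # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(2)                        # explore: try a random action
        else:
            action = max(range(2), key=lambda a: values[a])  # exploit: best estimate so far
        reward = pull(action)
        counts[action] += 1
        # Running average of the rewards observed for this action.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts

values, counts = train()
print(values, counts)
```

After a few interactions the agent's estimate for the rewarded action is higher, so it chooses that action most of the time. No deep network is involved here; deep reinforcement learning replaces the small `values` table with a neural network.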

So while deep learning is best understood as a machine learning architecture, reinforcement learning is best understood as a training paradigm. Having said that, there is a type of machine learning called deep reinforcement learning that combines these two methods.

Deep Learning Models and Architectures

Broadly speaking, deep neural networks are networks of artificial neurons organized in a hierarchical structure. In practice, however, there are a variety of specific architectures for deep networks, and these architectures serve different purposes.

Four of the most important architectures are:

- vanilla deep feedforward networks

- convolutional neural networks

- recurrent neural networks

- transformers

Vanilla Deep Feedforward Networks

A vanilla deep feedforward network is the most traditional deep neural network. These networks consist of multiple layers of neurons, and in this architecture, the information only flows one way: forward from the lower layers to the upper layers.

In a vanilla deep network, every neuron of a layer also connects to every neuron of the next layer, making these networks "fully connected".
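A minimal sketch of this one-way, fully connected flow is shown below. The helper names (`dense`, `feedforward`), the ReLU activation on the hidden layer, and the hand-picked parameters are assumptions for illustration; real networks learn their parameters and are usually built with a deep learning library.

```python
def relu(values):
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """Fully connected layer: every output neuron reads every input value."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def feedforward(inputs, layers):
    """Pass the inputs through each layer in order; nothing flows backwards."""
    activations = inputs
    for i, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if i < len(layers) - 1:  # ReLU on hidden layers, raw values at the output
            activations = relu(activations)
    return activations

# Hypothetical parameters: 2 inputs -> 2 hidden neurons -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[2.0, 1.0]], [0.5]),
]
prediction = feedforward([1.0, 2.0], layers)
print(prediction)  # [2.0]
```

Each row of a weight matrix belongs to one neuron and holds one weight per input, which is what "fully connected" means in practice.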

Vanilla deep networks are extremely versatile, and with a few modifications, can perform a variety of tasks like classification, regression, anomaly detection, and feature extraction.

Importantly, when used alone, vanilla deep feedforward networks typically operate on structured data (i.e., tabular data), but we can also use them as components in other architectures (like the Transformer architecture discussed below).

Convolutional Neural Networks

Convolutional neural networks (CNNs) use layers of neurons to detect spatial patterns, typically in grid-like data such as images.

Initial layers of a CNN typically identify simple patterns like lines with a specific orientation, corners, and other spatial features. When we stack these layers, later layers can combine the simple patterns into more complex ones.
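To make the idea of detecting a simple spatial pattern concrete, the sketch below slides a small filter over a tiny image. The function name `conv2d`, the 4x4 image, and the hand-written vertical-edge filter are assumptions for this example; in a trained CNN the filter values are learned. As in most deep learning libraries, the operation shown is technically a cross-correlation (the filter is not flipped).

```python
def conv2d(image, kernel):
    """Slide the kernel over every position where it fully fits (stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[a][b] * image[i + a][j + b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Tiny image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1] for _ in range(4)]

# Hand-written filter that responds to a dark-to-bright vertical edge.
vertical_edge = [[-1, 1],
                 [-1, 1]]

feature_map = conv2d(image, vertical_edge)
for row in feature_map:
    print(row)  # each row is [0, 2, 0]: the filter fires only at the edge
```

The same small filter is reused at every position, which is why a convolutional layer needs far fewer parameters than a fully connected layer over the same image.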

Due to their usefulness with image data, we often use CNNs for tasks like image classification, medical imaging, and object detection systems.

Recurrent Neural Networks

We use recurrent neural networks (RNNs) for sequential data, like time series data and text data. In this type of data, the order (i.e., the sequence) of the data matters, and data at any given point might depend heavily on data at a previous point in the sequence (again: like text data).

To handle this type of sequential data with dependencies across time, recurrent neural networks include recurrent connections that feed the network's state from the previous step back into the network at the current step, along with other types of feedback loops. These feedback connections allow the model to learn dependencies and relationships between elements in a sequence.
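The recurrence can be sketched with a single hidden value that is updated at every step of the sequence. The scalar state, the tanh activation, and the parameter values `w_x`, `w_h`, and `b` are simplifying assumptions for this example; real RNNs use vectors and learned weight matrices.

```python
import math

def run_rnn(sequence, w_x=1.0, w_h=0.5, b=0.0):
    """Return the final hidden state after reading the sequence step by step."""
    h = 0.0  # initial hidden state
    for x in sequence:
        # The new state depends on the current input AND the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
    return h

state_a = run_rnn([1.0, 0.0])
state_b = run_rnn([0.0, 1.0])
print(state_a, state_b)  # same values in a different order give different states
```

Because the previous state feeds into each update, the network's output depends on the order of the inputs, not only on which inputs it saw.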

Specialized RNN variations like Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) are designed to mitigate problems in simple recurrent neural networks, such as vanishing gradients.
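The vanishing-gradient problem can be illustrated with a toy calculation. The sketch below assumes a scalar RNN with no inputs, `h_t = tanh(w_h * h_{t-1})`, and applies the chain rule to measure how strongly the final state depends on the initial one. The function name and parameter values are hypothetical.

```python
import math

def gradient_through_time(steps, w_h=0.5, h0=0.5):
    """Derivative of the final state with respect to the initial state h0."""
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w_h * h)
        # Chain rule: d tanh(w_h * h_prev) / d h_prev = w_h * (1 - h_new ** 2)
        grad *= w_h * (1.0 - h * h)
    return grad

for steps in (1, 5, 20):
    print(steps, gradient_through_time(steps))
```

Each step multiplies the gradient by a factor of at most 0.5 in this setup, so after 20 steps it is below one millionth. Early elements of a long sequence then barely influence learning, which is the problem that gated architectures like LSTMs and GRUs are designed to reduce.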

The ability of RNNs to model dependencies in sequential data – which arises from the recurrent structure – makes them very useful for tasks like natural language processing and financial modeling.

Transformers

The Transformer architecture is one of the most important architectures in machine learning as of the time of writing this article, because Transformers form the basis of GPT systems and modern generative text models.

Like RNNs, Transformers work exceptionally well on sequential data, and text data in particular. But Transformers replace the recurrent structure of RNNs with a special architectural unit called the attention unit.

The attention unit (also known as the attention mechanism) of Transformers specializes in learning contextual relationships between elements in a sequence. When organized into the larger Transformer architecture (which includes other units like deep feedforward networks) and trained on very large amounts of data, attention enables Transformers to learn complex grammatical relationships in text data, which in turn enables them to understand and generate human language.
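A common formulation of attention is scaled dot-product attention, sketched below in plain Python. This is a simplified illustration under stated assumptions: the token vectors are made up, and the same vectors serve as queries, keys, and values, whereas a real Transformer first applies learned projections and uses several attention heads in parallel.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Each query gets a weighted average of the values, weighted by query-key similarity."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three hypothetical token vectors attending to each other (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])  # each row sums to 1
```

Every token can look at every other token in a single step, so no recurrence is needed to relate distant elements of the sequence.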

As such, Transformers perform extremely well in natural language processing (NLP) tasks like question-answering, text summarization, text generation, and translation.

Other Architectures

Although the architectures discussed above are some of the most important deep learning architectures, there are many other architectures that use deep networks, including generative adversarial networks, graph neural networks, capsule networks, and more.

Advantages and Challenges

Deep learning is a powerful tool for a variety of tasks. But although it has several advantages over other techniques, it also has weaknesses.

Advantages of Deep Learning

Let's first take a look at what makes deep learning so powerful and, in many cases, superior to other approaches.

Automated Feature Extraction

Deep learning models have the ability to automatically learn complex features from raw input data.

This can eliminate the need for manual feature engineering, and is particularly useful with unstructured data types like text, audio files, and image data.

High Performance on Complex Tasks

Deep learning models often achieve high performance on complicated or difficult tasks like image recognition, game playing, and NLP.

This high performance on difficult tasks emerges from the ability of deep learning models to model complex or non-linear relationships in input datasets.
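A classic small example of a non-linear relationship is XOR, where the output is 1 only if exactly one of two inputs is 1. No single linear model can reproduce it, but a network with one hidden layer can. The weights below are hand-picked for illustration rather than learned, and the function name `xor_network` is hypothetical.

```python
def relu(z):
    return max(0.0, z)

def xor_network(x1, x2):
    """Two hidden ReLU neurons followed by a linear output neuron."""
    h1 = relu(x1 + x2)         # counts how many inputs are on
    h2 = relu(x1 + x2 - 1.0)   # fires only when both inputs are on
    return h1 - 2.0 * h2       # cancels the output when both are on

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_network(a, b))
```

The non-linear activation in the hidden layer is what makes this possible; without it, stacked layers would collapse into a single linear function.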

Scales with Large Datasets

Deep learning systems often improve their performance as you increase the size of the dataset (i.e., more examples). This makes them particularly useful in domains with high volumes of data.

Moreover, this scalability is further supported by advances in compute hardware, such as GPUs and TPUs.

Versatility Across Domains

Deep learning is versatile and can be used across a wide range of fields, like healthcare, robotics, linguistics, entertainment, and finance.

This versatility is enhanced by specialized architectures for specific types of tasks and data, as noted above: for example, convolutional neural nets for image data.

Challenges of Deep Learning

But although deep learning has many strengths, it comes with some inherent challenges and weaknesses.

High Computational Requirements

Training deep learning systems often requires substantial computational power, memory, and other hardware.

For example, training deep neural networks often requires expensive GPUs and lots of RAM.

The expense of some of this hardware (particularly now, with the rise in popularity of generative AI) can create a barrier for firms with limited financial resources.

Need for Large Datasets

Although deep learning systems can learn complex relationships in input features, this typically requires large labeled datasets during the training process.

These large datasets can be difficult to acquire, and the labeling of these datasets often creates an additional challenge.

Training deep neural nets with small datasets can lead to overfitting, and therefore to poor performance on new, unseen data.

Difficult to Interpret

It's often difficult, if not impossible, to know exactly how deep learning systems are processing their input data; they're typically considered to be “black boxes.” This is in contrast to systems that are easy to interpret (like decision trees).

The difficulty with interpreting these systems can create challenges in situations where it's important to be able to explain how the system works. This can even create legal issues, if it's unclear whether the model is processing data in a way that violates certain laws (e.g., financial discrimination laws in the USA).

Risk of Overfitting

The ability to learn complex patterns inherently comes with a risk. The higher the "capacity" of a model (i.e., the ability to model complex relationships), the greater the risk of overfitting.

This risk increases when you train deep learning systems with small datasets or imbalanced data.

Because of this risk of overfitting, deep learning practitioners often need to train these models with great care, and they often need to use regularization techniques as well as careful model validation.
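One widely used safeguard of this kind is early stopping: training halts once the loss on a held-out validation set stops improving. The sketch below is a minimal version under stated assumptions; the function name, the `patience` parameter, and the loss values are made up for illustration and do not come from a real training run.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (best_epoch, stop_epoch) given one validation loss per epoch."""
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch  # new best: remember it
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch             # no improvement for `patience` epochs
    return best_epoch, len(val_losses) - 1       # never triggered: ran to the end

# Hypothetical losses: they improve at first, then rise as the model starts to overfit.
val_losses = [1.00, 0.80, 0.70, 0.75, 0.90, 0.95]
best, stopped = early_stopping_epoch(val_losses)
print(best, stopped)  # 2 4
```

In practice the model weights from the best epoch are kept, and early stopping is often combined with other regularization techniques such as weight decay or dropout.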

Applications and Use Cases of Deep Learning

Deep learning is a powerful machine learning technique, with a wide range of uses and applications.

Computer Vision

Deep learning has driven a revolution in a variety of computer vision tasks like facial recognition, object detection, and image classification.

In particular, convolutional neural networks (described above) are able to process and classify images with very high accuracy, which in turn enables more complex applications like medical imaging and diagnostics, and autonomous vehicles.

Natural Language Processing (NLP)

Deep neural nets have also revolutionized natural language processing, as deep nets form the backbone of most modern NLP systems.

These deep learning-based NLP systems (including Transformer-based models such as BERT) are able to perform complex tasks like language-to-language translation, text generation, sentiment analysis, and more.

In turn, the power of these new deep learning-driven NLP systems is enabling a variety of higher-order applications like chatbots, virtual assistants, agents, and enhanced search.

Autonomous Systems

New drone systems and autonomous vehicles often employ deep learning to process the data from onboard sensors in order to make real-time decisions about how to operate.

These systems use deep learning in a variety of ways, like computer vision, object tracking, and trajectory prediction. This allows autonomous systems to navigate and make decisions in complex environments.

Further, these autonomous systems have downstream uses in industries like transportation, agriculture, and logistics.

Predictive Analytics and Recommendation Systems

In business, entertainment, and healthcare, deep learning-based systems can process user data and provide highly personalized recommendations.

For example, platforms like Amazon, Netflix, and Spotify all use deep learning-based systems to predict the best content to recommend to users. In turn, this enhances user engagement and drives revenue.
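One common way to build such a recommender, sketched here purely as an assumption and not as a description of how any of these platforms work, is to represent users and items as learned vectors (embeddings) and rank items by how well they match the user. The vectors, item names, and the `recommend` helper below are all hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def recommend(user_vector, item_vectors, top_k=2):
    """Rank items by the dot product between the user vector and each item vector."""
    ranked = sorted(item_vectors,
                    key=lambda name: dot(user_vector, item_vectors[name]),
                    reverse=True)
    return ranked[:top_k]

# Hypothetical 2-dimensional embeddings: (affinity for sci-fi, affinity for romance).
user = [0.9, 0.1]
items = {
    "space_saga": [1.0, 0.0],
    "love_story": [0.0, 1.0],
    "alien_romance": [0.6, 0.6],
}
print(recommend(user, items))  # ['space_saga', 'alien_romance']
```

In a deep learning-based system, the embeddings themselves would be produced by a neural network trained on user interaction data.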

Other Applications

We've really just scratched the surface here, in terms of applications. Deep learning has a wide range of other applications in areas like finance (fraud detection), generative AI for entertainment, speech recognition and synthesis, and many other areas.

Moreover, this is an evolving field, and new uses are being discovered and created regularly.

Conclusion

Deep learning has taken machine learning to a new level in many ways – especially when it comes to complex patterns in large, unstructured data sets. The technology is behind many of the most advanced AI systems currently available, such as those used in language processing and generative AI.

Despite its impressive achievements, however, deep learning is not a panacea: the costs of data, computing power, and model maintenance are high, and the risks, such as overfitting, are real. The method is therefore powerful, but not without challenges.
