Model Context Protocol in the Spotlight

A New Standard for Connecting Services to LLM’s

- Published:

- Author: Dr. Bert Besser, Dr. Johannes Nagele

- Category: Deep Dive

Table of Contents

The Model Context Protocol (MCP), introduced in November 2024 by Anthropic, is a dedicated effort to advance LLM application architectures by integrating third party servers and external data, MCP aims to simplify and standardize application architectures, making it easier to connect with external data sources and third-party services. A new and widely adopted standard lets the available choice of sources and services flourish.

This article provides a high-level overview of MCP, making its key concepts and overall approach easy to grasp. Using clear and simple language, we will also address one technical angle: security risks and potential attack surfaces associated with MCP.

We’ll start by breaking down how MCP applications are structured, take a closer look at two of its core capabilities (function calls and data resources) and finish with a discussion of the main security challenges developers should keep in mind.

Model Context Protocol Architecture

A 10,000 Feet View

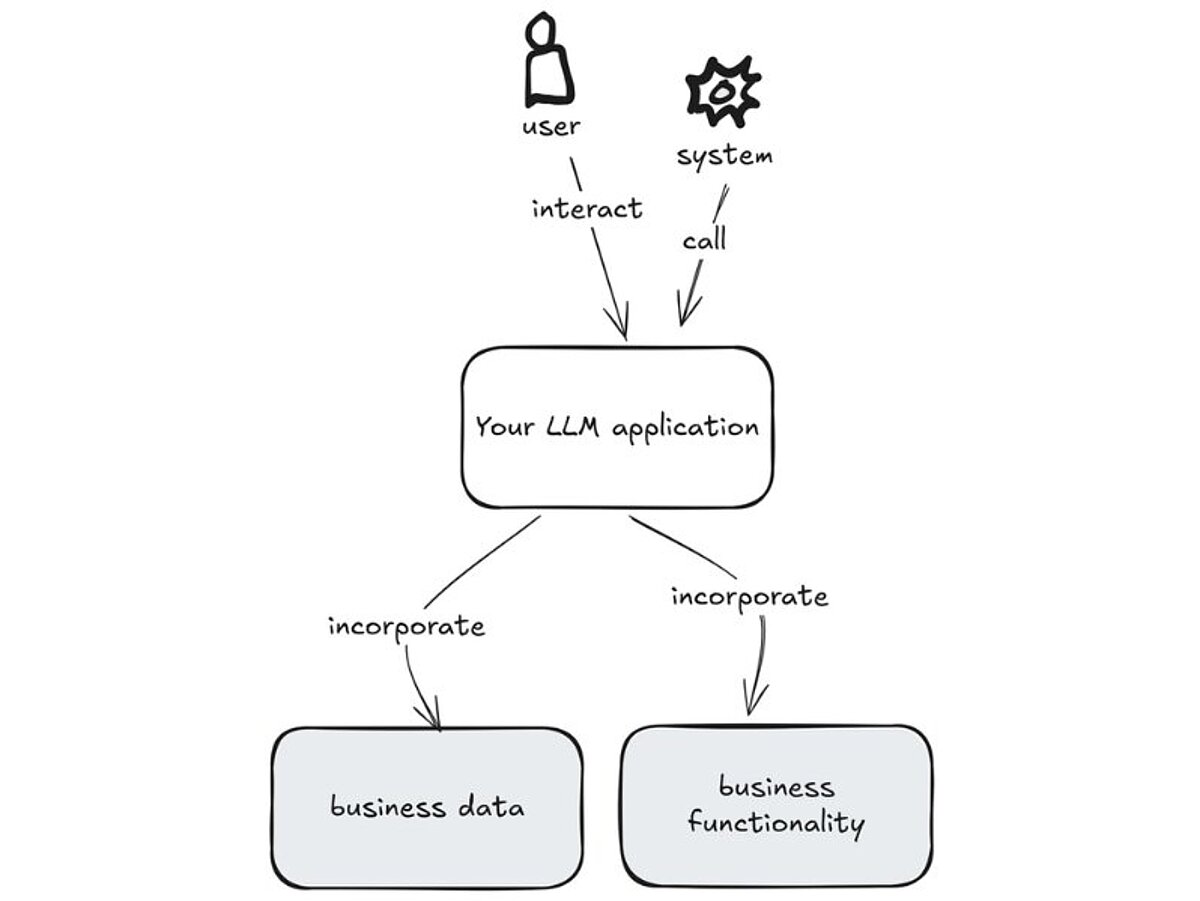

Connect your LLM application to business functions and data using a standardized approach – that is what the Model Context Protocol (MCP) enables. MCP represents a significant step toward making this integration easier and more consistent.

The next figure gives a simple, high-level overview of MCP. Each following figure then adds another layer of detail, gradually “zooming in” on how it works.

A 1.000 Feet View

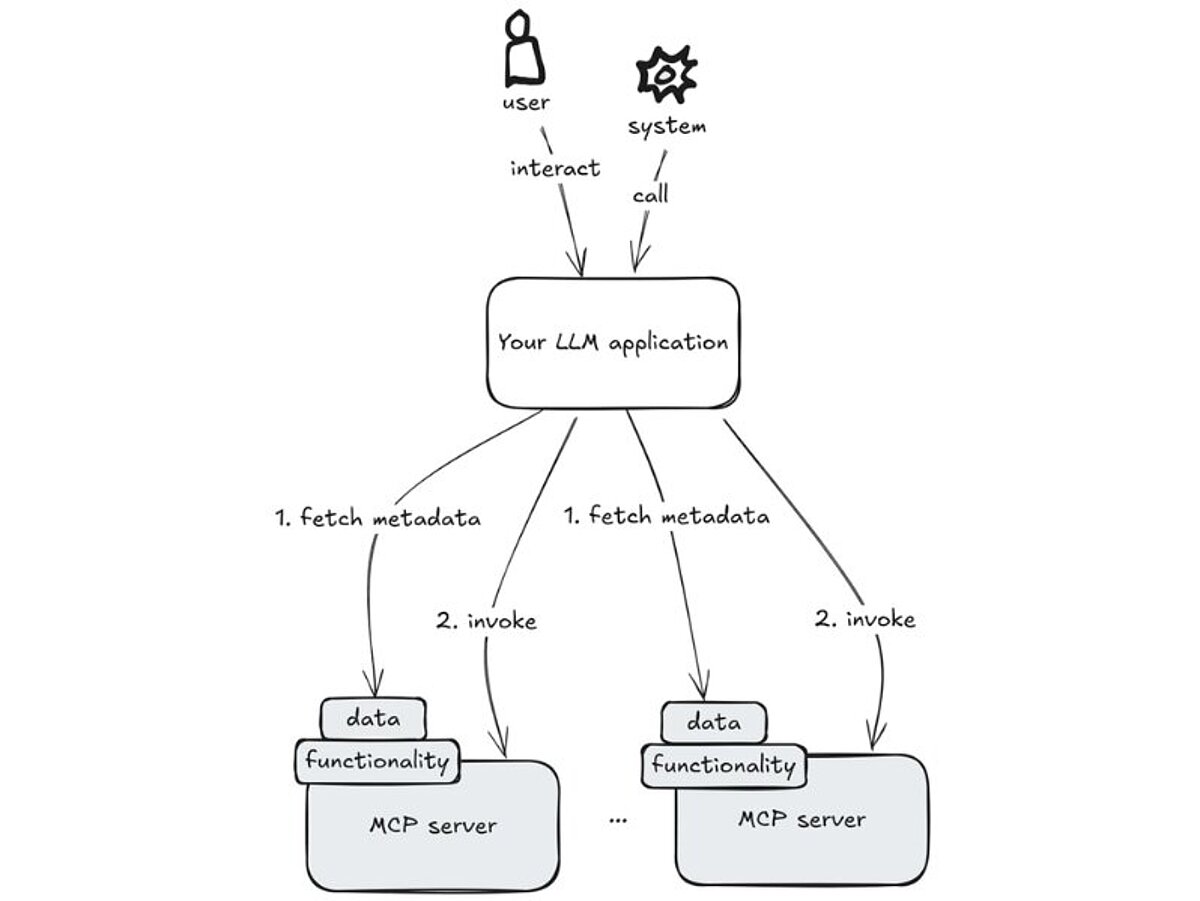

Your LLM application does not just call LLM APIs. It also interacts with additional third party (or custom) servers, gathers (text) data from all of them, and orchestrates these calls to generate a meaningful, unified response. In particular,

- The application implementor (i.e., you) controls what additional servers to allow.

- Each additional server exposes metadata about the provided data and functionality.

- Your application invokes the additional servers as needed to trigger functionality and receive their results, and to retrieve data. Which data or functionality to invoke is guided by metadata (i.e., you don’t have to explicitly implement the choice or calls).

In a way, you transfer some control of what functions and data to “invoke” to your application and have to design your application’s prompts and other moving parts to guide this autonomous behavior in the desired direction.

A 100 Feet View

Under the hood, your application will instrument LLMs not only with the task at hand but will also ask them to select some data or functionality to call (if any). This decision is implemented by providing the LLM with metadata fetched from the servers, i.e. metadata about available data and functionality. If the LLM decides “yes”, your application will conduct the actual selected invocation “on behalf of the LLM”, receive either data or a function result, and pass all that back to the LLM. (Note that the LLM retains the previous conversation history in the prompt context.) What the LLM responds next may either be the final answer of your application, and is returned to the user, or it will start “another round” of invoking servers.

A sample application to experience and test-drive this behavior is Claude desktop.

All this is readily implemented in MCP SDKs such as the open-source reference implementation in Python, hence your focus can be on providing suitable prompts and servers exposing suitable data and functionality.

This article does not discuss specifics of available SDKs or give code samples. Focus is on insights in how MCP is targeted to extend the technical and organizational fundamentals of how LLM applications will work worldwide in the future. If you are interested to learn more about the technical aspects of MCP, such as protocol layers and lifecycles, this article provides detailed insights.

Applications and use cases

As the Model Context Protocol (MCP) standard is still in its early stages of evolution and related developments are very fast-paced, there is limited enterprise-grade application experience available. Further, recent security findings suggest that applicability is still restricted. Despite all this, MCP gains much traction, as can be seen for example from Microsoft announcing to create a C# SDK or OpenAI as well as Google (MCP Toolbox for Databases (formerly Gen AI Toolbox for Databases) now supports Model Context Protocol (MCP) | Google Cloud Blog) and IBM (Unleashing the productivity revolution with AI agents that work across your entire stack) as well.

As MCP adoption grows, understanding its potential applications becomes increasingly important. The following section offers an overview of key use cases and application areas.

Put simply, MCP acts as a middle layer between your application and any REST APIs, making it easier to integrate business servers into LLM applications. Hence, you can have your application interact with:

- JIRA,

- Hubspot,

- AI agents,

- model registries,

- time trackers,

- chat platforms,

- container orchestrators,

- integration automation platforms,

- notification services,

- document stores,

- databases,

- code repositories,

- wikis,

- anything that exposes a REST interface. (There is even experimental MCP servers meant to do just that—add MCP support to any REST service).

Additionally, an MCP server exposes so-called resources, representing business data in both textual and binary formats, such as database entries, documents, images, or PDFs. Your application will fetch the metadata about available resources from an MCP server as needed to respond to the given task.

Dynamic Functionality and Resources

The data an MCP server provides can change over time, as it typically reflects the current state of a system. For example, a chat app might use MCP to look up recent alarms from a database or to search for staff with specific skills, including newly onboarded team members.

Example Use Case

An example use case for an LLM application using MCP servers is enhancing an automated workflow, such as in a CRM, by adding a step that provides human service agents with pre-generated replies to incoming customer inquiries. In this scenario, an MCP server might retrieve the customer's recent communication history from a NoSQL document store. To evaluate the solution’s impact, metrics like operational efficiency or output relevance could be used.

Security Concerns

Of course, the typical and widespread security concerns apply to the functionality and data exposed by MCP servers: Access control, encryption, audits, rate limits, and generally keeping the attack surface as small as possible.

There are also newer types of security concerns, mainly focused on tricking LLMs into unintended behavior. While these risks aren't unique to MCP, its growing adoption increases the overall attack surface. One such attack is called tool poisoning where an MCP server exposes fraudulent metadata. The metadata about available tools is sent to the LLM and should allow it to choose the adequate one. This text description can contain malicious instructions. An example is to have an LLM application expose sensitive data: The authors tricked the Cursor IDE agent to send security keys to the attacker. Another example is an MCP WhatsApp server sending private information to an unknown phone number (from the attacker).

Authorization in MCP servers also presents a potential attack surface, as highlighted in this article, and will need to be strengthened for widespread enterprise use.

Conclusion

MCP enjoys rapid adoption, as can be seen with OpenAI integrating MCP support in their SDK. It is likely that MCP is bound to be a game-changer in the architecture of LLM applications, and investing in analysis of its potential applications for your business is advised. However, employing it in unknown environments, using un-trusted/un-audited MCP servers, is a security concern. Custom MCP servers that do not alter system state but only extract or annotate with additional metadata, together with LLM applications of restricted blast radius, are a possible path forward in analysis and possible adoption.

Other new “Agentic Protocols” also emerge, like ACP or A2A, and their adoption compared with MCP will have to be monitored and evaluated. New protocols are tailored to different needs, like linking AI Agents to resources, enabling Agents to interact with each other, or connecting them with humans. In the future, choosing a protocol will not be an “either-or” decision, but will depend on the goals and circumstances at hand, as Figure 4 in this survey suggests.

Share this post: