The magic of the machine

People can usually explain their own actions well: we drive through the traffic light because it is green. If we want an explanation for another person's action, we usually just ask. Our introspective experience of our own bodies, together with observation of and interaction with our environment, allows us to understand and communicate our inner motivations. The reasons behind the decision of an artificial intelligence (AI), on the other hand, are much harder to comprehend. An AI model often arrives at a result for entirely different reasons than a human would.

The information about why an algorithm decides the way it does is often buried in an incredibly complex and, for humans, initially opaque game of binary yes-no decisions. Making this game comprehensible and describable is an important challenge for users and developers of AI models, because AI applications are becoming an ever larger part of our everyday lives.

Especially for users without deeper background knowledge of how AI models work, the decisions of these models can seem almost magical. This is probably one of the reasons why many people not only distrust AI, but also have a certain aversion to it. Improving the interpretability of AI models must therefore be an essential part of the future of these technologies.

Reliable systems must be explainable

Especially in safety-critical AI applications, being able to understand algorithmic decisions is enormously important, for legal and ethical reasons alone. One only needs to think of autonomous driving, credit scoring or medical applications: which factors lead to which result, and is that the way we want it? For example, we do not want people to be denied a loan because of their origin, or someone to be run over by a self-driving car because the system decided for the wrong reasons. Even for less critical decisions, the interpretability of the model plays a role, as already described: whether to create trust in the model's predictions or simply to find out why a forecast has the value it has.

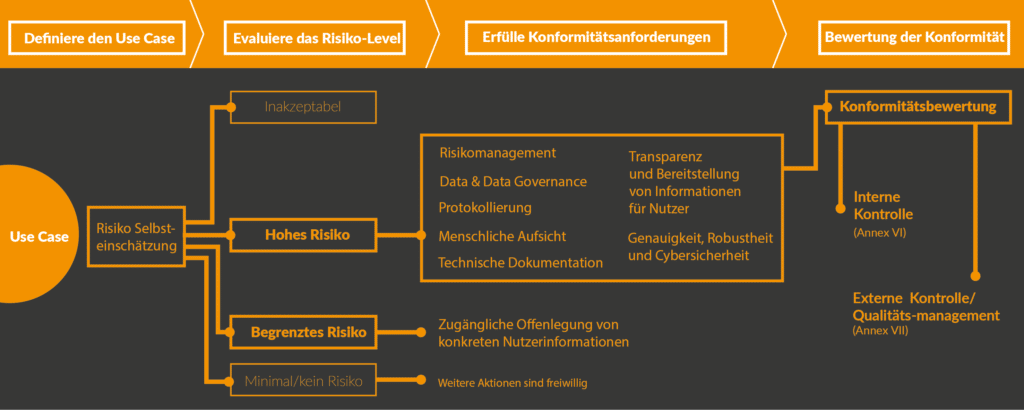

Therefore, AI-based systems are subject to the same basic rules as any other software system, in order to avoid disinformation and discrimination and to ensure the protection of personal data. The European Union is discussing a complementary draft law for the specific assessment and regulation of AI systems - the AI Act. The draft, which is expected to come into force in 2025, provides for AI systems to be classified into four categories: no risk, low risk, high risk and unacceptable risk. For businesses in many industries, using AI means using high-risk systems: all applications that affect public safety or human health are considered high-risk here - and the definition is broad.

Managing and evaluating AI use cases in one's own company is time-consuming, especially if AI is used in production. Clear rules and test procedures are lacking. A use case management tool such as Casebase can help minimise risks such as the following:

- Unsupervised AI applications in operation

- Undocumented AI use cases

- High or unacceptable risk systems not on the radar

- Penalties by the supervisory authorities

- Reputational damage

Requirements such as detailed documentation, appropriate risk assessment and mitigation, and supervisory measures are indispensable if models are to remain explainable in the long term. Only in this way can the high requirements for AI use cases be met.

The Black Box

Deep learning in particular is sometimes referred to as a black box. A black box is a system whose inputs and outputs we know, but whose internal process we do not. This can be illustrated quite simply: a child trying to open a door has to push down the door handle (input) to move the latch (output). In doing so, however, the child does not see how the locking mechanism inside the door works. The locking mechanism is a black box. Only by shining a light inside does the child have a chance to see how it works.

Why Explainable AI?

Actually, we should be used to dealing with systems that are not transparent from the outside: we do so in many situations in life. An almost infinite complexity surrounds us constantly. We pick an apple from the tree, although we do not understand exactly which molecule interacts with which during its growth. So it seems that a black box in itself is not a problem. But it is not that simple, because over the course of our evolutionary history we humans have learned, through a lot of trial and error, what we can trust sufficiently and what we cannot. If an apple meets certain visual criteria and also smells like an apple, we trust the product of the apple tree. But what about AI?

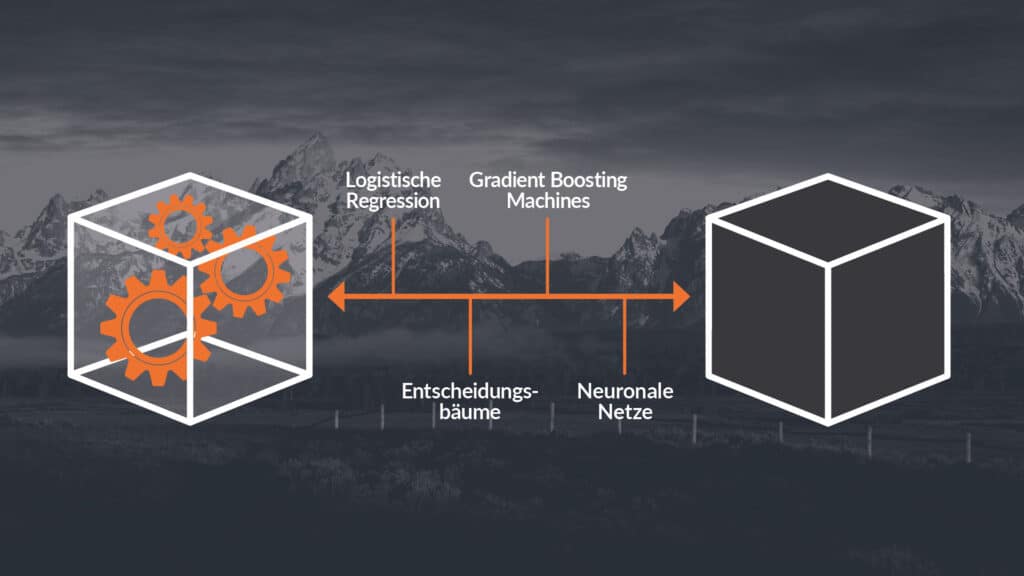

We cannot always draw on millennia of experience. Nor can we always intervene in the selection of inputs to find out how they influence the output (less water -> smaller apple). And as soon as we are dealing with an AI system to which we often provide input only unconsciously, things get trickier: users of AI systems that are already running can only try to explain how the system makes its decisions from a limited number of its past decisions. Which parameters and factors play a role can only be tested or estimated at great expense - this is where Explainable AI (XAI) comes into play.

XAI describes a growing number of methods and techniques for making complex AI systems explainable. Explainable AI is not a new topic: there have been efforts in the field of explainability since the 1980s. However, the steadily growing number of application areas and the progressive establishment of ML methods have significantly increased the importance of this research area.

How promising these methods are depends on the algorithms used. A model based on linear regression, for example, is much easier to explain than an artificial neural network. Nevertheless, the XAI toolbox also contains methods for making such complex models more explainable.
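To illustrate why a linear model is comparatively easy to explain, here is a minimal sketch that picks up the apple example. The feature names, weights and bias are purely illustrative assumptions, not fitted values from any real data set. The point is only that every prediction of a linear model can be split exactly into one additive contribution per input, something a neural network does not offer out of the box.

```python
# Hypothetical linear model for the weight of an apple in grams.
# BIAS, WEIGHTS and the feature names are illustrative assumptions.
BIAS = 50.0
WEIGHTS = {"water_litres_per_week": 4.0, "sun_hours_per_day": 10.0}


def predict(features: dict[str, float]) -> float:
    """Return the prediction of the linear model: bias + sum(weight * value)."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in features.items())


def explain(features: dict[str, float]) -> dict[str, float]:
    """Split a prediction into one additive contribution per feature."""
    return {name: WEIGHTS[name] * value for name, value in features.items()}


apple = {"water_litres_per_week": 20.0, "sun_hours_per_day": 6.0}
prediction = predict(apple)
contributions = explain(apple)

print(f"prediction: {prediction:.1f} g (of which bias: {BIAS:.1f} g)")
for name, contribution in contributions.items():
    print(f"  {name}: {contribution:+.1f} g")
```

The bias plus the listed contributions adds up exactly to the prediction, so the explanation is complete by construction. For non-linear models, such a decomposition has to be approximated by dedicated XAI methods.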

Progress on the hardware side also makes explanatory methods far more accessible. Today, testing thousands of explanations or performing numerous interventions to understand the behaviour of a model is no longer as time-consuming as it was twenty years ago.
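One common intervention-based technique is permutation feature importance: shuffle one input column, query the model again and measure how much the error grows. The following is a minimal sketch using only the Python standard library. The `black_box` function is a hypothetical stand-in for an opaque model, and, as a simplifying assumption, the model's own outputs serve as targets so that the baseline error is zero.

```python
import random


def black_box(row: list[float]) -> float:
    """Hypothetical opaque model; we pretend we can only call it."""
    water, sun, _unused = row
    return 3.0 * water + 0.5 * sun * sun  # the third input is ignored


def mean_abs_error(model, rows, targets) -> float:
    """Mean absolute difference between model outputs and targets."""
    return sum(abs(model(r) - t) for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, column, repeats=20, seed=0):
    """Average increase in error after shuffling a single input column."""
    rng = random.Random(seed)
    baseline = mean_abs_error(model, rows, targets)
    increases = []
    for _ in range(repeats):
        shuffled = [r[column] for r in rows]
        rng.shuffle(shuffled)
        # Rebuild every row with the shuffled value in the chosen column.
        perturbed = [r[:column] + [v] + r[column + 1:] for r, v in zip(rows, shuffled)]
        increases.append(mean_abs_error(model, perturbed, targets) - baseline)
    return sum(increases) / repeats


data_rng = random.Random(42)
rows = [[data_rng.uniform(0, 10) for _ in range(3)] for _ in range(200)]
# Assumption: targets are the model's own outputs, so the baseline error is 0.
targets = [black_box(r) for r in rows]

importances = [permutation_importance(black_box, rows, targets, c) for c in range(3)]
for column, importance in enumerate(importances):
    print(f"input {column}: error increase {importance:.3f}")
```

Each call to `permutation_importance` queries the model `repeats * len(rows)` times, which is why cheap compute makes this kind of probing practical. The ignored third input receives an importance of exactly zero, while the two inputs the model actually uses do not.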

Examples of XAI in practice

So in theory, with XAI methods and the right know-how, even complex black-box models can be at least partially interpreted. But what does it look like in practice? In many projects and use cases, interpretability is an important pillar of overall success. Security aspects, lack of trust in model results, possible future regulations and ethical concerns all drive the need for interpretable ML models.

We at [at] are not the only ones who have recognised the importance of these topics: European legislation is also planning to make interpretability a mandatory feature of AI applications in safety-critical and data protection-relevant fields. In this context, we at [at] are working on a number of projects that use new methods to make our AI applications and those of our customers interpretable. One example is the AI Knowledge project, funded with 16 million euros by the Federal Ministry for Economic Affairs and Climate Action. Here, together with our partners from business and research, we are working on making AI models for autonomous driving explainable. Closely related to this topic is reinforcement learning (RL), which has been very successful in solving many problems, but is unfortunately often a black box. For this reason, our staff are working on another project to make RL algorithms interpretable and secure.

In addition, our data scientists are working on a toolbox to make the SHAP method usable even for very complicated data sets. All in all, we at [at] can offer a well-founded catalogue of methods for the interpretability of AI models, derived from current research, whether for the development of a new, interpretable model or for the analysis of your existing models. Please feel free to contact us if you are interested.
