Reinforcement Learning (RL) is an increasingly popular machine learning method that focuses on finding intelligent solutions to complex control problems. In this blog article, we explain how the method works in principle and then show the concrete potential of reinforcement learning in two subsequent articles.

Reinforcement learning can be used for very practical purposes. Google, for example, uses it to control the air conditioning in its data centres and was able to achieve an impressive result: "The adaptive algorithm was able to reduce the energy needed to cool the servers by around 40 percent". (Source: Deepmind.com) But how does reinforcement learning work?


What is Reinforcement Learning?

The term reinforcement learning can be loosely translated as "learning through reinforcement". In general terms, machine learning can be divided into Unsupervised Machine Learning and Supervised Machine Learning. Alongside these two, RL is considered the third of the three main machine learning methods.

In contrast to the other two methods, reinforcement learning does not require any data in advance. Instead, the data is generated and labelled during training, over many runs of a trial-and-error process in a simulation environment.

Reinforcement Learning as a Method on the Way to General Artificial Intelligence

As a result, reinforcement learning enables a form of artificial intelligence that can solve complex control problems without prior human knowledge. Compared to conventional engineering, such tasks can be solved many times faster, more efficiently and, in the ideal case, even optimally. Leading AI researchers regard RL as a promising method for achieving Artificial General Intelligence (AGI).

In short, AGI is the ability of a machine to successfully perform any intellectual task. Like a human being, such a machine must observe different causal relationships and learn from them in order to solve unknown problems in the future.

If you are interested in the distinction between Artificial Intelligence, Artificial General Intelligence and machine learning methods, read our basic article on AI.

One way to replicate this learning process is the method of "trial and error". In other words, reinforcement learning replicates the trial-and-error learning behaviour found in nature. The learning process therefore has links to methods in psychology, biology and neuroscience.

In our Deep Dive, we highlight the interactions between business methods, neuroscience and reinforcement learning in artificial and biological intelligence.

How reinforcement learning works

Reinforcement learning stands for a whole series of individual methods in which a software agent independently learns a strategy. The goal of the learning process is to maximise the cumulative reward within a simulation environment. During training, the agent performs an action within this environment at each time step and receives feedback.

The software agent is not shown in advance which action is best in which situation. Rather, it receives a reward at certain points in time. During training, the agent learns to assess how its actions affect the situation in the simulation environment. On this basis, it can develop a long-term strategy to maximise the reward.
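To make this interaction loop concrete, here is a minimal Python sketch. `CorridorEnv` is a hypothetical toy environment invented for illustration, not part of any library: the agent starts in cell 0 of a short corridor and only receives a reward when it reaches the last cell, which models a delayed reward. A random policy stands in for an agent that has not been trained yet.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: a corridor of n cells.

    The agent starts in cell 0. Action 0 moves left, action 1 moves right.
    A reward of 1.0 is given only when the last cell is reached (delayed reward).
    """

    def __init__(self, n_cells: int = 5, max_steps: int = 50):
        self.n_cells = n_cells
        self.max_steps = max_steps

    def reset(self) -> int:
        self.position = 0
        self.steps = 0
        return self.position  # initial observation

    def step(self, action: int):
        self.steps += 1
        move = 1 if action == 1 else -1
        self.position = min(max(self.position + move, 0), self.n_cells - 1)
        reached_goal = self.position == self.n_cells - 1
        reward = 1.0 if reached_goal else 0.0
        done = reached_goal or self.steps >= self.max_steps
        return self.position, reward, done


def run_episode(env: CorridorEnv, policy) -> float:
    """Run one episode and return the cumulative reward."""
    observation = env.reset()
    total_reward = 0.0
    done = False
    while not done:
        action = policy(observation)                  # the agent chooses an action
        observation, reward, done = env.step(action)  # the environment gives feedback
        total_reward += reward
    return total_reward


rng = random.Random(0)


def random_policy(observation: int) -> int:
    """Untrained agent: ignores the observation and acts at random."""
    return rng.choice([0, 1])


print(run_episode(CorridorEnv(), random_policy))
```

The `reset`/`step` naming loosely follows the convention popularised by OpenAI Gym, but this class is plain Python and not a Gym environment. Training would replace `random_policy` with a policy that improves from the rewards it receives.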

The goal of reinforcement learning: the best possible policy

Simply put, a policy is the learned behaviour of a software agent. A policy specifies which action the agent should execute for each observation it receives from the learning environment in order to maximise the reward.

How can such a policy be represented? One option is a so-called Q-table: a table with all possible observations as rows and all possible actions as columns. During training, the cells are filled with so-called Q-values, which represent the expected future reward of taking that action in that situation.
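The following sketch shows a Q-table being filled by tabular Q-learning, one common way of learning Q-values (the article does not prescribe a specific update rule). The corridor environment as well as the learning rate `alpha`, discount factor `gamma` and exploration rate `epsilon` are illustrative assumptions.

```python
import random

N_STATES = 5            # corridor cells 0..4 (hypothetical toy problem)
ACTIONS = [0, 1]        # 0 = move left, 1 = move right
GOAL = N_STATES - 1     # reward is only given in the last cell


def step(state: int, action: int):
    """Toy environment dynamics: returns (next_state, reward, done)."""
    next_state = min(max(state + (1 if action == 1 else -1), 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def train_q_table(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Fill a Q-table with tabular Q-learning and an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    # One row per observation, one column per action, all values start at 0
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap on episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                best = max(q[state])          # exploit, breaking ties randomly
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            next_state, reward, done = step(state, action)
            # Q-learning update towards reward + gamma * best value of the next state
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train_q_table()
for row_index, row in enumerate(q_table):
    print(row_index, [round(value, 2) for value in row])
```

Each row corresponds to an observation and each column to an action, as described above. The table also shows why the approach does not scale: with, say, 10 observation features discretised into 10 bins each, the table would already need 10^10 (ten billion) rows.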

However, the Q-table also has its limitations: it only works if the action and observation spaces remain small, that is, if there are only a few possible actions and observations. If the software agent has to evaluate many features from the environment, or features with continuous values, a neural network is needed to approximate the values. A common method for this is Deep Q-learning.

In our blog article on deep learning, we not only explain the method but also show how it is applied in practice.

In detail, the neural network is defined with the features of the observation space as the input layer and the actions as the output layer. During training, the values are learned and stored in the network's weights.
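The following NumPy sketch illustrates that structure: observation features go in, one Q-value per action comes out, and the greedy action is the one with the highest Q-value. `QNetwork`, the layer sizes and the random weights are hypothetical. Training is deliberately left out; in Deep Q-learning the outputs are fitted to Q-learning targets, usually with an experience replay buffer and a target network.

```python
import numpy as np


class QNetwork:
    """Minimal Q-network sketch: observation features in, one Q-value per action out.

    Hypothetical sizes; weights are randomly initialised and untrained here.
    """

    def __init__(self, n_features: int, n_actions: int, n_hidden: int = 16, seed: int = 0):
        rng = np.random.default_rng(seed)
        # The learned values live in these weight matrices and bias vectors
        self.w1 = rng.normal(0.0, 0.1, size=(n_features, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.w2 = rng.normal(0.0, 0.1, size=(n_hidden, n_actions))
        self.b2 = np.zeros(n_actions)

    def q_values(self, observation: np.ndarray) -> np.ndarray:
        """Input layer = observation features, output layer = one Q-value per action."""
        hidden = np.maximum(0.0, observation @ self.w1 + self.b1)  # ReLU hidden layer
        return hidden @ self.w2 + self.b2

    def greedy_action(self, observation: np.ndarray) -> int:
        """Pick the action with the highest estimated Q-value."""
        return int(np.argmax(self.q_values(observation)))


# Example: 4 continuous observation features (e.g. sensor readings), 2 actions
net = QNetwork(n_features=4, n_actions=2)
obs = np.array([0.1, -0.3, 0.05, 1.2])
print(net.q_values(obs).shape)  # → (2,)
print(net.greedy_action(obs))
```

Unlike a Q-table, the network can handle continuous inputs directly, and the same weights also accept a batch of observations with shape `(batch, n_features)`.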

For an in-depth technical introduction to reinforcement learning that gives you a basic understanding of reinforcement learning (RL) using a practical example, see our blog post:

Basic prerequisites for using reinforcement learning

When it comes to the practical use of reinforcement learning, the first thing to do is to understand the question properly. Reinforcement learning is not the right solution for every task. In fact, there are probably more use cases where other methods are more suitable than reinforcement learning. Which method fits which use case can be determined, for example, in a use case workshop.

To find out whether reinforcement learning is suitable for a particular problem, you should check whether your problem has some of the following characteristics:

- Can the principle of "trial and error" be applied?

- Is your question a control problem (open-loop or closed-loop control)?

- Is there a complex optimisation task?

- Can the complex problem only be solved to a limited extent with traditional engineering methods?

- Can the task be carried out in a simulated environment?

- Is a high-performance simulation environment available?

- Can the simulation environment be influenced, and can its state be queried?

Learn how large language models such as ChatGPT are improved through the use of Reinforcement Learning from Human Feedback (RLHF).

Reinforcement Learning from Human Feedback in the Field of Large Language Models

Reinforcement learning - the solution is approximated

Many iterations are required before an algorithm works well. This is partly because rewards can be delayed and must first be discovered. The learning process can be modelled as a Markov decision process (MDP). For this, a state space, an action space and a reward must be designed.

Such a simulated learning environment must fulfil an important prerequisite: it must reflect the real world in a simplified way. To do this, the following points must be taken into account:

- A suitable RL algorithm, with a neural network if necessary, must be selected or developed.

- "Iteration epochs" and a clear "goal" must be defined.

- A set of possible "actions" that the agent can perform must be defined.

- "Rewards" must be defined for the agent.

Reinforcement learning is an iterative process in which systems learn rules on their own from an environment designed in this way.
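The components of an MDP can be written down explicitly. The sketch below uses a hypothetical machine-maintenance problem (all states, probabilities and rewards are illustrative assumptions) and solves it with value iteration. Note that value iteration is a dynamic-programming method that needs the transition probabilities to be known in advance; RL algorithms learn without such a model. The example still shows the MDP structure and how the solution is approximated over many iterations.

```python
# Hypothetical MDP: state -> action -> list of (probability, next_state, reward)
MDP = {
    "ok": {
        "run": [(0.8, "ok", 10.0), (0.2, "worn", 10.0)],
        "repair": [(1.0, "ok", 0.0)],
    },
    "worn": {
        "run": [(0.5, "worn", 6.0), (0.5, "broken", 6.0)],
        "repair": [(1.0, "ok", -2.0)],
    },
    "broken": {
        "run": [(1.0, "broken", 0.0)],
        "repair": [(1.0, "ok", -10.0)],
    },
}


def action_value(outcomes, values, gamma):
    """Expected immediate reward plus discounted value of the next state."""
    return sum(p * (r + gamma * values[s2]) for p, s2, r in outcomes)


def value_iteration(mdp, gamma=0.9, tol=1e-8):
    """Repeat Bellman optimality updates until the values stop changing."""
    values = {s: 0.0 for s in mdp}
    while True:
        delta = 0.0
        for s, actions in mdp.items():
            best = max(action_value(o, values, gamma) for o in actions.values())
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break
    # Greedy policy with respect to the converged values
    policy = {
        s: max(actions, key=lambda a: action_value(actions[a], values, gamma))
        for s, actions in mdp.items()
    }
    return values, policy


values, policy = value_iteration(MDP)
print(policy)  # → {'ok': 'run', 'worn': 'repair', 'broken': 'repair'}
```

The state space is the set of keys, the action space the inner keys, and the rewards sit in the transition tuples. Changing a single reward, for example making repairs more expensive, can change the optimal policy.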

Advantages of reinforcement learning

Reinforcement learning is ideally applied when a specific goal is known but the way to reach it is not. For example, a car should get from A to B independently along the optimal route without causing an accident. Unlike in traditional engineering methods, however, humans should not dictate the solution. Instead, a new solution is found with as few specifications as possible.

One of the great advantages of reinforcement learning is that, unlike Supervised Machine Learning and Unsupervised Machine Learning, it requires no special training data. In contrast to Supervised Machine Learning, new and unknown solutions can emerge, rather than solutions merely imitated from the data. It is even possible to reach a new optimal solution that humans do not yet know.

Challenges of reinforcement learning methods

If you want to use reinforcement learning, you need to be aware of some challenges. First and foremost, the learning process itself can be very computationally intensive. Slow simulation environments are often the bottleneck in reinforcement learning projects.

In addition, defining the reward function, also known as reward engineering, is not trivial. It is not always obvious from the outset how the rewards should be defined. Furthermore, optimising the many parameters is very complex. Defining the observation and action spaces is not always easy either.

Last but not least, reinforcement learning involves the dilemma of "exploration vs. exploitation": the question always arises whether it is more worthwhile to take new, unknown paths or to improve existing solutions.
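A common, simple way to handle this trade-off is an epsilon-greedy strategy: with a small probability epsilon the agent explores a random option, otherwise it exploits the option with the best estimated reward so far. The sketch uses a multi-armed bandit with hypothetical option values; `epsilon_greedy_bandit` and its parameters are illustrative.

```python
import random


def epsilon_greedy_bandit(true_means, steps=5000, epsilon=0.1, seed=0):
    """Estimate the value of each option while balancing exploration and exploitation.

    true_means: hypothetical average rewards of the options (unknown to the agent).
    Returns (estimated values, number of times each option was chosen, average reward).
    """
    rng = random.Random(seed)
    n = len(true_means)
    estimates = [0.0] * n   # running average reward per option
    counts = [0] * n
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n)                           # explore: random option
        else:
            arm = max(range(n), key=lambda a: estimates[a])  # exploit: best so far
        reward = rng.gauss(true_means[arm], 1.0)             # noisy feedback
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]  # incremental mean
        total += reward
    return estimates, counts, total / steps


estimates, counts, avg_reward = epsilon_greedy_bandit([0.2, 0.5, 1.0])
print(counts)
print(round(avg_reward, 3))
```

With `epsilon=0.0` the agent never explores and can get stuck with whichever option first looked good; with a very large epsilon it keeps wasting steps on options it already knows are worse.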

Deepen your understanding of the concept of the "Deadly Triad" in reinforcement learning, its implications and approaches. This Deep Dive provides you with an overview of RL concepts, introduction of the "Deadly Triad" and its coping strategies.

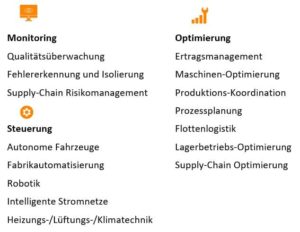

Reinforcement learning in practice: use cases in industry

To give you a better feel for the possible applications of reinforcement learning, we have compiled some more examples from practice. The following overview first shows the broad spectrum of tasks as a whole: reinforcement learning can be applied in the three categories "Optimisation", "Control" and "Monitoring".

Google controls the air conditioning with reinforcement learning

Google is known for being at the forefront of AI development, and reinforcement learning plays an important role there too. Google uses this method for cooling its data centres. The background: Google operates huge data centres that not only consume an enormous amount of electricity but also generate extremely high temperatures. A complex air conditioning system is used to cool them.

With its adaptive algorithm, Google was able to reduce the energy used for server cooling by around 40 per cent.

Reinforcement learning helps to control and steer this complex, dynamic system, which is subject to considerable safety constraints and offers potential for a significant improvement in energy efficiency.

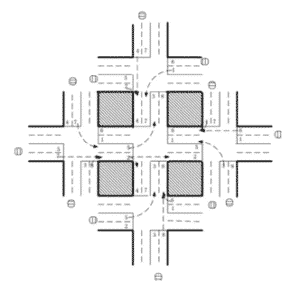

Traffic light control in an intelligent traffic management system

Our road network and traffic guidance system are equally complex and extremely prone to disruption. Above all, the intelligent control of traffic lights is a great challenge, and reinforcement learning is ideally suited to solve this problem. In the paper "Reinforcement learning-based multi-agent system for network traffic signal control", researchers attempted to develop a traffic light control system as a solution to the congestion problem.

Reinforcement learning in the logistics industry: inventory management and fleet management

The logistics sector is excellently suited for reinforcement learning due to its complexity. Inventory management is one example: reinforcement learning can be used to reduce lead times for restocking and to order products so that the available space in the warehouse is used optimally.

Reinforcement learning is also used in fleet management. Here, the aim for many years has been to solve one of the main problems, the "Split Delivery Vehicle Routing Problem" (SDVRP). In traditional route planning, a fleet with a certain number of vehicles of a certain capacity is available to serve a certain number of customers with known demand. Each customer must be served by exactly one vehicle. The aim is to minimise the total distance travelled.

In the split delivery variant (SDVRP), the restriction that each customer must be visited exactly once is removed; in other words, split deliveries are permitted. Reinforcement learning can solve this problem in such a way that as many customers as possible are still served by only one vehicle.

Reinforcement learning in the retail industry

Dynamic pricing is an ongoing and time-critical process in certain sectors such as e-commerce. Reinforcement learning is key when it comes to creating an appropriate pricing strategy depending on supply and demand. This makes it possible to maximise product turnover and profit margins. The pricing agent can be trained on historical data of customers' buying behaviour to provide suggestions in the product pricing process.

Read about the use of reinforcement learning in industry and other relevant sectors in our technical article:

Conclusion: reinforcement learning has enormous potential for disruption

Reinforcement learning is particularly fascinating because the method has very close ties to psychology, biology and the neurosciences. With this learning method, algorithms can develop abilities similar to those of humans. The basic principle is always "trial and error". With this comparatively simple principle, complex control and optimisation problems that are difficult to solve with traditional methods can be tackled.

Reinforcement learning is one of the most interesting and rapidly developing research areas. Its move into practice is gaining momentum and can make the difference in terms of competitive advantage. With a suitable simulation environment and reward system, reinforcement learning can lead to impressive results, provided there is a suitable question and an AI strategy in which reinforcement learning can be embedded.

Frequently asked questions about reinforcement learning

Reinforcement learning (RL) differs from other types of learning, such as supervised and unsupervised learning, in its basic approach and paradigm. Unlike supervised learning, where a model learns from labelled examples, and unsupervised learning, where the model tries to find patterns and structures in unlabelled data, RL is about training agents to make sequential decisions in an environment by interacting with it and receiving feedback in the form of rewards or punishments. The RL agent explores the environment through trial and error, learning from the consequences of its actions and trying to maximise a cumulative reward signal over time, rather than having explicitly correct answers or predefined structures. This trial-and-error nature of RL allows it to cope with dynamic, complex and uncertain environments, making it suitable for tasks such as games, robotics and autonomous systems.

Reinforcement Learning (RL) has its roots in behavioural psychology and early work on learning theories, but its modern development can be traced to the pioneering work of researchers such as Arthur Samuel in the 1950s and Richard Sutton in the 1980s. Samuel's pioneering work on a self-learning checkers program laid the foundation for the trade-off between exploration and exploitation and for learning from interactions in RL. Sutton's research on temporal-difference learning, together with the Q-learning algorithm introduced by Christopher Watkins, further developed RL methods. With the integration of neural networks in the 1990s and significant breakthroughs in Deep Reinforcement Learning in the early 2010s, the field continued to evolve, as evidenced by the success of DeepMind's DQN algorithm in learning Atari games. As computing power and data availability increased, RL found applications in various business domains. Its usefulness in business use cases became clear as RL algorithms demonstrated impressive capabilities in optimising online advertising, recommender systems, dynamic pricing, inventory management and other decision-making problems with complex and uncertain environments, leading to its adoption and exploration in numerous business environments.

Yes, reinforcement learning (RL) is widely used in modern AI applications and has become very important in recent years. RL has been shown to be effective in solving complex decision problems where an agent learns to interact with an environment to maximise cumulative rewards over time. In modern AI applications, RL is used in various fields such as robotics, autonomous systems, gaming, natural language processing, finance, healthcare, recommender systems and more. For example, RL is used to train autonomous vehicles to navigate in real-world environments, optimise energy consumption in smart grids, improve the dialogue capabilities of virtual assistants, and even discover new drug molecules in the pharmaceutical industry. With advances in algorithms and computational power, RL continues to find new applications and shows promise for solving complicated problems in various industries.


Applying reinforcement learning (RL) to business problems involves three key steps: first, defining the characteristics of the RL problem, including identifying the state space, which represents the relevant variables that describe the business environment; the action space, which outlines the feasible decisions that the RL agent can make; and the learnable strategy, which specifies how the agent's actions are selected based on the observed states. Secondly, it is crucial to find the appropriate reward function as it determines the behaviour of the RL agent. This may require reward engineering to design a function that maximises the desired outcomes while minimising the risks and potential pitfalls. Finally, creating a simulation environment is essential for effective training of the RL model. This simulation provides a safe space for the agent to explore and learn from interactions without impacting the real world, allowing for efficient learning and fine-tuning before the RL solution is deployed in the actual business context.
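These ingredients can be sketched as a small simulation. `InventoryEnv` below is a hypothetical inventory-management environment; the capacity, prices, costs and uniform demand model are illustrative assumptions, not taken from a real business. Its `reset`/`step` methods mirror the interaction pattern an RL library would expect, but the class does not depend on any library.

```python
import random
from dataclasses import dataclass


@dataclass
class InventoryEnv:
    """Hypothetical inventory simulation with a reset/step interface.

    State space:  current stock level, an integer in [0, capacity]
    Action space: number of units to order, an integer in [0, max_order]
    Reward:       sales revenue minus ordering and holding costs (illustrative prices)
    """
    capacity: int = 20
    max_order: int = 10
    price: float = 5.0
    order_cost: float = 2.0
    holding_cost: float = 0.5
    mean_demand: int = 4
    seed: int = 0

    def reset(self) -> int:
        self.rng = random.Random(self.seed)  # reproducible demand sequence
        self.stock = self.capacity // 2
        return self.stock

    def step(self, order: int):
        if not 0 <= order <= self.max_order:
            raise ValueError("order outside the action space")
        # Deliveries cannot exceed warehouse capacity; only delivered units are paid for
        delivered = min(order, self.capacity - self.stock)
        self.stock += delivered
        demand = self.rng.randint(0, 2 * self.mean_demand)  # random customer demand
        sold = min(demand, self.stock)
        self.stock -= sold
        reward = (self.price * sold
                  - self.order_cost * delivered
                  - self.holding_cost * self.stock)
        return self.stock, reward


env = InventoryEnv()
state = env.reset()
total = 0.0
for _ in range(30):  # a fixed rule, not a trained agent: order 4 units per day
    state, reward = env.step(4)
    total += reward
print(state, round(total, 2))
```

A trained agent would replace the fixed ordering rule. Because the simulation is cheap and resettable, it can be run for many episodes without affecting real stock, which is exactly the role of the simulation environment described above.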

Once you have identified a problem that RL could solve, collected data and selected an RL algorithm, you can evaluate the potential of RL for your business by:

1. Prototyping. Use (or develop) a simplified digital twin or simulation to train an initial experimental reinforcement learning agent that interacts with this environment. This way you can see how the agent behaves and identify potential problems.

2. Estimate the costs and benefits of RL. It is important to assess the operational costs and benefits of reinforcement learning agents before moving the solution into production. The benefits of RL could include improved performance, reduced costs or increased customer satisfaction. Based on your assessment, you can decide whether to implement RL in your organisation.

3. Create an RL roadmap. Once you have decided that reinforcement learning is the right solution for your problem, it is important to develop a roadmap for training, evaluating, deploying and maintaining your RL agent in your production system.

Some best practices for applying RL to business use cases are:

1. Start with a simple problem. It is often helpful to start with a simplified problem when applying RL to business use cases. This will help you understand the basics of RL and identify the challenges you need to overcome.

2. Use a simulation. If possible, it is helpful to test your RL agent with a simulation. This way you can test your agent in a controlled environment and make sure it works properly.

3. Use a scalable framework. If you plan to use your RL agent in production, it is important to use a scalable framework. This way you can train and deploy your agent on a large scale.

A digital twin is a virtual representation or simulation of a real object, system or process. It captures the data and behaviour of its physical counterpart in real time, enabling continuous monitoring, analysis and optimisation. In the context of reinforcement learning (RL) for business use cases, a digital twin is critical because it provides a safe and controlled environment for training RL agents. By simulating the business process or environment in a digital twin, RL algorithms can explore and learn from interactions without risking real-world consequences. This enables more efficient learning, faster experimentation and fine-tuning of decision strategies, leading to improved performance and optimised results when the RL agent is used in the actual business context. The digital twin reduces the risks associated with using RL, minimises potential disruptions and helps organisations make informed decisions, making it a valuable resource when applying RL to solve complex business challenges.

Some of the most important RL algorithms for business applications are:

1. Deep Q-learning (DQN): DQN is a powerful algorithm that can be used to solve a variety of problems. It is particularly well suited for problems with a discrete action space and high-dimensional observations, such as images.

2. Trust region policy optimisation (TRPO): TRPO is a robust algorithm that can be used to solve problems with high-dimensional state and action spaces.

3. Proximal policy optimisation (PPO): PPO is a newer algorithm that is now one of the most widely used RL methods. It simplifies TRPO's trust-region constraint with a clipped objective and works with both discrete and continuous action spaces.

4. Asynchronous Advantage Actor-Critic (A3C): A3C uses an actor-critic architecture and runs several agents asynchronously in parallel, which stabilises training, speeds up convergence and improves exploration in reinforcement learning.
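To show what PPO's clipped objective means, here is a minimal pure-Python sketch of the clipped surrogate objective L = mean(min(r * A, clip(r, 1 - eps, 1 + eps) * A)), where r is the probability ratio between the new and the old policy for the action taken and A is the advantage estimate. The function name and inputs are illustrative; a real implementation computes this on tensors, maximises it by gradient ascent and also needs the policy network and advantage estimation, which are omitted here.

```python
import math


def ppo_clipped_objective(new_log_probs, old_log_probs, advantages, clip_eps=0.2):
    """PPO clipped surrogate objective (to be maximised), averaged over samples.

    new_log_probs / old_log_probs: log-probabilities of the actions actually taken
    under the new and the old policy; advantages: advantage estimates A_t.
    """
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        ratio = math.exp(new_lp - old_lp)  # r_t = pi_new(a|s) / pi_old(a|s)
        clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the minimum removes the incentive to move the ratio outside [1-eps, 1+eps]
        total += min(ratio * adv, clipped_ratio * adv)
    return total / len(advantages)


# A large policy change on a positive-advantage action only counts up to 1 + eps
print(ppo_clipped_objective([1.0], [0.0], [1.0]))  # → 1.2
```

The clipping is what keeps each policy update small, the same goal TRPO pursues with an explicit trust-region constraint.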

Reward engineering is the process of developing a reward function that effectively guides an RL agent to learn the desired behaviour. This can be a difficult task as the reward function must be both informative and challenging enough to encourage the agent to learn the desired behaviour.

Reward hacking is a phenomenon that occurs when an RL agent learns to exploit an imperfect reward function to maximise its own reward, even if this behaviour does not correspond to the desired behaviour. This can be a problem as it can lead to the agent learning behaviours that are not actually beneficial.

There are important challenges associated with reward engineering, including:

1. Defining the desired behaviour: It is often difficult to precisely define the desired behaviour that an RL agent should learn. This can make it difficult to design a reward function that effectively guides the agent towards the desired behaviour.

2. Reward hacking: It is important to design reward functions that are robust to reward hacking. This means that the reward function should not be easily exploited by the agent to learn unintended behaviours.

There are several ways to avoid reward hacking, including:

1. Use a complex reward function: A complex reward function can be less easily exploited by the agent.

2. Use a reward function based on multiple objectives: A reward function based on multiple goals is less easily manipulated by the agent.

3. Use an adaptive reward function: An adaptive reward function can be adjusted over time to prevent the agent from exploiting it.
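As an illustration of a reward function based on multiple objectives, the sketch below combines energy use and a temperature limit for a cooling controller, in the spirit of the data centre example above. The function `cooling_reward`, its weights and the 27 °C limit are hypothetical and not Google's actual reward.

```python
def cooling_reward(energy_kwh: float, temperature_c: float,
                   max_temperature_c: float = 27.0,
                   energy_weight: float = 1.0,
                   violation_weight: float = 10.0) -> float:
    """Hypothetical two-objective reward for a cooling controller (illustrative numbers).

    Objective 1: consume as little energy as possible.
    Objective 2: keep the temperature below a safety limit.
    """
    violation = max(0.0, temperature_c - max_temperature_c)
    return -energy_weight * energy_kwh - violation_weight * violation


# Switching the cooling off saves energy but is penalised for overheating
print(cooling_reward(energy_kwh=0.0, temperature_c=30.0))  # → -30.0
print(cooling_reward(energy_kwh=3.0, temperature_c=26.0))  # → -3.0
```

If `violation_weight` were set to 0, the highest reward would come from switching the cooling off entirely: a simple example of the reward hacking described above. Choosing the weights is itself part of reward engineering.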

No, RLHF can lead to better results, but this is not always the case. Since RL algorithms are resistant to bias to some extent (depending on the reward technique), more human bias may be introduced into the RL agent when performing RLHF. It is also possible that the human experts lack knowledge, which may reduce the performance of the agent. The effectiveness of RLHF depends on the quality and relevance of the feedback. If human feedback is noisy, inconsistent or biased, this can lead to suboptimal or even detrimental performance. Developing effective feedback mechanisms and ensuring reliable and informative feedback are critical to the success of RLHF.

Some advantages are:

1. Sample efficiency: RLHF can significantly improve sample efficiency compared to traditional RL methods. By using human feedback or demonstrations, RLHF can more effectively guide the learning process and reduce the number of interactions with the environment required to learn a successful strategy.

2. Faster convergence: Incorporating human feedback can help the RL agent learn a good strategy faster. Instead of relying only on random exploration and trial-and-error, RLHF can use valuable information from human experts to speed up the learning process.

3. Safe learning: In situations where exploring the environment could be risky or costly (e.g. autonomous vehicles or healthcare), RLHF can enable safe learning. Human feedback can help prevent the agent from taking dangerous actions, reducing the likelihood of catastrophic errors during the learning process.

4. Guidance through human expertise: RLHF enables learning agents to benefit from human expertise and knowledge. Humans can provide high-quality feedback so that the agent can learn from the accumulated experience of experts, leading to more effective strategies.

In summary, RLHF offers advantages in terms of sample efficiency, faster convergence and safe learning thanks to guidance from human expertise. However, its effectiveness depends on high-quality feedback, and it faces challenges in scaling, avoiding bias and achieving generalisation.
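One common way of turning human feedback into a training signal, used for example when training large language models with RLHF, is to let people compare two outputs and train a reward model so that it scores the preferred output higher. The sketch below shows the pairwise (Bradley-Terry style) loss for a single comparison. The function name is hypothetical, and in practice the two rewards come from a neural reward model trained on many such comparisons.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise preference loss: -log(sigmoid(reward_chosen - reward_rejected)).

    Small when the reward model scores the human-preferred output higher,
    large when it prefers the rejected output.
    """
    margin = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-margin))
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


print(round(preference_loss(0.0, 0.0), 4))  # → 0.6931 (no preference learned yet)
print(preference_loss(2.0, -1.0) < preference_loss(-1.0, 2.0))  # → True
```

Noisy or inconsistent human comparisons feed directly into this loss, which is why the quality of the feedback matters so much for RLHF.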

Some resources to learn more about RL are:

1. The course "Reinforcement Learning" by David Silver: This is a free online course that provides a comprehensive introduction to RL.

2. The textbook "Reinforcement Learning: An Introduction" by Sutton and Barto: This is a classic textbook on reinforcement learning.

3. OpenAI Gym: This is a collection of RL environments that can be used to test RL algorithms.

4. The RL Reddit forum: This is a forum where RL researchers and practitioners can discuss RL issues and exchange ideas.

There are several Python libraries that are useful for RL, including:

1. TensorFlow: TensorFlow is a popular deep learning library that can also be used for RL. It offers a range of tools and resources for RL researchers and practitioners.

2. PyTorch: PyTorch is another popular deep learning library that can also be used for RL. It is similar to TensorFlow, but has a different syntax.

3. OpenAI Gym: OpenAI Gym is a collection of environments that can be used to test and evaluate RL algorithms. It offers a variety of environments including games, simulated robots and financial markets.

4. RLlib: RLlib is a library built on the distributed computing framework Ray that provides a high-level interface for building and training RL agents. It is easy to use and scales to large distributed training runs.

5. Stable Baselines: Stable Baselines is a library that provides implementations of a number of RL algorithms, including DQN, PPO and TRPO. It is easy to use and efficient.

6. Keras-RL: Keras-RL is a library that provides a way to create and train RL agents with Keras. It is a good choice for researchers who are familiar with Keras.

7. MuJoCo: MuJoCo is a physics engine that can be used to create realistic RL environments. It is a good choice for researchers who need to create realistic environments for their experiments.
